Despite a common definition for word (as stated on Wikipedia) being:

The largest possible address size, used to designate a location in memory, is typically a hardware word (here, "hardware word" means the full-sized natural word of the processor, as opposed to any other definition used).

x86 systems, according to some sources, note it's treated as 16 bits:

In the x86 PC (Intel, AMD, etc.), although the architecture has long supported 32-bit and 64-bit registers, its native word size stems back to its 16-bit origins, and a "single" word is 16 bits. A "double" word is 32 bits. See 32-bit computer and 64-bit computer.

Yet Intel's official documentation (sdm vol 2, section 1.3.1) states:

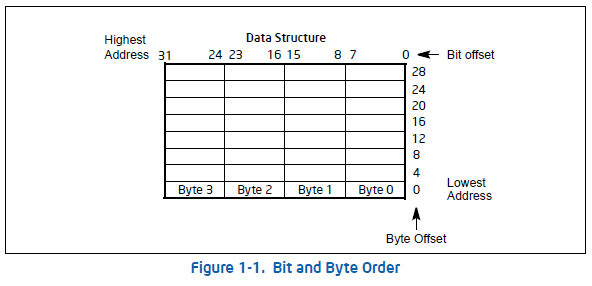

this means the bytes of a word are numbered starting from the least significant byte. Figure 1-1 illustrates these conventions.

and Figure 1-1 shows 4 bytes in little endian sequence, not 2 bytes or 8 bytes (as the varying definition by sources linked above would suggest) of word in the x86-64 context:

And where my confusion really lies about all this is how instructions are fetched and parsed. I'm writing an emulator and once I parse a PE formatted executable and get to the text section, if I'm to follow the 4-byte little endian format, doesn't that mean the 4th byte would be parsed first?

Let's make up some bytes for example:

.text segment buffer:

< 0x10, 0x1A, 0x1B, 0x1C, 0x1D, 0x1E, 0x1F, 0x20 > ....

Would I parse the first instruction as 1C, 1B, 1A, 10, 20, 1F, 1E, 1D ... (and so on, being variable length there's obviously potentially more words to read depending on what the real bytes are here)?