Both the optimization manual and the datasheet of the processor family (Section 2.4.2) mention that the L1 data cache is 8-way associative. Another source is InstLatx64, which provides cpuid dumps for many processors, including Ice Lake processors. Take, for example, the dump for the i7-1065G7:

CPUID 00000004: 1C004121-02C0003F-0000003F-00000000 [SL 00]

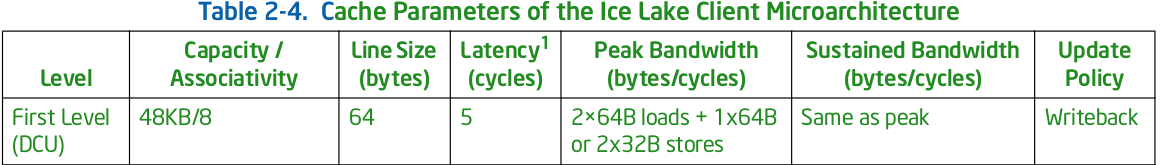

Cache information can be found in cpuid leaf 0x4. The Intel SDM Volume 2 discusses how to decode these bytes. Bits 31-22 of EBX (the second 32-bit value from the left) represent the number of ways minus one. These bits in binary are 1011, which is 11 in decimal, so cpuid says that there are 12 ways. Other information we can obtain from here is that the L1 data cache has 64 sets (ECX + 1) and a 64-byte line size, for a total size of 12 ways × 64 sets × 64 bytes = 48KB, and that it uses the simple addressing scheme (bit 2 of EDX is zero). So based on the cpuid information, bits 11-6 of the address represent the cache set index.
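The decoding above can be sketched in Python as follows. This is a minimal sketch that decodes the dumped hex values rather than executing cpuid. It assumes the dump lists the registers in the order EAX-EBX-ECX-EDX (consistent with EBX being the second value), and the field positions follow the leaf 0x4 description in SDM Volume 2. The function name `decode_cpuid_leaf4` is hypothetical.

```python
def decode_cpuid_leaf4(eax: int, ebx: int, ecx: int, edx: int) -> dict:
    """Decode one cache descriptor from cpuid leaf 0x4 (deterministic cache parameters)."""
    ways = ((ebx >> 22) & 0x3FF) + 1        # EBX[31:22]: ways of associativity - 1
    partitions = ((ebx >> 12) & 0x3FF) + 1  # EBX[21:12]: physical line partitions - 1
    line_size = (ebx & 0xFFF) + 1           # EBX[11:0]: coherency line size - 1
    sets = ecx + 1                          # ECX: number of sets - 1
    return {
        "type": eax & 0x1F,                 # EAX[4:0]: 1 = data cache
        "level": (eax >> 5) & 0x7,          # EAX[7:5]: cache level
        "ways": ways,
        "partitions": partitions,
        "line_size": line_size,
        "sets": sets,
        "size_bytes": ways * partitions * line_size * sets,
        "complex_indexing": bool((edx >> 2) & 1),  # EDX[2]: 0 = simple indexing
    }


# Values taken from the i7-1065G7 dump line (assumed order: EAX-EBX-ECX-EDX).
info = decode_cpuid_leaf4(0x1C004121, 0x02C0003F, 0x0000003F, 0x00000000)

# Only meaningful for power-of-two line sizes and set counts with simple indexing.
offset_bits = info["line_size"].bit_length() - 1  # 64-byte line -> 6 offset bits
index_bits = info["sets"].bit_length() - 1        # 64 sets -> 6 index bits

print(info["level"], info["ways"], info["sets"], info["size_bytes"] // 1024)
print(f"set index = address bits {offset_bits + index_bits - 1}-{offset_bits}")
```

Running it prints `1 12 64 48` followed by `set index = address bits 11-6`, matching the numbers derived by hand above.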

So which one is right? The optimization manual could be wrong (and that wouldn't be the first time), but the cpuid dump could also be buggy (and that also wouldn't be the first time). Both could be wrong, but historically this is much less likely. Other examples of discrepancies between the manual and cpuid information are discussed here, so we know that errors exist in both sources. Moreover, I'm not aware of any other Intel source that mentions the number of ways in the L1D. Of course, non-Intel sources could be wrong as well.

Having 8 ways with 96 sets (48KB / (8 × 64 bytes) = 96, which is not a power of two) would be an unusual design, and such a design is unlikely to ship with nothing more than a single number in the optimization manual to document it (although that doesn't necessarily mean that the cache has to have 12 ways). This by itself makes it more likely that the manual is wrong here.
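The set-count arithmetic can be checked with a few lines of Python. This is just a sketch of the capacity formula (sets = size / (ways × line size)), assuming the 48KB size and 64-byte lines reported by cpuid; the helper names are hypothetical.

```python
SIZE = 48 * 1024  # L1D capacity reported by cpuid leaf 0x4 (bytes)
LINE = 64         # cache line size (bytes)


def num_sets(size: int, ways: int, line: int) -> int:
    # sets = capacity / (ways * line size)
    return size // (ways * line)


def is_power_of_two(n: int) -> bool:
    return n > 0 and n & (n - 1) == 0


for ways in (8, 12):
    sets = num_sets(SIZE, ways, LINE)
    print(ways, sets, is_power_of_two(sets))
```

This prints `8 96 False` and `12 64 True`: only the 12-way organization gives a power-of-two number of sets, which is what allows a plain 6-bit index taken directly from address bits.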

Fortunately, Intel does document implementation bugs in its processors in the specification update documents. We can check the specification update document for the Ice Lake processors, which you can find here. Two cpuid bugs are documented there:

CPUID TLB Information is Inaccurate

I've already discussed this issue in my answer on Understanding TLB from CPUID results on Intel. The second bug is:

CPUID L2 Cache Information May Be Inaccurate

This is not relevant to your question.

The fact that the spec update document lists some cpuid bugs, but none concerning the L1 data cache information in leaf 0x4, strongly suggests that this information was validated by Intel and is accurate. So the optimization manual and the datasheet are probably wrong in this case.

1 Software-visible latency/bandwidth will vary depending on access patterns and other factors.