The high-order bits are reserved in case the virtual address width is increased in the future, so you can't simply use them like that.

The AMD64 architecture defines a 64-bit virtual address format, of which the low-order 48 bits are used in current implementations (...) The architecture definition allows this limit to be raised in future implementations to the full 64 bits, extending the virtual address space to 16 EB (2^64 bytes). This is compared to just 4 GB (2^32 bytes) for the x86.

http://en.wikipedia.org/wiki/X86-64#Architectural_features

More importantly, according to the same article [Emphasis mine]:

... in the first implementations of the architecture, only the least significant 48 bits of a virtual address would actually be used in address translation (page table lookup). Further, bits 48 through 63 of any virtual address must be copies of bit 47 (in a manner akin to sign extension), **or the processor will raise an exception**. Addresses complying with this rule are referred to as "canonical form."

As the CPU will check the high bits even if they're unused, they're not really "irrelevant". You need to make sure that the address is canonical before using the pointer.
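
The canonical-form rule quoted above can be checked in software before a pointer is used. The following is a minimal sketch, assuming 48-bit virtual addresses and 64-bit pointers; the helper name `is_canonical48` is made up for illustration.

```c
#include <assert.h>   /* static_assert (C11) */
#include <stdbool.h>
#include <stdint.h>

static_assert(sizeof(uintptr_t) == 8, "this sketch assumes 64-bit pointers");

/* Hypothetical helper: true if bits 63..47 of `addr` are all zeros or
   all ones, i.e. bits 48..63 are copies of bit 47. */
static inline bool is_canonical48(uintptr_t addr)
{
    const uintptr_t top17 = addr >> 47;   /* bits 63..47 */
    return top17 == 0 || top17 == 0x1FFFF;
}
```

For instance, `0x00007FFFFFFFFFFF` and `0xFFFF800000000000` pass this check, while `0x0000800000000000` does not.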

Some recent CPUs can optionally ignore the high bits, checking only that the topmost bit matches bit 47 (4-level paging, PML4) or bit 56 (5-level paging, PML5). Intel Linear Address Masking (LAM) can be enabled by the kernel on a per-process basis, for user space, the kernel, or both. AMD Upper Address Ignore (UAI) is similar, and ARM64 has a comparable feature, Top Byte Ignore (TBI). These features make storing data in pointers both more efficient and easier: you don't have to manually strip the data out before dereferencing the pointer or passing it to a function that isn't aware of the tagging.

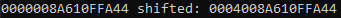

That said, in x86_64 you're still free to use the high 16 bits if needed (if the virtual address is not wider than 48 bits, see below), but you have to check and fix the pointer value by sign-extending it before dereferencing.

Note that casting the pointer value to long is not the correct way to do it, because long is not guaranteed to be wide enough to store a pointer (it is only 32 bits wide on 64-bit Windows, for example). You need to use uintptr_t or intptr_t.

    #include <stdint.h> // uintptr_t, intptr_t, uint8_t

    int *p1 = &val; // original pointer
    uint8_t data = ...;
    // Keeps only the low 48 bits (the address part)
    const uintptr_t MASK = ((uintptr_t)1 << 48) - 1;

    // === Store data into the pointer ===
    // Note: To be on the safe side and future-proof (because future implementations
    //     can increase the number of significant bits in the pointer), we should
    //     store values from the most significant bits down to the lower ones
    // Widen data before shifting: shifting a promoted int by 56 is undefined
    int *p2 = (int *)(((uintptr_t)p1 & MASK) | ((uintptr_t)data << 56));

    // === Get the data stored in the pointer ===
    data = (uintptr_t)p2 >> 56;

    // === Dereference the pointer ===
    // Sign extend first to make the pointer canonical
    // Note: Technically this is implementation defined. You may want a more
    //     standard-compliant way to sign-extend the value
    intptr_t p3 = (intptr_t)((uintptr_t)p2 << 16) >> 16;
    val = *(int *)p3;
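
The comment in the snippet above mentions wanting a more standard-compliant way to sign-extend. One possibility is to stay in unsigned arithmetic the whole time, as in this sketch. It assumes 48-bit virtual addresses and 64-bit pointers, and `canonicalize48` is a name chosen here for illustration.

```c
#include <assert.h>   /* static_assert (C11) */
#include <stdint.h>

static_assert(sizeof(uintptr_t) == 8, "this sketch assumes 64-bit pointers");

/* Hypothetical helper: drop whatever is stored in bits 63..48 and replace
   it with copies of bit 47, using only unsigned (wrapping) arithmetic. */
static inline uintptr_t canonicalize48(uintptr_t tagged)
{
    const uintptr_t low48 = tagged & 0x0000FFFFFFFFFFFFULL;
    const uintptr_t sign  = (uintptr_t)1 << 47;
    /* If bit 47 is clear this is a no-op; if it is set, the subtraction
       wraps modulo 2^64 and fills bits 63..48 with ones. */
    return (low48 ^ sign) - sign;
}
```

The result can then be cast back with `(int *)canonicalize48((uintptr_t)p2)` before dereferencing.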

WebKit's JavaScriptCore and Mozilla's SpiderMonkey engine, as well as LuaJIT, use this in the NaN-boxing technique. If the value is a NaN, the low 48 bits store the pointer to the object and the high 16 bits serve as tag bits; otherwise it's a double value.
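
To make the idea concrete, here is a deliberately simplified NaN-boxing sketch. It is not the encoding used by any of those engines: the tag value `PTR_TAG`, the `Value` type and the function names are all invented for this example, and it assumes 64-bit IEEE 754 doubles and user-space pointers whose top 16 bits are zero.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>
#include <string.h>

static_assert(sizeof(double) == 8 && sizeof(uintptr_t) == 8,
              "this sketch assumes 64-bit doubles and pointers");

typedef struct { uint64_t bits; } Value;      /* hypothetical boxed value */

#define TAG_MASK 0xFFFF000000000000ULL        /* high 16 bits: tag        */
#define PTR_TAG  0xFFFC000000000000ULL        /* made-up quiet-NaN tag    */
#define PAYLOAD  0x0000FFFFFFFFFFFFULL        /* low 48 bits: pointer     */

static inline Value box_double(double d)
{
    Value v;
    memcpy(&v.bits, &d, sizeof d);            /* reinterpret the bits */
    return v;
}

static inline Value box_ptr(void *p)
{
    const uintptr_t a = (uintptr_t)p;
    assert((a & ~PAYLOAD) == 0);              /* must fit in 48 bits */
    Value v = { PTR_TAG | a };
    return v;
}

static inline bool is_ptr(Value v) { return (v.bits & TAG_MASK) == PTR_TAG; }

static inline double unbox_double(Value v)
{
    double d;
    memcpy(&d, &v.bits, sizeof d);
    return d;
}

static inline void *unbox_ptr(Value v)
{
    return (void *)(uintptr_t)(v.bits & PAYLOAD);
}
```

A real implementation also has to make sure that a genuine NaN produced by arithmetic never carries the pointer tag, typically by canonicalizing NaNs when they are boxed; this sketch leaves that out.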

Previously, Linux also used bit 63 of the GS base address to indicate whether the value was written by the kernel.

In reality you can usually use bit 47 (the 48th bit), too: most modern 64-bit OSes split the address space in half between kernel and user space, so bit 47 is always zero in user-space pointers and you have the 17 top bits free for use.

You can also use the lower bits to store data. This is called a tagged pointer. If int is 4-byte aligned, then the 2 low bits of its address are always 0 and you can use them, just like on 32-bit architectures. For 64-bit values you can use the 3 low bits, because they're already 8-byte aligned. Again, you need to clear those bits before dereferencing.

    int *p1 = &val; // the pointer we want to store the value into
    int tag = 1;    // must fit in the 2 low bits (0..3)
    const uintptr_t MASK = ~(uintptr_t)0x03;

    // === Store the tag ===
    int *p2 = (int *)(((uintptr_t)p1 & MASK) | (uintptr_t)tag);

    // === Get the tag ===
    tag = (uintptr_t)p2 & 0x03;

    // === Get the referenced data ===
    // Clear the 2 tag bits before using the pointer
    uintptr_t p3 = (uintptr_t)p2 & MASK;
    val = *(int *)p3;

One famous user of this is the V8 engine with its Smi (small integer) optimization. The lowest bit of the address serves as a type tag:

- if it's 1, the value is a pointer to the real data (objects, floats or bigger integers). The next higher bit (w) indicates whether the pointer is weak or strong. Just clear the tag bits and dereference it

- if it's 0, it's a small integer. In 32-bit V8 or 64-bit V8 with pointer compression it's a 31-bit int; do a signed right shift by 1 to restore the value. In 64-bit V8 without pointer compression it's a 32-bit int in the upper half

    32-bit V8
                |----- 32 bits -----|
    Pointer:    |_____address_____w1|
    Smi:        |___int31_value____0|

    64-bit V8
                |----- 32 bits -----|----- 32 bits -----|
    Pointer:    |________________address______________w1|
    Smi:        |____int32_value____|0000000000000000000|

https://v8.dev/blog/pointer-compression
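
The two Smi layouts in the diagrams can be encoded and decoded roughly as follows. This is only a sketch of the bit layout described above, not V8's actual code; all function names are made up for the example.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Low bit 0 means "small integer", low bit 1 means "pointer". */
static inline bool is_smi(uint64_t word) { return (word & 1u) == 0; }

/* 32-bit layout: a 31-bit integer stored in bits 31..1. */
static inline uint32_t smi31_encode(int32_t v)
{
    assert(v >= -(1 << 30) && v < (1 << 30));   /* must fit in 31 bits */
    return (uint32_t)v << 1;
}

static inline int32_t smi31_decode(uint32_t word)
{
    assert(is_smi(word));
    /* The low bit is 0, so dividing by 2 is exact and gives the same
       result as a signed right shift by 1. The unsigned-to-signed cast
       assumes two's complement, which holds on mainstream platforms. */
    return (int32_t)word / 2;
}

/* 64-bit layout without pointer compression: int32 in the upper half. */
static inline uint64_t smi32_encode(int32_t v)
{
    return (uint64_t)(uint32_t)v << 32;
}

static inline int32_t smi32_decode(uint64_t word)
{
    assert(is_smi(word));
    return (int32_t)(uint32_t)(word >> 32);
}

/* Pointer case: clear the tag bit and the weak bit (w) to get the address. */
static inline uint64_t untag_pointer(uint64_t word)
{
    assert(!is_smi(word));
    return word & ~(uint64_t)3;
}
```

For example, `smi31_encode(7)` yields `14`, and decoding it gives `7` back.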

As commented below, Intel has published 5-level paging (PML5), which provides a 57-bit virtual address space. If you're on such a system, you can only use the 7 high bits.
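
Since the number of free bits depends on the virtual address width, tagging code can take that width as a parameter and store the tag from the most significant bit downwards. The following sketch does this on plain 64-bit integers; `free_high_bits`, `tag_top` and `top_tag` are hypothetical helper names, and the caller is assumed to know `va_bits` (48 or 57 on x86-64).

```c
#include <assert.h>
#include <stdint.h>

/* Number of high bits not used for addressing: 48 -> 16, 57 -> 7. */
static inline unsigned free_high_bits(unsigned va_bits)
{
    assert(va_bits >= 1 && va_bits <= 64);
    return 64 - va_bits;
}

/* Store `tag` in the topmost `tag_bits` bits of the address value. */
static inline uint64_t tag_top(uint64_t addr, uint64_t tag,
                               unsigned tag_bits, unsigned va_bits)
{
    assert(tag_bits >= 1 && tag_bits <= free_high_bits(va_bits));
    assert(tag < ((uint64_t)1 << tag_bits));
    const uint64_t addr_mask = ~(uint64_t)0 >> tag_bits;  /* clears the tag field */
    return (addr & addr_mask) | (tag << (64 - tag_bits));
}

/* Read the tag back from the topmost `tag_bits` bits. */
static inline uint64_t top_tag(uint64_t tagged, unsigned tag_bits)
{
    assert(tag_bits >= 1 && tag_bits <= 63);
    return tagged >> (64 - tag_bits);
}
```

Before dereferencing, the tag field still has to be replaced by copies of bit `va_bits - 1` so that the address is canonical again.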

You can still use some workarounds to get more free bits, though. First, you can try to use 32-bit pointers on a 64-bit OS. On Linux, if the x32 ABI is allowed, pointers are only 32 bits long. On Windows, just clear the /LARGEADDRESSAWARE flag and pointers have at most 32 significant bits, so you can use the upper 32 bits for your own purposes. See "How to detect X32 on Windows?". Another way is to use some pointer compression tricks; see "How does the compressed pointer implementation in V8 differ from JVM's compressed Oops?"

You can get even more bits by requesting that the OS allocate memory only in the low region. For example, if you can ensure that your application never uses more than 64 MB of memory, then you need only a 26-bit address. If all allocations are also 32-byte aligned, the 5 low bits are always zero, so only 26 - 5 = 21 bits carry information and you can store 64 - 21 = 43 bits of data in the pointer!
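
The arithmetic above can be sketched as a packing scheme. This example works on offsets into a hypothetical 64 MB arena rather than on real pointers (if the arena were mapped at address 0, the offset would be the address itself); the constants and function names are invented for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define ARENA_BITS   26u                        /* 64 MB = 2^26 bytes     */
#define ALIGN_BITS   5u                         /* 32-byte alignment      */
#define INDEX_BITS   (ARENA_BITS - ALIGN_BITS)  /* 21 significant bits    */
#define PAYLOAD_BITS (64u - INDEX_BITS)         /* 43 bits left for data  */

/* Pack a 32-byte-aligned arena offset together with 43 bits of payload. */
static inline uint64_t pack(uint32_t offset, uint64_t payload)
{
    assert(offset < (1u << ARENA_BITS));
    assert((offset & ((1u << ALIGN_BITS) - 1)) == 0);   /* 32-byte aligned */
    assert(payload < ((uint64_t)1 << PAYLOAD_BITS));
    return (payload << INDEX_BITS) | (offset >> ALIGN_BITS);
}

static inline uint32_t unpack_offset(uint64_t word)
{
    return (uint32_t)(word & ((1u << INDEX_BITS) - 1)) << ALIGN_BITS;
}

static inline uint64_t unpack_payload(uint64_t word)
{
    return word >> INDEX_BITS;
}
```

To get a usable pointer back, add the unpacked offset to the base address of the arena.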

I guess ZGC is one example of this. It uses only 42 bits for addressing, which allows for 2^42 bytes = 4 × 2^40 bytes = 4 TB.

ZGC therefore just reserves 16TB of address space (but not actually uses all of this memory) starting at address 4TB.

A first look into ZGC

It uses the bits in the pointer like this:

     6                 4 4 4  4 4                                             0
     3                 7 6 5  2 1                                             0
    +-------------------+-+----+-----------------------------------------------+
    |00000000 00000000 0|0|1111|11 11111111 11111111 11111111 11111111 11111111|
    +-------------------+-+----+-----------------------------------------------+
    |                   | |    |
    |                   | |    * 41-0 Object Offset (42-bits, 4TB address space)
    |                   | |
    |                   | * 45-42 Metadata Bits (4-bits)  0001 = Marked0
    |                   |                                 0010 = Marked1
    |                   |                                 0100 = Remapped
    |                   |                                 1000 = Finalizable
    |                   |
    |                   * 46-46 Unused (1-bit, always zero)
    |
    * 63-47 Fixed (17-bits, always zero)
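
Reading the fields of that layout is a matter of shifts and masks. The sketch below only mirrors the diagram; it is not taken from the HotSpot sources, and the names are chosen here for illustration.

```c
#include <assert.h>
#include <stdint.h>

#define ZGC_OFFSET_BITS 42u
#define ZGC_OFFSET_MASK (((uint64_t)1 << ZGC_OFFSET_BITS) - 1)

/* Metadata bits 45..42, as listed in the diagram. */
enum {
    ZGC_MARKED0     = 0x1,
    ZGC_MARKED1     = 0x2,
    ZGC_REMAPPED    = 0x4,
    ZGC_FINALIZABLE = 0x8
};

/* Bits 41..0: object offset within the 4 TB heap. */
static inline uint64_t zgc_offset(uint64_t ref)
{
    return ref & ZGC_OFFSET_MASK;
}

/* Bits 45..42: the 4 metadata bits. */
static inline unsigned zgc_metadata(uint64_t ref)
{
    return (unsigned)((ref >> ZGC_OFFSET_BITS) & 0xF);
}
```

Under this layout, address 4 TB (`1 << 42`) is offset 0 with the Marked0 bit set, which is consistent with the reserved range starting at 4 TB.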

For more information on how to do that see

Side note: Using a linked list for cases with tiny key values compared to the pointers is a huge waste of memory, and it's also slower due to bad cache locality. In fact, you shouldn't use linked lists in most real-life problems.