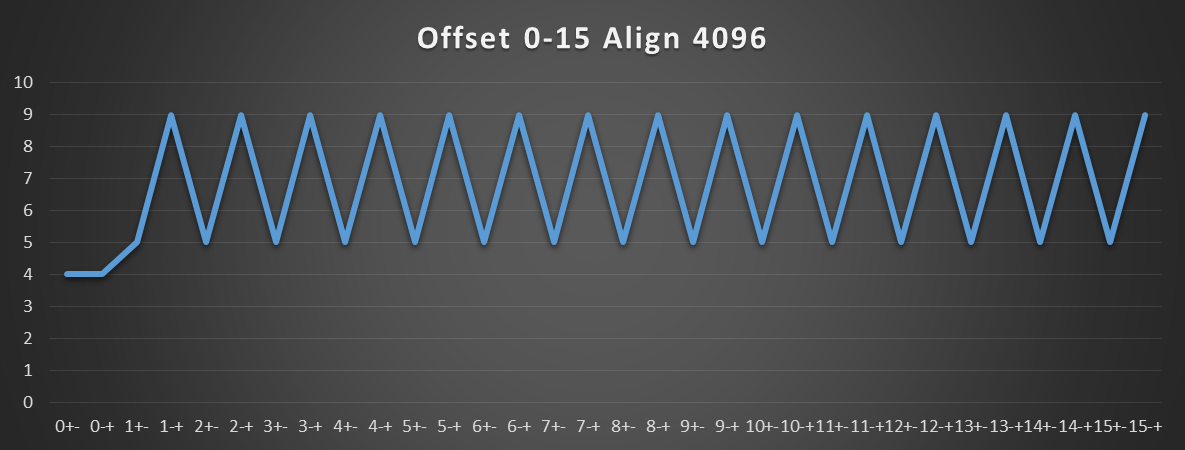

Optimization rule: in pointer-connected data structures like linked lists and trees, put the next or left/right pointers in the first 16 bytes of the object. malloc typically returns 16-byte aligned blocks (alignof(max_align_t)), so this ensures the linking pointers are in the same page as the start of the object.

Any other way of ensuring that important struct members are in the same page as the start of the object will also work.
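As a minimal sketch of that layout rule (assuming a typical 64-bit ABI with 8-byte pointers; `struct tree_node` and `in_first_16_bytes` are hypothetical names, not from any library), the link pointers go first and a compile-time check guards against someone reordering the members later:

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical tree node: link pointers first, bulky payload afterwards. */
struct tree_node {
    struct tree_node *left;   /* offset 0 */
    struct tree_node *right;  /* offset 8 with 8-byte pointers */
    int key;
    char payload[4096];       /* large: later bytes can land in the next page */
};

/* Returns 1 if the member occupying [offset, offset + size) lies entirely
 * inside the first 16 bytes of the object. */
int in_first_16_bytes(size_t offset, size_t size)
{
    return offset + size <= 16;
}

/* Compile-time guard: both links must end within the first 16 bytes. */
static_assert(offsetof(struct tree_node, left) + sizeof(struct tree_node *) <= 16,
              "left pointer must be in the first 16 bytes");
static_assert(offsetof(struct tree_node, right) + sizeof(struct tree_node *) <= 16,
              "right pointer must be in the first 16 bytes");
```

Because 4096 is a multiple of 16, the first 16 bytes of a 16-byte-aligned block never straddle a 4k boundary, which is why this layout is enough.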

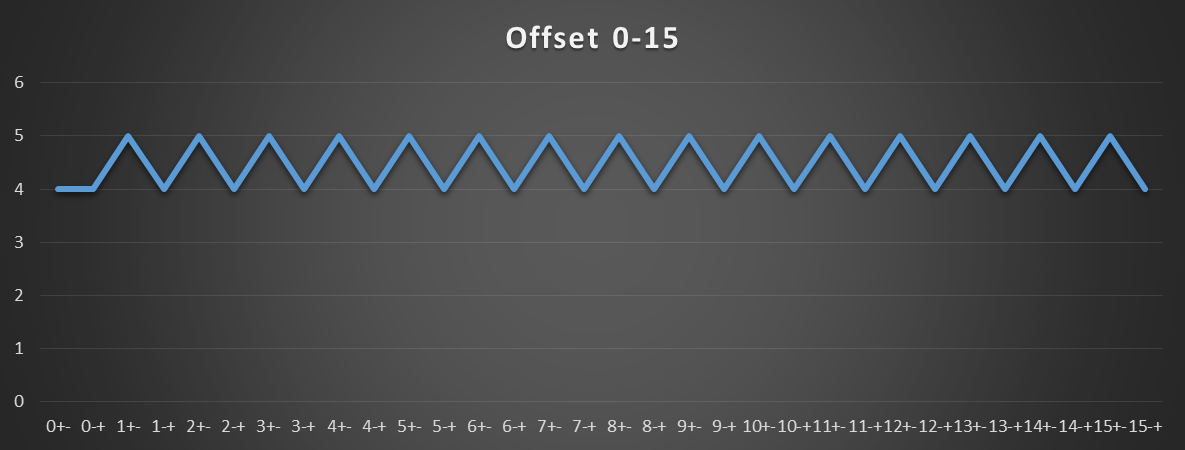

Sandybridge-family normally has 5 cycle L1d load-use latency, but there's a special case for pointer-chasing with small positive displacements with base+disp addressing modes.

Sandybridge-family has 4 cycle load-use latency for [reg + 0..2047] addressing modes, when the base reg is the result of a mov load, not an ALU instruction. But there's a penalty if reg+disp is in a different page than reg.

Based on these test results on Haswell and Skylake (and probably original SnB but we don't know), it appears that all of the following conditions must be true:

base reg comes from another load. (A rough heuristic for pointer-chasing, and usually means that load latency is probably part of a dep chain). If objects are usually allocated not crossing a page boundary, then this is a good heuristic. (The HW can apparently detect which execution unit the input is being forwarded from.)

Addressing mode is [reg] or [reg+disp8/disp32]. (Or an indexed load with an xor-zeroed index register! Usually not practically useful, but might provide some insight into the issue/rename stage transforming load uops.)

displacement < 2048. i.e. bit 11 and all higher bits are zero (a condition HW can check without a full integer adder/comparator.)

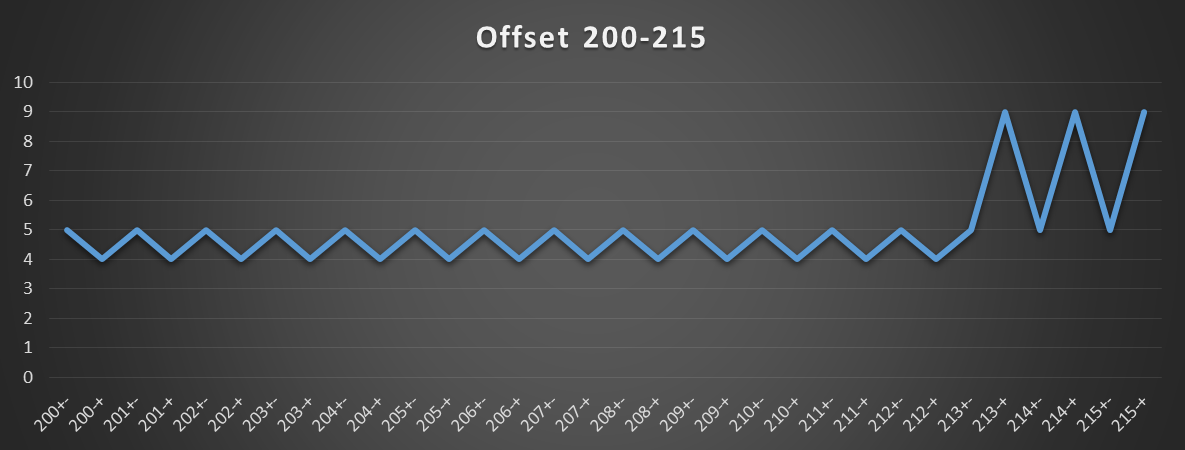

(Skylake but not Haswell/Broadwell): the last load wasn't a retried-fastpath. (So if base = result of a 4 or 5 cycle load, it will attempt the fast path. But if base = result of a 10 cycle retried load, it won't. The penalty on SKL seems to be 10, vs. 9 on HSW.)

I don't know if it's the last load attempted on that load port that matters, or if it's actually what happened to the load that produced that input. Perhaps experiments chasing two dep chains in parallel could shed some light; I've only tried one pointer chasing dep chain with a mix of page-changing and non-page-changing displacements.

If all those things are true, the load port speculates that the final effective address will be in the same page as the base register. This is a useful optimization in real cases when load-use latency forms a loop-carried dep chain, like for a linked list or binary tree.
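The conditions above can be written down as a small predicate. This is only a sketch of the measured behaviour, not a description of the actual hardware logic; `struct load_desc` and both function names are made up for illustration, and it only models non-negative displacements.

```c
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical description of one load, for this model only. */
struct load_desc {
    bool base_from_load;    /* base reg was produced by a mov load, not an ALU uop */
    bool has_index;         /* uses an index register (other than an xor-zeroed one) */
    uint32_t disp;          /* non-negative displacement; 0 for plain [reg] */
    bool base_was_retried;  /* the load producing the base was a retried fast-path */
};

/* disp < 2048 checked without a comparator: bits 11 and up must all be zero. */
bool disp_is_small(uint32_t disp)
{
    return (disp >> 11) == 0;
}

/* Does the load port speculate that base and base+disp share a page? */
bool attempts_fast_path(const struct load_desc *ld, bool is_skylake)
{
    if (!ld->base_from_load)
        return false;
    if (ld->has_index)
        return false;
    if (!disp_is_small(ld->disp))
        return false;
    if (is_skylake && ld->base_was_retried)  /* extra condition seen on SKL only */
        return false;
    return true;
}
```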

microarchitectural explanation (my best guess at explaining the result, not from anything Intel published):

It seems that indexing the L1dTLB is on the critical path for L1d load latency. Starting that 1 cycle early (without waiting for the output of an adder to calculate the final address) shaves a cycle off the full process of indexing L1d using the low 12 bits of the address, then comparing the 8 tags in that set against the high bits of the physical address produced by the TLB. (Intel's L1d is VIPT 8-way 32kiB, so it has no aliasing problems because the index bits all come from the low 12 bits of the address: the offset within a page which is the same in both the virtual and physical address. i.e. the low 12 bits translate for free from virt to phys.)
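The "translates for free" arithmetic can be checked with a few lines of C. This sketch assumes 64-byte cache lines and 4k pages along with the 32 KiB / 8-way geometry mentioned above; the function names are hypothetical.

```c
#include <stdint.h>

enum {
    L1D_BYTES  = 32 * 1024,
    L1D_WAYS   = 8,
    LINE_BYTES = 64,
    PAGE_BYTES = 4096
};

/* Number of sets = cache size / (ways * line size). */
unsigned l1d_sets(void)
{
    return L1D_BYTES / (L1D_WAYS * LINE_BYTES);
}

/* Bytes addressed by the line-offset bits plus the set-index bits.
 * If this does not exceed the page size, the index comes entirely
 * from the untranslated page-offset bits. */
unsigned l1d_index_span(void)
{
    return l1d_sets() * LINE_BYTES;
}

/* Set index of an address: address bits [11:6] for this geometry. */
unsigned l1d_set_index(uint64_t addr)
{
    return (unsigned)((addr / LINE_BYTES) % l1d_sets());
}
```

With these numbers there are 64 sets, so offset-within-line (6 bits) plus set index (6 bits) is exactly the 12-bit page offset.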

Since we don't find an effect for crossing 64-byte boundaries, we know the load port is adding the displacement before indexing the cache.

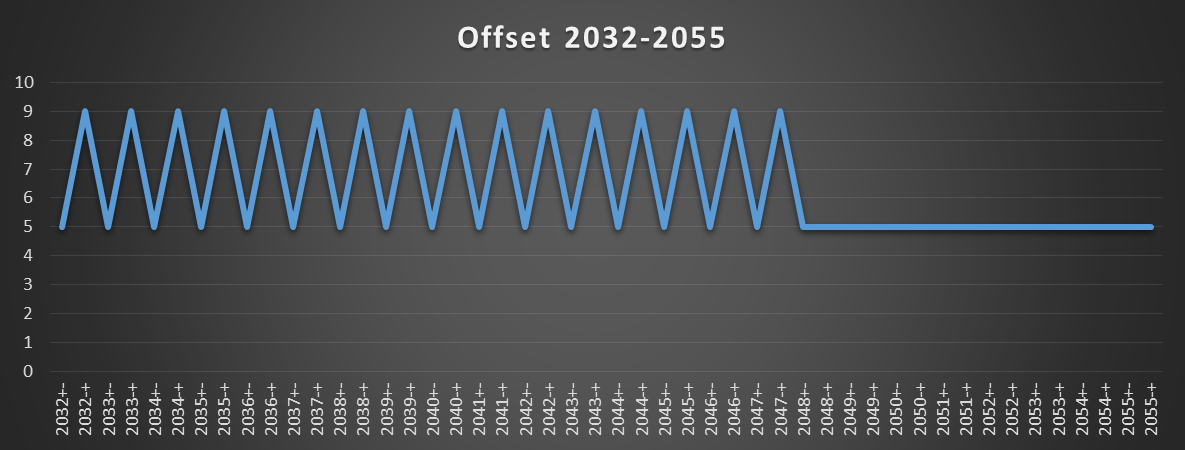

As Hadi suggests, it seems likely that if there's carry-out from bit 11, the load port lets the wrong-TLB load complete and then redoes it using the normal path. (On HSW, the total load latency = 9. On SKL the total load latency can be 7.5 or 10).
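For a small non-negative displacement, "carry-out from bit 11" is the same thing as base and base+disp being in different 4k pages. A minimal check (hypothetical helper name, assuming 4k pages):

```c
#include <stdbool.h>
#include <stdint.h>

/* True if adding disp to the low 12 bits of base carries out of bit 11,
 * i.e. base + disp lands in a different 4k page than base.
 * Intended for 0 <= disp < 2048, the range where the fast path is tried. */
bool carries_out_of_bit11(uint64_t base, uint32_t disp)
{
    return ((base & 0xFFF) + disp) > 0xFFF;
}
```

For a base 16 bytes before a page boundary, a displacement of 8 stays in the page while a displacement of 16 carries out.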

Aborting right away and retrying on the next cycle (to make it 5 or 6 cycles instead of 9) would in theory be possible, but remember that the load ports are pipelined with 1 per clock throughput. The scheduler is expecting to be able to send another uop to the load port in the next cycle, and Sandybridge-family standardizes latencies for everything of 5 cycles and shorter. (There are no 2-cycle instructions).

I didn't test if 2M hugepages help, but probably not. I think the TLB hardware is simple enough that it couldn't recognize that a 1-page-higher index would still pick the same entry. So it probably does the slow retry any time the displacement crosses a 4k boundary, even if that's in the same hugepage. (Page-split loads work this way: if the data actually crosses a 4k boundary (e.g. 8-byte load from page-4), you pay the page-split penalty not just the cache-line split penalty, regardless of hugepages)
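To make the page-split vs. cache-line-split distinction concrete, here is a small sketch (hypothetical helper, assuming 64-byte lines and 4k pages) that tests whether the bytes of a load straddle a given power-of-2 boundary:

```c
#include <stdbool.h>
#include <stdint.h>

/* True if the bytes [addr, addr + size) cross a multiple of `boundary`
 * (boundary = 64 for a cache-line split, 4096 for a page split). */
bool splits_boundary(uint64_t addr, unsigned size, unsigned boundary)
{
    return (addr % boundary) + size > boundary;
}
```

An 8-byte load starting 4 bytes before the end of a page is both a cache-line split and a page split; an 8-byte load at offset 60 within a page is only a cache-line split.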

Intel's optimization manual documents this special case in section 2.4.5.2 L1 DCache (in the Sandybridge section), but doesn't mention any different-page limitation, or the fact that it's only for pointer-chasing, and doesn't happen when there's an ALU instruction in the dep chain.

(Sandybridge) Table 2-21. Effect of Addressing Modes on Load Latency

| Data Type | Base + Offset > 2048; Base + Index [+ Offset] | Base + Offset < 2048 |
|---|---|---|
| Integer | 5 | 4 |
| MMX, SSE, 128-bit AVX | 6 | 5 |
| X87 | 7 | 6 |
| 256-bit AVX | 7 | 7 |

(remember, 256-bit loads on SnB take 2 cycles in the load port, unlike on HSW/SKL)

The text around this table also doesn't mention the limitations that exist on Haswell/Skylake, and may also exist on SnB (I don't know).

Maybe Sandybridge doesn't have those limitations and Intel didn't document the Haswell regression, or else Intel just didn't document the limitations in the first place. The table is pretty definite about that addressing mode always being 4c latency with offset = 0..2047.

@Harold's experiment of putting an ALU instruction as part of the load/use pointer-chasing dependency chain confirms that it's this effect that's causing the slowdown: an ALU insn decreased the total latency, effectively giving an instruction like and rdx, rdx negative incremental latency when added to the mov rdx, [rdx-8] dep chain in this specific page-crossing case.

Previous guesses in this answer included the suggestion that using the load result in an ALU vs. another load was what determined the latency. That would be super weird and require looking into the future. That was a wrong interpretation on my part of the effect of adding an ALU instruction into the loop. (I hadn't known about the 9-cycle effect on page crossing, and was thinking that the HW mechanism was a forwarding fast-path for the result inside the load port. That would make sense.)

We can prove that it's the source of the base reg input that matters, not the destination of the load result: Store the same address at 2 separate locations, before and after a page boundary. Create a dep chain of ALU => load => load, and check that it's the 2nd load that's vulnerable to this slowdown / able to benefit from the speedup with a simple addressing mode.

```nasm
%define off  16
    lea    rdi, [buf+4096 - 16]
    mov    [rdi], rdi
    mov    [rdi+off], rdi

    mov    ebp, 100000000
.loop:

    and    rdi, rdi
    mov    rdi, [rdi]        ; base comes from AND
    mov    rdi, [rdi+off]    ; base comes from a load

    dec    ebp
    jnz    .loop

    ... sys_exit_group(0)

section .bss
align 4096
buf:    resb 4096*2
```
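For readers who think in C, the memory layout that test builds looks roughly like this. It is only a sketch of the data structure (function names are hypothetical), corresponding to the loop without the `and`; a compiler is free to pick different instructions and addressing modes, so actual timing should still be done with hand-written asm.

```c
#include <stddef.h>
#include <stdlib.h>

enum { PAGE = 4096 };

/* Allocate two page-aligned pages and store the same self-pointer
 * 16 bytes before the page boundary and again at +off, mirroring
 *   lea rdi, [buf+4096-16] / mov [rdi], rdi / mov [rdi+off], rdi
 * Returns that pointer, or NULL on allocation failure. */
void *make_chase_buf(size_t off)
{
    unsigned char *buf = aligned_alloc(PAGE, 2 * PAGE);
    if (!buf)
        return NULL;
    unsigned char *p = buf + PAGE - 16;
    *(void **)p = p;
    *(void **)(p + off) = p;
    return p;
}

/* Pointer-chase: each load's address depends on the previous load's result.
 * volatile keeps the compiler from optimizing the loads away. */
void *chase(void *p, size_t off, long iters)
{
    while (iters-- > 0) {
        p = *(void *volatile *)p;                           /* mov rdi, [rdi]     */
        p = *(void *volatile *)((unsigned char *)p + off);  /* mov rdi, [rdi+off] */
    }
    return p;
}

/* Release a buffer returned by make_chase_buf. */
void free_chase_buf(void *p)
{
    free((unsigned char *)p - (PAGE - 16));
}
```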

Timed with Linux perf on SKL i7-6700k.

With off = 8, the speculation is correct and we get total latency = 10 cycles per iteration = 1 + 5 + 4.

With off = 16, the [rdi+off] load is slow, and we get 16 cycles / iter = 1 + 5 + 10. (The penalty seems to be higher on SKL than HSW)

With the load order reversed (doing the [rdi+off] load first), it's always 10c regardless of off=8 or off=16, so we've proved that mov rdi, [rdi+off] doesn't attempt the speculative fast-path if its input is from an ALU instruction.

Without the and, and off=8, we get the expected 8c per iter: both use the fast path. (@harold confirms HSW also gets 8 here).

Without the and, and off=16, we get 15c per iter: 5+10. The mov rdi, [rdi+16] attempts the fast path and fails, taking 10c. Then mov rdi, [rdi] doesn't attempt the fast-path because its input failed. (@harold's HSW takes 13 here: 4 + 9. So that confirms HSW does attempt the fast-path even if the last fast-path failed, and that the fast-path fail penalty really is only 9 on HSW vs. 10 on SKL)

It's unfortunate that SKL doesn't realize that [base] with no displacement can always safely use the fast path.

On SKL, with just mov rdi, [rdi+16] in the loop, the average latency is 7.5 cycles. Based on tests with other mixes, I think it alternates between 5c and 10c: after a 5c load that didn't attempt the fast path, the next one does attempt it and fails, taking 10c. That makes the next load use the safe 5c path.
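The rules inferred from these measurements are simple enough to put into a toy model and check against the numbers above. This is a sketch that just encodes the observed latencies (1c ALU, 5c normal load, 4c fast path, 9c/10c failed fast path on HSW/SKL); it is not a claim about how the hardware is built, and all names in it are hypothetical. Every load is assumed to use a simple [reg + small disp] addressing mode.

```c
#include <stdbool.h>
#include <stddef.h>

enum uarch { HSW, SKL };

/* One uop in the loop-carried dependency chain. */
struct step {
    bool is_alu;        /* true: 1-cycle ALU uop such as and rdi,rdi; false: a load */
    bool crosses_page;  /* loads only: base+disp is in a different 4k page than base */
};

/* Steady-state cycles per iteration of a loop whose body is one dep chain. */
double cycles_per_iter(enum uarch u, const struct step *loop, size_t n)
{
    const int warmup = 4, measured = 4;  /* even counts, so alternation averages out */
    bool prev_is_load = false, prev_retried = false;
    int total = 0;

    for (int it = 0; it < warmup + measured; it++) {
        for (size_t i = 0; i < n; i++) {
            int lat;
            bool retried = false;

            if (loop[i].is_alu) {
                lat = 1;
            } else {
                /* Fast path is tried only if the base came from a load,
                 * and on SKL only if that load was not itself a retry. */
                bool attempt = prev_is_load && !(u == SKL && prev_retried);
                if (!attempt) {
                    lat = 5;
                } else if (!loop[i].crosses_page) {
                    lat = 4;
                } else {
                    lat = (u == SKL) ? 10 : 9;
                    retried = true;
                }
            }
            prev_is_load = !loop[i].is_alu;
            prev_retried = retried;
            if (it >= warmup)
                total += lat;
        }
    }
    return (double)total / measured;
}
```

For example, a loop body of `{load, load that crosses a page}` gives 15 on SKL and 13 on HSW in this model, and a single page-crossing load gives 7.5 on SKL, matching the measurements quoted here.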

Adding a zeroed index register actually speeds it up in this case where we know the fast-path is always going to fail. So does using no base register, like [nosplit off + rdi*1], which NASM assembles to 48 8b 3c 3d 10 00 00 00 mov rdi,QWORD PTR [rdi*1+0x10]. Notice that this requires a disp32, so it's bad for code size.
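To see why that encoding needs a disp32, the ModRM and SIB bytes from the machine code above (3c and 3d) can be split into their fields. This is a small illustrative decoder with hypothetical names; it only handles the two bytes in question, not x86 decoding in general.

```c
#include <stdint.h>

struct modrm { unsigned mod, reg, rm; };
struct sib   { unsigned scale, index, base; };

/* Both bytes share the same 2:3:3 bit layout. */
struct modrm decode_modrm(uint8_t b)
{
    return (struct modrm){ b >> 6, (b >> 3) & 7, b & 7 };
}

struct sib decode_sib(uint8_t b)
{
    return (struct sib){ b >> 6, (b >> 3) & 7, b & 7 };
}

/* mod=00 with rm=100 means a SIB byte follows; SIB.base=101 with mod=00
 * means "no base register", and that form always carries a disp32. */
int sib_requires_disp32(struct modrm m, struct sib s)
{
    return m.mod == 0 && m.rm == 4 && s.base == 5;
}
```

Here 3c decodes to mod=00, reg=111 (rdi as destination), rm=100, and 3d decodes to scale=00 (*1), index=111 (rdi), base=101, so the 4-byte displacement 10 00 00 00 is mandatory even though the value fits in 8 bits.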

Also beware that indexed addressing modes for micro-fused memory operands are un-laminated in some cases, while base+disp modes aren't. But if you're using pure loads (like mov or vbroadcastss), there's nothing inherently wrong with an indexed addressing mode. Using an extra zeroed register isn't great, though.

On Ice Lake, this special 4 cycle fast path for pointer chasing loads is gone: GP register loads that hit in L1 now generally take 5 cycles, with no difference based on the presence of indexing or the size of the offset.
