Write-combining buffers have been a feature of Intel CPUs going back to at least the Pentium 4 and probably before. The basic idea is that these cache-line sized buffers collect writes to the same cache line so they can be handled as a unit. As an example of their implications for software performance, if you don't write the full cache line, you may experience reduced performance.
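To make "not writing the full cache line" concrete, here is a minimal sketch that computes how many bytes of each cache line a given write range touches. The 64-byte line size and the helper names `line_coverage` and `partial_lines` are assumptions for illustration only; nothing here measures real hardware.

```python
LINE_SIZE = 64  # assumed cache-line size in bytes


def line_coverage(addr, size, line_size=LINE_SIZE):
    """Return [(line_base, bytes_written)] for a write of `size` bytes at `addr`."""
    result = []
    end = addr + size
    base = addr - (addr % line_size)  # base address of the first line touched
    while base < end:
        lo = max(addr, base)
        hi = min(end, base + line_size)
        result.append((base, hi - lo))
        base += line_size
    return result


def partial_lines(addr, size, line_size=LINE_SIZE):
    """Return the base addresses of lines that the write only partly covers."""
    return [base for base, n in line_coverage(addr, size, line_size) if n < line_size]


if __name__ == "__main__":
    # An aligned 128-byte write covers two lines completely.
    print(line_coverage(0, 128))
    # The same write shifted by 48 bytes leaves partial lines at both ends.
    print(line_coverage(48, 128))
```

An unaligned buffer therefore always has partially written lines at its edges, which is the case where combining (or the lack of it) would matter.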

For example, in the Intel 64 and IA-32 Architectures Optimization Reference Manual, section "3.6.10 Write Combining" starts with the following description (emphasis added):

Write combining (WC) improves performance in two ways:

• On a write miss to the first-level cache, it allows multiple stores to the same cache line to occur before that cache line is read for ownership (RFO) from further out in the cache/memory hierarchy. Then the rest of line is read, and the bytes that have not been written are combined with the unmodified bytes in the returned line.

• Write combining allows multiple writes to be assembled and written further out in the cache hierarchy as a unit. This saves port and bus traffic. Saving traffic is particularly important for avoiding partial writes to uncached memory.

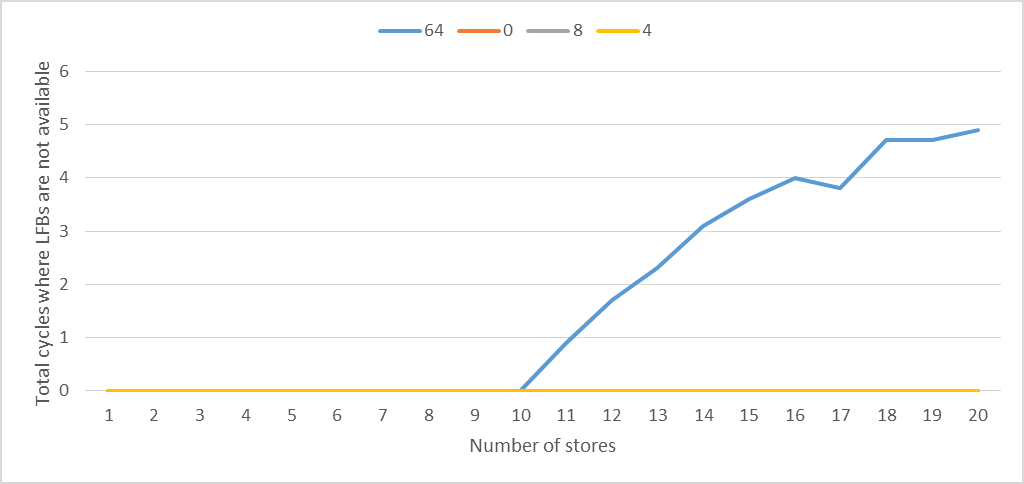

There are six write-combining buffers (on Pentium 4 and Intel Xeon processors with a CPUID signature of family encoding 15, model encoding 3; there are 8 write-combining buffers). Two of these buffers may be written out to higher cache levels and freed up for use on other write misses. Only four write-combining buffers are guaranteed to be available for simultaneous use. Write combining applies to memory type WC; it does not apply to memory type UC.

There are six write-combining buffers in each processor core in Intel Core Duo and Intel Core Solo processors. Processors based on Intel Core microarchitecture have eight write-combining buffers in each core. Starting with Intel microarchitecture code name Nehalem, there are 10 buffers available for write-combining.

Write combining buffers are used for stores of all memory types. They are particularly important for writes to uncached memory ...
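As a way to reason about the quoted description, here is a toy model of a small pool of line-sized combining buffers. It is only a sketch under loud assumptions: FIFO eviction, a 64-byte line, and flushing a buffer as soon as it is full are my guesses for illustration, not documented hardware behavior, and the names `simulate` and `interleaved_streams` are hypothetical.

```python
from collections import OrderedDict

LINE_SIZE = 64  # assumed cache-line size in bytes


def simulate(stores, num_buffers):
    """Count (full_line_flushes, partial_line_flushes) for a sequence of stores.

    `stores` yields (addr, size) pairs; each store must stay within one line.
    """
    buffers = OrderedDict()  # line base -> set of written byte offsets, oldest first
    full = partial = 0

    def flush(written):
        nonlocal full, partial
        if len(written) == LINE_SIZE:
            full += 1
        else:
            partial += 1

    for addr, size in stores:
        base = addr - addr % LINE_SIZE
        if base not in buffers:
            if len(buffers) == num_buffers:
                # Assumed policy: evict the oldest buffer, whatever it holds.
                _, written = buffers.popitem(last=False)
                flush(written)
            buffers[base] = set()
        offset = addr - base
        buffers[base].update(range(offset, offset + size))
        if len(buffers[base]) == LINE_SIZE:
            flush(buffers.pop(base))  # a complete line leaves as one unit

    for written in buffers.values():  # drain whatever is left at the end
        flush(written)
    return full, partial


def interleaved_streams(num_streams, lines_per_stream, store_size=8):
    """Round-robin `store_size`-byte stores across several output streams."""
    stride = lines_per_stream * LINE_SIZE
    for offset in range(0, stride, store_size):
        for s in range(num_streams):
            yield (s * stride + offset, store_size)


if __name__ == "__main__":
    print(simulate(interleaved_streams(4, 2), num_buffers=4))
    print(simulate(interleaved_streams(6, 2), num_buffers=4))
```

In this model, four interleaved streams with four buffers produce only full-line flushes, while six streams thrash the buffers and every flush is partial. Whether real hardware behaves anything like this for normal stores to WB memory is exactly what I'm asking.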

My question is whether write combining applies to WB memory regions (that's the "normal" memory you are using 99.99% of the time in user programs), when using normal stores (that's anything other than non-temporal stores, i.e., the stores you are using 99.99% of the time).

The text above is hard to interpret exactly, and it seems not to have been updated since the Core Duo era. You have the part that says write combining "applies to WC memory but not UC", but of course that leaves out all the other types, like WB. Later you have that "[WC is] particularly important for writes to uncached memory", seemingly contradicting the "doesn't apply to UC" part.

So are write combining buffers used on modern Intel chips for normal stores to WB memory?

x86 store buffer write coalescing. Ok, I found a comment on "Unexpectedly poor and weirdly bimodal performance for store loop on Intel Skylake" where you linked to Dr. Bandwidth's post: software.intel.com/en-us/forums/… – Regressive

movntdqa loads from WC memory do read from an LFB, so load ports are connected to LFBs somehow. (And normal loads may get their data straight from an LFB for early restart? Or do they replay the load uop itself, not just dependent uops, maybe to redo the TLB check?) So LFB snooping is plausible, but I think the main sticking point is having M without data. – Regressive