On Intel chips, the frequency impact and the specific frequency transition behavior depend on both the width of the operation and the specific instruction used.

As far as instruction-related frequency limits go, there are three frequency levels – so-called licenses – from fastest to slowest: L0, L1 and L2. L0 is the "nominal" speed you'll see written on the box: when the box says "3.5 GHz turbo", it is referring to the single-core L0 turbo. L1 is a lower speed sometimes called AVX turbo or AVX2 turbo<sup>5</sup>, originally associated with AVX and AVX2 instructions<sup>1</sup>. L2 is a lower speed than L1, sometimes called "AVX-512 turbo".

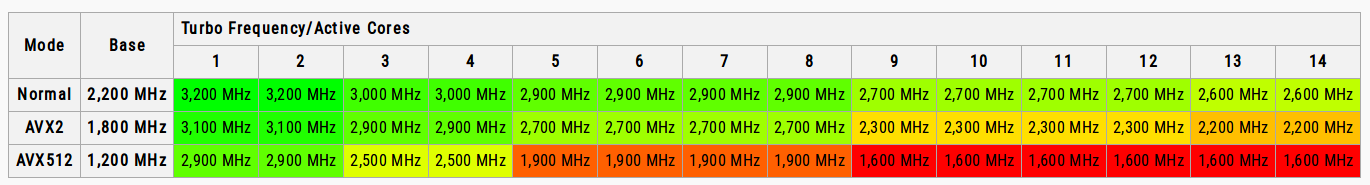

The exact speeds for each license also depend on the number of active cores. For up-to-date tables, you can usually consult WikiChip. For example, the table for the Xeon Gold 5120 is here:

![Xeon Gold 5120 Frequencies]()

The Normal, AVX2 and AVX512 rows correspond to the L0, L1 and L2 licenses respectively. Note that the relative slowdown for the L1 and L2 licenses generally gets worse as the number of active cores increases: for 1 or 2 active cores the L1 and L2 speeds are 97% and 91% of L0, but for 13 or 14 cores they are 85% and 62% respectively. This varies by chip, but the general trend is usually the same.
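To make the arithmetic behind those percentages concrete, here is a small sketch. The GHz values are an assumption on my part: I picked them to be consistent with the percentages quoted above, so treat them as illustrative and check the WikiChip table for your actual chip. The names `TURBO_GHZ` and `relative_speed` are made up for this example.

```python
# Illustrative per-license turbo frequencies in GHz, keyed by active-core count.
# ASSUMPTION: these values are chosen to match the percentages quoted in the
# text; consult the WikiChip table for the authoritative numbers.
TURBO_GHZ = {
    2: {"L0": 3.2, "L1": 3.1, "L2": 2.9},
    14: {"L0": 2.6, "L1": 2.2, "L2": 1.6},
}


def relative_speed(active_cores, license_name):
    """Return a license's turbo frequency as a fraction of the L0 turbo."""
    row = TURBO_GHZ[active_cores]
    return row[license_name] / row["L0"]


if __name__ == "__main__":
    for cores in sorted(TURBO_GHZ):
        l1 = relative_speed(cores, "L1")
        l2 = relative_speed(cores, "L2")
        print(f"{cores:2d} cores: L1 = {l1:.0%} of L0, L2 = {l2:.0%} of L0")
```

With these assumed inputs, the ratios round to the 97%/91% and 85%/62% figures mentioned above.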

Those preliminaries out of the way, let's get to what I think you are asking: which instructions cause which licenses to be activated?

Here's a table, showing the implied license for instructions based on their width and their categorization as light or heavy:

| Width   | Light | Heavy |
|---------|-------|-------|
| Scalar  | L0    | N/A   |
| 128-bit | L0    | L0    |
| 256-bit | L0    | L1*   |
| 512-bit | L1    | L2*   |

\*soft transition (see below)

So we immediately see that all scalar (non-SIMD) instructions and all 128-bit wide instructions<sup>2</sup> always run at full speed in the L0 license.

256-bit instructions will run in L0 or L1, depending on whether they are light or heavy, and 512-bit instructions will run in L1 or L2 on the same basis.
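If it helps, the table can be written down as a plain lookup. This is only a sketch of the table as stated in this answer, not anything the CPU exposes; `LICENSE_TABLE` and `implied_license` are names I made up, and the "hard"/"soft" labels follow the asterisk convention of the table.

```python
# The license table from this answer, keyed by (width, kind).
# Scalar instructions have no "heavy" entry (N/A in the table).
LICENSE_TABLE = {
    ("scalar", "light"): "L0",
    ("128", "light"): "L0",
    ("128", "heavy"): "L0",
    ("256", "light"): "L0",
    ("256", "heavy"): "L1",
    ("512", "light"): "L1",
    ("512", "heavy"): "L2",
}


def implied_license(width, kind):
    """Return (license, transition) for a width and a light/heavy kind.

    transition is None for L0, "soft" for the asterisked heavy entries,
    and "hard" for the 512-bit light entry.
    """
    try:
        license_name = LICENSE_TABLE[(width, kind)]
    except KeyError:
        raise ValueError(f"no table entry for {width!r}/{kind!r}") from None
    if license_name == "L0":
        transition = None
    elif kind == "heavy":
        transition = "soft"
    else:
        transition = "hard"
    return license_name, transition
```

For example, `implied_license("512", "light")` gives `("L1", "hard")`, while `implied_license("256", "heavy")` gives `("L1", "soft")`.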

So what is this light and heavy thing?

Light vs Heavy

It's easiest to start by explaining heavy instructions.

Heavy instructions are all SIMD instructions that need to run on the FP/FMA unit. Basically that's the majority of the FP instructions (those usually ending in ps or pd, like addpd), as well as integer multiplication instructions, which largely start with vpmul or vpmad, since SIMD integer multiplication actually runs on the FMA unit. vplzcnt(q|d) apparently also runs on the FMA unit, so it counts as heavy too.

Given that, light instructions are everything else. In particular, integer arithmetic other than multiplication, logical instructions, shuffles/blends (including FP) and SIMD load and store are light.
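As a rough illustration of that rule of thumb, here is a mnemonic-based heuristic. It is a sketch, not an authoritative classifier: the heavy prefixes and the ps/pd suffix rule come from the description above, while the list of light FP prefixes (moves, shuffles, blends, permutes, logical ops) is my own assumption about how to carve out the exceptions. `is_heavy` and both constants are hypothetical names.

```python
# Integer multiplies and vplzcnt, per the description above (leading "v" stripped).
HEAVY_PREFIXES = ("pmul", "pmad", "plzcnt")

# ASSUMPTION: prefixes of instructions that may end in ps/pd but are light
# (data movement, shuffles/blends/permutes, logical ops). Not exhaustive.
LIGHT_FP_PREFIXES = (
    "mov", "shuf", "blend", "perm", "unpck",
    "and", "or", "xor", "broadcast", "insert", "extract",
)


def is_heavy(mnemonic):
    """Guess whether a SIMD mnemonic is 'heavy' (runs on the FP/FMA unit)."""
    name = mnemonic.lower()
    if name.startswith("v"):
        name = name[1:]  # treat VEX/EVEX and legacy spellings alike
    if name.startswith(HEAVY_PREFIXES):
        return True
    if name.startswith(LIGHT_FP_PREFIXES):
        return False
    # Packed FP arithmetic usually ends in ps or pd (e.g. addpd, vfmadd231ps).
    return name.endswith(("ps", "pd"))
```

So `is_heavy("vaddpd")` and `is_heavy("vpmulld")` are true, while `is_heavy("vpaddd")`, `is_heavy("vshufps")` and `is_heavy("vmovaps")` are false. Remember that heaviness only matters together with the width: a heavy 128-bit instruction still runs in L0.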

Transitions

The L1 and L2 entries in the Heavy column are marked with an asterisk, like L1*. That's because these instructions cause a soft transition when they occur. The other L1 entry (for 512-bit light instructions) causes a hard transition. Here we'll discuss the two transition types.

Hard Transition

A hard transition occurs as soon as any instruction with the given license executes<sup>4</sup>. The CPU stops, takes some halt cycles and enters the new mode.

Soft Transition

Unlike a hard transition, a soft transition doesn't occur as soon as a single instruction executes. Rather, the instructions initially execute with reduced throughput (as slow as 1/4 their normal rate), without any change in frequency. If the CPU decides that "enough" heavy instructions are executing per unit time, and a specific threshold is reached, a transition to the higher-numbered license occurs.

That is, the CPU understands that if only a few heavy instructions arrive, or even if many arrive but they aren't dense when considering other non-heavy instructions, it may not be worth reducing the frequency.
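To picture the density idea, here is a toy model. It is purely illustrative: the real window and threshold are not something I know, so `window` and `threshold` are hypothetical parameters, and the sliding-window rule itself is an assumption standing in for whatever the hardware actually does.

```python
from collections import deque


def first_soft_transition(stream, window=8, threshold=0.5):
    """Toy model: return the index at which a soft transition would trigger.

    stream is an iterable of booleans (True = heavy instruction). The model
    requests the higher-numbered license once the fraction of heavy
    instructions among the last `window` instructions reaches `threshold`.
    Returns None if that never happens.
    """
    recent = deque(maxlen=window)  # sliding window of recent instructions
    for index, heavy in enumerate(stream):
        recent.append(bool(heavy))
        if len(recent) == window and sum(recent) / window >= threshold:
            return index
    return None


if __name__ == "__main__":
    sparse = ([True] + [False] * 7) * 4   # one heavy instruction in eight
    dense = [False] * 8 + [True] * 8      # a burst of heavy instructions
    print(first_soft_transition(sparse))  # never dense enough in this model
    print(first_soft_transition(dense))
```

In this model a sparse sprinkling of heavy instructions never triggers the transition, while a dense burst does after a few instructions, which is the qualitative behavior described above.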

Guidelines

Given the above, we can establish some reasonable guidelines. You never have to be scared of 128-bit instructions, since they never cause license-related<sup>3</sup> downclocking.

Furthermore, you never have to be worried about light 256-bit wide instructions either, since they also don't cause downclocking. If you aren't using a lot of vectorized FP math, you aren't likely to be using heavy instructions, so this would apply to you. Indeed, compilers already liberally insert 256-bit instructions when you use the appropriate -march option, especially for data movement and auto-vectorized loops.

Using heavy AVX/AVX2 instructions and light AVX-512 instructions is trickier, because you will run in the L1 license. If only a small part of your process (say 10%) can take advantage, it probably isn't worth slowing down the rest of your application. The penalties associated with L1 are generally moderate, but check the details for your chip.
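A back-of-the-envelope way to check whether it is worth it is an Amdahl-style estimate. This is a sketch under a pessimistic assumption of mine: the whole program runs at the reduced license frequency, not just the vectorized part. `net_speedup` and its parameters are hypothetical names, and the inputs in the usage note are made-up example values.

```python
def net_speedup(vector_fraction, vector_speedup, freq_ratio):
    """Estimate overall speedup from vectorizing part of a program.

    vector_fraction: share of the original runtime that gets vectorized (0..1)
    vector_speedup:  per-clock speedup of that part from wider vectors
    freq_ratio:      license frequency divided by L0 frequency (e.g. 0.85)

    ASSUMPTION: the entire program runs at the reduced frequency.
    A result below 1.0 means the program got slower overall.
    """
    if not 0.0 <= vector_fraction <= 1.0:
        raise ValueError("vector_fraction must be between 0 and 1")
    if vector_speedup <= 0 or freq_ratio <= 0:
        raise ValueError("vector_speedup and freq_ratio must be positive")
    # Runtime in cycles relative to the original program (Amdahl's law).
    relative_cycles = (1.0 - vector_fraction) + vector_fraction / vector_speedup
    # Fewer cycles, but each cycle takes longer at the lower frequency.
    return freq_ratio / relative_cycles
```

For instance, `net_speedup(0.10, 2.0, 0.85)` is about 0.89, i.e. a net slowdown, whereas `net_speedup(0.90, 2.0, 0.85)` is about 1.55. The 2x per-clock speedup and the 0.85 ratio are just example inputs.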

Using heavy AVX-512 instructions is even trickier, because the L2 license comes with serious frequency penalties on most chips. On the other hand, it is important to note that only FP and integer multiply instructions fall into the heavy category, so as a practical matter a lot of integer 512-bit wide use will only incur the L1 license.

<sup>1</sup> Although, as we'll see, this is a bit of a misnomer because AVX-512 instructions can set the speed to this license, and some AVX/2 instructions don't.

<sup>2</sup> 128-bit wide means using xmm registers, regardless of what instruction set they were introduced in - mainstream AVX-512 contains 128-bit variants for most/all new instructions.

<sup>3</sup> Note the weasel clause "license-related": you may certainly suffer other causes of downclocking, such as thermal, power or current limits, and it is possible that 128-bit instructions could trigger this, but I think it is fairly unlikely on a desktop or server system (low-power, small-form-factor devices are another matter).

<sup>4</sup> Evidently, we are talking only about transitions to a higher-numbered license, e.g., from L0 to L1 when a hard-transition L1 instruction executes. If you are already in L1 or L2, nothing happens: there is no transition if you are already in the same level, and you don't transition to lower-numbered levels based on any specific instruction, but rather by running for a certain time without any instructions of the higher-numbered level.

<sup>5</sup> Out of the two, "AVX2 turbo" is the more common name, which I never really understood, because 256-bit instructions are as much associated with AVX as with AVX2, and most of the heavy instructions which actually trigger AVX turbo (the L1 license) are FP instructions in AVX, not AVX2. The only exception is AVX2 integer multiplies.

`instructions to avoid` in order to accomplish what exactly? – Verlinevermeer

`vzeroupper` at the start of your program. – Dolf

`-mprefer-vector-width=256` option. I don't know if it prevents gcc from ever producing AVX-512 instructions (outside of direct intrinsic use), but it is certainly possible. I am not aware of any option which distinguishes between "heavy" and "light" instructions however. Usually this isn't a problem, since if you turn off AVX-512 and don't have a bunch of FP ops, you are probably targeting L0 anyways, and AVX-512 light is still L1. – Dolf

`-march=skylake-avx512 -mtune=skylake-avx512 -mprefer-vector-width=128`, and then I disassembled it `objdump -d my binary > binary.asm`, and then `grep -i ymm binary.asm`. I guess it is safe to conclude that it doesn't use any 256 and 512 bit registers and so no AVX-256 and AVX-512 instructions are emitted? @Dolf Tho, I still see many `vzeroupper` instructions. I thought it was only used with ymm registers. No? – Anoxia