Modern x86 CPUs break down the incoming instruction stream into micro-operations (uops¹) and then schedule these uops out-of-order as their inputs become ready. While the basic idea is clear, I'd like to know the specific details of how ready instructions are scheduled, since it impacts micro-optimization decisions.

For example, take the following toy loop²:

```asm
top:
    lea    eax, [ecx + 5]
    popcnt eax, eax
    add    edi, eax
    dec    ecx
    jnz    top
```

This basically implements the following loop (with the correspondence total -> edi, c -> ecx, and eax holding the popcnt temporary):

```c
do {
    total += popcnt(c + 5);
} while (--c > 0);
```
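The correspondence can be made concrete with a small Python model of the assembly. It is a sketch that assumes 32-bit wrap-around on every register write, and `toy_loop` is a made-up name:

```python
def toy_loop(c: int, total: int = 0) -> int:
    """Mirror of the asm loop: ecx holds c, edi holds total, eax is a temporary."""
    mask = 0xFFFF_FFFF                     # 32-bit registers wrap around
    ecx, edi = c & mask, total & mask
    while True:
        eax = (ecx + 5) & mask             # lea eax, [ecx + 5]
        eax = bin(eax).count("1")          # popcnt eax, eax
        edi = (edi + eax) & mask           # add edi, eax
        ecx = (ecx - 1) & mask             # dec ecx
        if ecx == 0:                       # jnz top: fall through once ecx reaches zero
            return edi

print(toy_loop(3))   # popcnt(8) + popcnt(7) + popcnt(6) = 1 + 3 + 2 = 6
```

Because jnz tests for zero, the C condition that matches it exactly is `--c != 0`. It agrees with `--c > 0` for any positive starting c. A starting value of 0 would wrap around and run 2^32 iterations in the asm version.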

I'm familiar with the process of optimizing any small loop by looking at the uop breakdown, dependency chain latencies, and so on. In the loop above the only loop-carried dependency chains are two short ones: dec ecx, and the add that accumulates into edi. The lea and popcnt are part of a dependency chain that starts fresh each iteration and feeds the add.

The final dec and jnz macro-fuse. So we have a total of 4 fused-domain uops, and no loop-carried dependency chain longer than 1 cycle. So based on those criteria, it seems that the loop can execute at 1 cycle/iteration.
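The front-end and latency argument amounts to taking the larger of two simple bounds. This sketch assumes a 4-wide issue stage, as on Haswell and Skylake. It ignores execution ports entirely, which is the gap the port-pressure discussion fills. `loop_lower_bound` is a hypothetical helper:

```python
from fractions import Fraction

def loop_lower_bound(fused_uops: int, issue_width: int, loop_carried_latency: int) -> Fraction:
    """Best-case cycles/iteration from front-end width and the loop-carried chain only."""
    front_end = Fraction(fused_uops, issue_width)   # uops the issue stage must push per iteration
    return max(front_end, Fraction(loop_carried_latency))

# lea, popcnt, add and the macro-fused dec/jnz: 4 fused-domain uops;
# the longest loop-carried chain has a latency of 1 cycle.
print(loop_lower_bound(fused_uops=4, issue_width=4, loop_carried_latency=1))   # 1
```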

However, we should look at the port pressure too:

- The lea can execute on ports 1 and 5
- The popcnt can execute on port 1
- The add can execute on ports 0, 1, 5 and 6
- The predicted-taken jnz executes on port 6

So to get to 1 cycle/iteration, you pretty much need the following to happen:

- The popcnt must execute on port 1 (the only port it can execute on)
- The lea must execute on port 5 (and never on port 1)
- The add must execute on port 0, and never on any of the other three ports it can execute on
- The jnz can only execute on port 6 anyway
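The claim that only one port assignment reaches 1 cycle/iteration can be checked by brute force over every allowed choice. This sketch assumes each uop occupies its port for one cycle and that an iteration is limited by its busiest port. `best_assignments` is a hypothetical helper:

```python
from itertools import product

# Port sets from the list above (Haswell); the fused dec/jnz is predicted taken, so p6 only.
UOPS = {
    "lea": (1, 5),
    "popcnt": (1,),
    "add": (0, 1, 5, 6),
    "dec/jnz": (6,),
}

def best_assignments(uops):
    """Enumerate every port choice and keep those with the smallest max per-port load."""
    names = list(uops)
    best, winners = None, []
    for choice in product(*(uops[n] for n in names)):
        load = {}
        for port in choice:
            load[port] = load.get(port, 0) + 1
        worst = max(load.values())
        if best is None or worst < best:
            best, winners = worst, [dict(zip(names, choice))]
        elif worst == best:
            winners.append(dict(zip(names, choice)))
    return best, winners

print(best_assignments(UOPS))
# (1, [{'lea': 5, 'popcnt': 1, 'add': 0, 'dec/jnz': 6}])
```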

That's a lot of conditions! If instructions just got scheduled randomly, you could get a much worse throughput. For example, 75% of the adds would go to port 1, 5 or 6, each time delaying the popcnt, lea or jnz by one cycle. The same goes for the lea, which can go to 2 ports, one of them shared with popcnt.
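To put a rough number on "much worse", suppose each uop independently picked one of its allowed ports uniformly at random. The expected uops per iteration on each port follow directly, and the busiest port bounds the throughput. This models random choice only, not any real scheduler:

```python
from fractions import Fraction

UOPS = {"lea": (1, 5), "popcnt": (1,), "add": (0, 1, 5, 6), "dec/jnz": (6,)}

def expected_port_load(uops):
    """Average uops per iteration each port receives if every uop picks uniformly at random."""
    load = {}
    for ports in uops.values():
        share = Fraction(1, len(ports))
        for p in ports:
            load[p] = load.get(p, Fraction(0)) + share
    return dict(sorted(load.items()))

loads = expected_port_load(UOPS)
print({p: str(v) for p, v in loads.items()})   # {0: '1/4', 1: '7/4', 5: '3/4', 6: '5/4'}
print(max(loads.values()))                     # 7/4: no better than 1.75 cycles/iteration
```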

IACA, on the other hand, reports a result very close to optimal, 1.05 cycles per iteration:

```
Intel(R) Architecture Code Analyzer Version - 2.1
Analyzed File - l.o
Binary Format - 64Bit
Architecture  - HSW
Analysis Type - Throughput

Throughput Analysis Report
--------------------------
Block Throughput: 1.05 Cycles       Throughput Bottleneck: FrontEnd, Port0, Port1, Port5

Port Binding In Cycles Per Iteration:
---------------------------------------------------------------------------------------
|  Port  |  0   -  DV  |  1   |  2   -  D   |  3   -  D   |  4   |  5   |  6   |  7   |
---------------------------------------------------------------------------------------
| Cycles | 1.0    0.0  | 1.0  | 0.0    0.0  | 0.0    0.0  | 0.0  | 1.0  | 0.9  | 0.0  |
---------------------------------------------------------------------------------------

N - port number or number of cycles resource conflict caused delay, DV - Divider pipe (on port 0)
D - Data fetch pipe (on ports 2 and 3), CP - on a critical path
F - Macro Fusion with the previous instruction occurred
* - instruction micro-ops not bound to a port
^ - Micro Fusion happened
# - ESP Tracking sync uop was issued
@ - SSE instruction followed an AVX256 instruction, dozens of cycles penalty is expected
! - instruction not supported, was not accounted in Analysis

| Num Of |                    Ports pressure in cycles                     |    |
|  Uops  |  0  - DV  |  1  |  2  -  D  |  3  -  D  |  4  |  5  |  6  |  7  |    |
---------------------------------------------------------------------------------
|   1    |           |     |           |           |     | 1.0 |     |     | CP | lea eax, ptr [ecx+0x5]
|   1    |           | 1.0 |           |           |     |     |     |     | CP | popcnt eax, eax
|   1    | 0.1       |     |           |           |     | 0.1 | 0.9 |     | CP | add edi, eax
|   1    | 0.9       |     |           |           |     |     | 0.1 |     | CP | dec ecx
|   0F   |           |     |           |           |     |     |     |     |    | jnz 0xfffffffffffffff4
```

It pretty much reflects the necessary "ideal" scheduling I mentioned above, with a small deviation: it shows the add stealing port 5 from the lea on 1 out of 10 cycles. It also doesn't know that the fused branch is going to go to port 6 since it is predicted taken, so it puts most of the uops for the branch on port 0, and most of the uops for the add on port 6, rather than the other way around.

It's not clear if the extra 0.05 cycles that IACA reports over the optimal is the result of some deep, accurate analysis, or a less insightful consequence of the algorithm it uses, e.g., analyzing the loop over a fixed number of cycles, or just a bug or whatever. The same goes for the 0.1 fraction of a uop that it thinks will go to the non-ideal port. It is also not clear if one explains the other - I would think that mis-assigning a port 1 out of 10 times would cause a cycle count of 11/10 = 1.1 cycles per iteration, but I haven't worked out the actual downstream results - maybe the impact is less on average. Or it could just be rounding (0.05 == 0.1 to 1 decimal place).
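The arithmetic behind the two candidate explanations is quick to check. The penalty model below assumes every misassignment costs exactly one extra cycle. That is the pessimistic assumption from the paragraph above, not a description of what IACA computes:

```python
from decimal import Decimal, ROUND_HALF_UP
from fractions import Fraction

def cycles_per_iter(misassign_every: int, penalty_cycles: int = 1) -> Fraction:
    """Pessimistic model: one port conflict per `misassign_every` iterations, each costing a full cycle."""
    return 1 + Fraction(penalty_cycles, misassign_every)

print(cycles_per_iter(10))   # 11/10, i.e. 1.1 cycles/iteration
# The 0.05 extra cycles, rounded half-up to one decimal place, also displays as 0.1.
print(Decimal("0.05").quantize(Decimal("0.1"), rounding=ROUND_HALF_UP))   # 0.1
```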

So how do modern x86 CPUs actually schedule? In particular:

- When multiple uops are ready in the reservation station, in what order are they scheduled to ports?

- When a uop can go to multiple ports (like the add and lea in the example above), how is it decided which port is chosen?
- If any of the answers involve a concept like "oldest" to choose among uops, how is it defined? Age since it was delivered to the RS? Age since it became ready? How are ties broken? Does program order ever come into it?

Results on Skylake

Let's measure some actual results on Skylake to check which answers explain the experimental evidence. Here are some real-world results measured with perf on my Skylake box. Confusingly, I'm going to switch to using imul as my "only executes on one port" instruction, since it has many variants, including 3-operand versions that allow you to use different registers for the source(s) and destination. This is very handy when trying to construct dependency chains. It also avoids the false dependency on the destination register that popcnt has.

Independent Instructions

Let's start by looking at the simple (?) case where the instructions are relatively independent, without any dependency chains other than trivial ones like the loop counter.

Here's a 4 uop loop (only 3 executed uops) with mild pressure. All instructions are independent (don't share any sources or destinations). The add could in principle steal the p1 needed by the imul or p6 needed by the dec:

Example 1

| instr | p0 | p1 | p5 | p6 |
|-------|----|----|----|----|
| xor (elim) | | | | |
| imul | | X | | |
| add | X | X | X | X |
| dec | | | | X |

```asm
top:
    xor  r9, r9
    add  r8, rdx
    imul rax, rbx, 5
    dec  esi
    jnz  top
```

The result is that this executes with perfect scheduling at 1.00 cycles/iteration:

```
560,709,974 uops_dispatched_port_port_0 ( +- 0.38% )
1,000,026,608 uops_dispatched_port_port_1 ( +- 0.00% )
439,324,609 uops_dispatched_port_port_5 ( +- 0.49% )
1,000,041,224 uops_dispatched_port_port_6 ( +- 0.00% )
5,000,000,110 instructions:u # 5.00 insns per cycle ( +- 0.00% )
1,000,281,902 cycles:u ( +- 0.00% )
```

As expected, p1 and p6 are fully utilized by the imul and dec/jnz respectively, and then the add issues roughly half and half between the remaining available ports. Note "roughly": the actual ratio is 56% and 44%, and this ratio is pretty stable across runs (note the +- 0.49% variation). If I adjust the loop alignment, the split changes (53/46 for 32B alignment, more like 57/42 for 32B+4 alignment). Now, if we change nothing except the position of imul in the loop:
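The percentages quoted here follow directly from the raw counters. Here is a small sketch that parses the perf lines shown for Example 1 and normalizes them per iteration. The regex only assumes the line shape used in this post, and `port_counts` is a hypothetical helper:

```python
import re

# Counter lines copied from the Example 1 run above.
EXAMPLE_1 = """\
560,709,974 uops_dispatched_port_port_0 ( +- 0.38% )
1,000,026,608 uops_dispatched_port_port_1 ( +- 0.00% )
439,324,609 uops_dispatched_port_port_5 ( +- 0.49% )
1,000,041,224 uops_dispatched_port_port_6 ( +- 0.00% )
"""

def port_counts(text: str) -> dict:
    """Map port number -> dispatched uop count from perf-stat-style lines."""
    pattern = re.compile(r"^([\d,]+)\s+uops_dispatched_port_port_(\d)", re.MULTILINE)
    return {int(port): int(count.replace(",", "")) for count, port in pattern.findall(text)}

counts = port_counts(EXAMPLE_1)
iterations = 1_000_000_000          # 5e9 instructions / 5 instructions per iteration
per_iter = {p: c / iterations for p, c in counts.items()}
add_share_p0 = counts[0] / (counts[0] + counts[5])   # the add is the only uop on p0/p5 here
print({p: round(v, 2) for p, v in per_iter.items()})  # {0: 0.56, 1: 1.0, 5: 0.44, 6: 1.0}
print(f"add on p0: {add_share_p0:.1%}")                # add on p0: 56.1%
```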

Example 2

```asm
top:
    imul rax, rbx, 5
    xor  r9, r9
    add  r8, rdx
    dec  esi
    jnz  top
```

Then suddenly the p0/p5 split is exactly 50%/50%, with 0.00% variation:

```
500,025,758 uops_dispatched_port_port_0 ( +- 0.00% )
1,000,044,901 uops_dispatched_port_port_1 ( +- 0.00% )
500,038,070 uops_dispatched_port_port_5 ( +- 0.00% )
1,000,066,733 uops_dispatched_port_port_6 ( +- 0.00% )
5,000,000,439 instructions:u # 5.00 insns per cycle ( +- 0.00% )
1,000,439,396 cycles:u ( +- 0.01% )
```

So that's already interesting, but it's hard to tell what's going on. Perhaps the exact behavior depends on the initial conditions at loop entry and is sensitive to ordering within the loop (e.g. because counters are used). This example shows that something more than "random" or "stupid" scheduling is going on. In particular, if you just eliminate the imul instruction from the loop, you get the following:

Example 3

```
330,214,329 uops_dispatched_port_port_0 ( +- 0.40% )
314,012,342 uops_dispatched_port_port_1 ( +- 1.77% )
355,817,739 uops_dispatched_port_port_5 ( +- 1.21% )
1,000,034,653 uops_dispatched_port_port_6 ( +- 0.00% )
4,000,000,160 instructions:u # 4.00 insns per cycle ( +- 0.00% )
1,000,235,522 cycles:u ( +- 0.00% )
```

Here, the add is now roughly evenly distributed among p0, p1 and p5 - so the presence of the imul did affect the add scheduling: it wasn't just a consequence of some "avoid port 1" rule.
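For comparison with Example 1, the add's split in Example 3 can be computed the same way. The eliminated xor uses no port and the fused dec/jnz occupies p6. So the uops counted on p0, p1 and p5 are taken to be the add, an assumption consistent with their sum of about 10^9:

```python
# Example 3 counters (no imul in the loop), copied from the run above.
EXAMPLE_3 = {0: 330_214_329, 1: 314_012_342, 5: 355_817_739}

total = sum(EXAMPLE_3.values())
shares = {p: c / total for p, c in EXAMPLE_3.items()}
print(total)                                          # 1000044410
print({p: f"{s:.0%}" for p, s in shares.items()})     # {0: '33%', 1: '31%', 5: '36%'}
```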

Note here that total port pressure is only 3 uops/cycle since the xor is a zeroing idiom and is eliminated in the renamer. Let's try with the max pressure of 4 uops. I expect whatever mechanism kicked in above to be able to perfectly schedule this also. We only change xor r9, r9 to xor r9, r10, so it is no longer a zeroing idiom. We get the following results:
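With four non-eliminated uops on four ALU ports, a 1.00 cycle/iteration schedule still exists, but only if xor and add take p0 and p5 between them. Here is a quick enumeration under the simplification that each uop needs its own port for one cycle:

```python
from itertools import product

# Example 4 body with xor r9, r10 (no longer eliminated): four uops per iteration.
UOPS = {"xor": (0, 1, 5, 6), "add": (0, 1, 5, 6), "imul": (1,), "dec/jnz": (6,)}

perfect = [
    dict(zip(UOPS, choice))
    for choice in product(*UOPS.values())
    if len(set(choice)) == len(choice)      # every uop on its own port -> 1 cycle/iteration
]
print(perfect)
# [{'xor': 0, 'add': 5, 'imul': 1, 'dec/jnz': 6}, {'xor': 5, 'add': 0, 'imul': 1, 'dec/jnz': 6}]
```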

Example 4

```asm
top:
    xor  r9, r10
    add  r8, rdx
    imul rax, rbx, 5
    dec  esi
    jnz  top
```

```
488,245,238 uops_dispatched_port_port_0 ( +- 0.50% )
1,241,118,197 uops_dispatched_port_port_1 ( +- 0.03% )
1,027,345,180 uops_dispatched_port_port_5 ( +- 0.28% )
1,243,743,312 uops_dispatched_port_port_6 ( +- 0.04% )
5,000,000,711 instructions:u # 2.66 insns per cycle ( +- 0.00% )
1,880,606,080 cycles:u ( +- 0.08% )
```
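The counters in Example 4 are totals over roughly 10^9 iterations that took about 1.88 × 10^9 cycles, so per-iteration and per-cycle figures differ. Here is a sketch that normalizes them both ways. It assumes 5 instructions per iteration and that each port dispatches at most one uop per cycle:

```python
# Example 4 counters and cycle count, copied from the run above.
COUNTS = {0: 488_245_238, 1: 1_241_118_197, 5: 1_027_345_180, 6: 1_243_743_312}
CYCLES = 1_880_606_080
ITERATIONS = 5_000_000_711 // 5        # 5 instructions per iteration

def utilization(counts, cycles, iterations):
    """Return port -> (uops per iteration, fraction of cycles with a uop dispatched)."""
    return {p: (n / iterations, n / cycles) for p, n in counts.items()}

for port, (per_iter, busy) in utilization(COUNTS, CYCLES, ITERATIONS).items():
    print(f"p{port}: {per_iter:.2f} uops/iteration, busy {busy:.0%} of cycles")
print(f"{CYCLES / ITERATIONS:.2f} cycles/iteration")
```

For p0 this gives about 0.49 uops per iteration but only about 26% of cycles busy, while p1 and p6 each carry about 1.24 uops per iteration.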

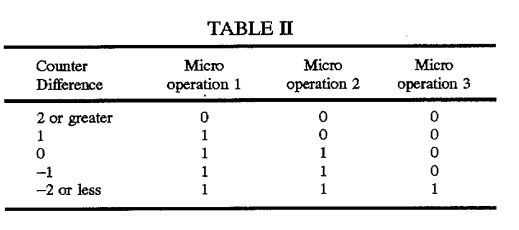

Oops! Rather than evenly scheduling everything across p0156, the scheduler has underused p0 (it executes only ~0.49 uops per iteration, so it is busy in only about 26% of cycles). As a result, p1 and p6 are oversubscribed: on top of their required imul and dec/jnz uops, they also pick up some of the xor and add uops. This behavior, I think, is consistent with a counter-based pressure indicator, as hayesti indicated in their answer. It is also consistent with uops being assigned to a port at issue time, not at execution time, as both hayesti and Peter Cordes mentioned.

That behavior³ makes the "execute the oldest ready uops" rule much less effective. If uops weren't bound to execution ports at issue but rather at execution, then this "oldest" rule would fix the problem above after one iteration. Once one imul and one dec/jnz got held back for a single iteration, they would always be older than the competing xor and add instructions, so they should always get scheduled first. One thing I am learning, though, is that if ports are assigned at issue time, this rule doesn't help, because the ports are already fixed by then. I guess it still helps a bit in favoring instructions that are part of long dependency chains (since these will tend to fall behind), but it's not the cure-all I thought it was.
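The argument that an "oldest ready first" rule would self-correct if ports were chosen at dispatch can be illustrated with a toy model of exactly that idealized scheduler. This is a hypothetical sketch, not a model of Skylake. It assumes every uop is ready as soon as it issues (no dependencies), an unbounded RS, 4 uops issued per cycle, and that each uop takes the lowest-numbered free port it may use:

```python
PORTS = (0, 1, 5, 6)
# Example 4 body as fused-domain uops; the predicted-taken dec/jnz can only use p6.
BODY = [("xor", {0, 1, 5, 6}), ("add", {0, 1, 5, 6}), ("imul", {1}), ("dec/jnz", {6})]

def simulate_execute_time_binding(cycles: int, issue_width: int = 4):
    """Toy scheduler: ports are picked at dispatch time, oldest waiting uop first."""
    rs = []                              # waiting uops, kept in age order (oldest first)
    dispatched = {p: 0 for p in PORTS}
    issued = 0
    for _ in range(cycles):
        for _ in range(issue_width):     # issue one full loop body per cycle
            rs.append(BODY[issued % len(BODY)])
            issued += 1
        free = set(PORTS)
        waiting = []
        for name, allowed in rs:         # oldest uop gets first pick of the free ports
            port = next((p for p in PORTS if p in free and p in allowed), None)
            if port is None:
                waiting.append((name, allowed))
            else:
                free.remove(port)
                dispatched[port] += 1
        rs = waiting
    return dispatched, [name for name, _ in rs]

dispatched, backlog = simulate_execute_time_binding(1000)
print(dispatched, backlog)   # {0: 1000, 1: 1000, 5: 999, 6: 1000} ['imul']
```

After the first cycle a single imul stays one iteration behind. From then on every port dispatches one uop per cycle, i.e. 1.00 cycles/iteration, in line with the reasoning above.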

That also seems to explain the results above: p0 appears to be under more pressure than it really is, because the dec/jnz combo can in theory execute on p06. In fact, because the branch is predicted taken, it only ever goes to p6. Perhaps that information can't feed into the pressure-balancing algorithm, so the counters tend to see equal pressure on p016, and the add and the xor get spread around differently than optimal.
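This explanation can be illustrated with a deliberately crude model of issue-time binding. Everything about the counters here is an assumption chosen for illustration: they are cumulative, the lowest counter wins, ties go to the lowest port, and the branch is charged half to p0 and half to p6. It is not Intel's documented algorithm, and it is not expected to reproduce the measured numbers:

```python
from fractions import Fraction

PORTS = (0, 1, 5, 6)

def simulate_issue_time_binding(iterations, branch_counted_on=(0, 6)):
    """Hypothetical issue-time port binding driven by pressure counters.

    Assumptions made only for illustration: counters are cumulative (never
    decremented on dispatch), each flexible uop goes to the port with the lowest
    counter (ties to the lowest port number), and the fused dec/jnz is charged in
    equal parts to the ports in `branch_counted_on`, although it always runs on p6.
    """
    pressure = {p: Fraction(0) for p in PORTS}   # what the allocator believes
    actual = {p: 0 for p in PORTS}               # where the uops really execute
    for _ in range(iterations):
        for _uop in ("xor", "add"):              # the two p0156 uops, in program order
            port = min(PORTS, key=lambda p: (pressure[p], p))
            pressure[port] += 1
            actual[port] += 1
        pressure[1] += 1                         # imul: p1 only
        actual[1] += 1
        for p in branch_counted_on:              # dec/jnz as seen by the allocator...
            pressure[p] += Fraction(1, len(branch_counted_on))
        actual[6] += 1                           # ...but predicted taken, so it runs on p6
    return {p: n / iterations for p, n in actual.items()}

print(simulate_issue_time_binding(1000))                          # branch charged to p0 and p6
print(simulate_issue_time_binding(1000, branch_counted_on=(6,)))  # branch charged to p6 only
```

With the branch charged half to p0 and half to p6, this model settles at about 0.5 uops/iteration on p0 and 1.5 on p6, so p6 limits it to roughly 1.5 cycles/iteration. Charging the branch to p6 alone gives about 1.0 on every port. The qualitative shape (p0 starved, p6 overloaded) resembles the counters, but the model reproduces neither the measured p1 overload nor the 1.88 cycles/iteration.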

We can probably test this by unrolling the loop a bit so the jnz is less of a factor...

¹ OK, it is properly written μops, but that kills searchability, and to actually type the "μ" character I usually resort to copy-pasting it from a webpage.

² I had originally used imul instead of popcnt in the loop, but, unbelievably, IACA doesn't support it!

³ Please note that I'm not suggesting this is a poor design or anything; there are probably very good hardware reasons why the scheduler cannot easily make all its decisions at execution time.
