I have a question that I have been struggling with for days now.

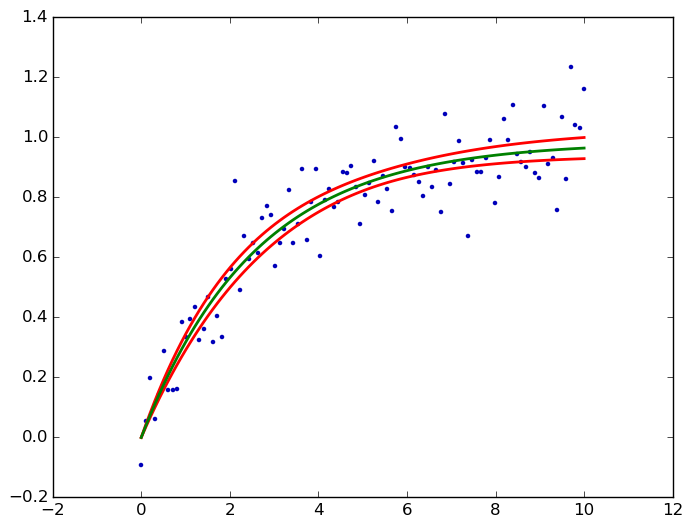

How do I calculate the (95%) confidence band of a fit?

Fitting curves to data is an everyday job for every physicist, so I think this should be implemented somewhere. However, I can't find an implementation, nor do I know how to do it mathematically.
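For the "mathematically" part, one standard approach is the delta method (first-order error propagation), which gives a pointwise band for the fitted mean curve. This is a sketch under the usual assumptions: independent, roughly Gaussian errors with constant variance, and a model that is approximately linear in its parameters near the optimum. The symbols $f$, $\hat{\boldsymbol\beta}$, $\mathbf{J}$, $\mathbf{C}$, $n$ and $p$ are notation introduced here, not taken from the post.

$$
\hat{y}(x) \pm t_{1-\alpha/2,\,n-p}\,\sqrt{\mathbf{g}(x)^{\top}\,\mathbf{C}\,\mathbf{g}(x)},
\qquad
\mathbf{g}(x) = \left.\frac{\partial f(x;\boldsymbol\beta)}{\partial \boldsymbol\beta}\right|_{\boldsymbol\beta=\hat{\boldsymbol\beta}},
\qquad
\mathbf{C} = s^{2}\,(\mathbf{J}^{\top}\mathbf{J})^{-1},
\qquad
s^{2} = \frac{1}{n-p}\sum_{i=1}^{n}\bigl(y_i - f(x_i;\hat{\boldsymbol\beta})\bigr)^{2}
$$

Here $\mathbf{J}$ is the $n \times p$ Jacobian of $f$ with respect to $\boldsymbol\beta$ at the data points, and $\alpha = 0.05$ for a 95% band. For a straight line $f = \beta_0 + \beta_1 x$ the gradient is $\mathbf{g}(x) = (1, x)^{\top}$ and this reduces to the textbook ordinary-least-squares band. A prediction band for new observations would additionally add $s^{2}$ under the square root.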

The only thing I found is seaborn, which does a nice job for linear least squares:

```python
import numpy as np
from matplotlib import pyplot as plt
import seaborn as sns
import pandas as pd

x = np.linspace(0, 10)  # 50 points by default
y = 3 * np.random.randn(50) + x

data = {'x': x, 'y': y}
frame = pd.DataFrame(data, columns=['x', 'y'])

# Recent seaborn versions require keyword arguments for lmplot
sns.lmplot(x='x', y='y', data=frame, ci=95)

plt.savefig("confidence_band.pdf")
```
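For comparison, the straight-line band can also be computed analytically with NumPy and SciPy instead of seaborn (seaborn's documentation says its `ci` band is estimated by bootstrapping, so the two will agree only approximately). This is a minimal sketch assuming independent Gaussian errors with constant variance; `linear_confidence_band` is a hypothetical helper name, not a library function.

```python
import numpy as np
from scipy import stats

def linear_confidence_band(x, y, x_eval, level=0.95):
    """Pointwise confidence band for the mean of an OLS fit y = b0 + b1*x."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_eval = np.asarray(x_eval, dtype=float)
    n = x.size
    # Design matrix with an intercept column
    X = np.column_stack([np.ones(n), x])
    beta, _, _, _ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    dof = n - 2
    s2 = resid @ resid / dof             # residual variance estimate
    cov = s2 * np.linalg.inv(X.T @ X)    # parameter covariance matrix
    X_eval = np.column_stack([np.ones_like(x_eval), x_eval])
    y_fit = X_eval @ beta
    # Standard error of the fitted mean: sqrt(g^T C g) for each row g of X_eval
    se = np.sqrt(np.einsum("ij,jk,ik->i", X_eval, cov, X_eval))
    t = stats.t.ppf(0.5 + level / 2, dof)
    return y_fit, y_fit - t * se, y_fit + t * se

rng = np.random.default_rng(0)
x = np.linspace(0, 10)
y = 3 * rng.standard_normal(50) + x
xs = np.linspace(0, 10, 200)
y_fit, lower, upper = linear_confidence_band(x, y, xs)
```

The result can be drawn with `plt.plot(xs, y_fit)` and `plt.fill_between(xs, lower, upper, alpha=0.3)`.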

But this is just linear least squares. When I want to fit a nonlinear model, such as a saturation curve, I'm stuck.
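One generic way to get a band for an arbitrary nonlinear model is to bootstrap the fit and take percentiles of the refitted curves. The sketch below uses a residual bootstrap, which differs slightly from seaborn's approach of resampling data points. Because the post's original saturation formula did not survive, `saturation` here is a hypothetical stand-in `a * (1 - exp(-b * x))`; `bootstrap_band` is likewise a made-up helper name.

```python
import numpy as np
from scipy.optimize import curve_fit

def saturation(x, a, b):
    # Hypothetical saturation model; replace with your own f(x, *params)
    return a * (1.0 - np.exp(-b * x))

def bootstrap_band(f, x, y, x_eval, p0, n_boot=500, level=0.95, seed=0):
    """Residual-bootstrap pointwise confidence band for a curve_fit model."""
    rng = np.random.default_rng(seed)
    popt, _ = curve_fit(f, x, y, p0=p0)
    fitted = f(x, *popt)
    resid = y - fitted
    curves = []
    for _ in range(n_boot):
        # Build a synthetic data set by resampling residuals, then refit
        y_star = fitted + rng.choice(resid, size=resid.size, replace=True)
        try:
            p_star, _ = curve_fit(f, x, y_star, p0=popt)
        except RuntimeError:
            continue  # skip resamples where the fit does not converge
        curves.append(f(x_eval, *p_star))
    curves = np.array(curves)
    alpha = 1.0 - level
    lower = np.percentile(curves, 100 * alpha / 2, axis=0)
    upper = np.percentile(curves, 100 * (1 - alpha / 2), axis=0)
    return f(x_eval, *popt), lower, upper

rng = np.random.default_rng(2)
x = np.linspace(0, 10, 50)
y = saturation(x, 5.0, 0.7) + 0.3 * rng.standard_normal(x.size)
xs = np.linspace(0.1, 10, 100)
y_fit, lower, upper = bootstrap_band(saturation, x, y, xs, p0=[1.0, 1.0], n_boot=200)
```

This makes no linearization assumption, at the cost of many refits; `n_boot` trades runtime against smoothness of the band.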

Sure, I can compute t-distribution intervals for the parameters from the standard errors returned by a least-squares routine such as `scipy.optimize.curve_fit`, but that is not what I'm looking for.
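Parameter intervals alone do not give a band around the curve, but the covariance matrix `pcov` that `curve_fit` returns can be propagated to the curve with the first-order delta method. This is a sketch, not an established library routine: `saturation` is again a hypothetical stand-in model, `delta_method_band` is a made-up helper, and the Jacobian is taken by central finite differences. The approximation can be poor when the model is strongly nonlinear in its parameters.

```python
import numpy as np
from scipy import stats
from scipy.optimize import curve_fit

def saturation(x, a, b):
    # Hypothetical saturation model; replace with your own f(x, *params)
    return a * (1.0 - np.exp(-b * x))

def delta_method_band(f, x_eval, popt, pcov, dof, level=0.95, eps=1e-6):
    """First-order (delta-method) pointwise confidence band for f(x_eval, *popt)."""
    x_eval = np.asarray(x_eval, dtype=float)
    popt = np.asarray(popt, dtype=float)
    y_fit = f(x_eval, *popt)
    # Numerical gradient of f w.r.t. each parameter, shape (len(x_eval), n_params)
    jac = np.empty((x_eval.size, popt.size))
    for k in range(popt.size):
        h = eps * max(1.0, abs(popt[k]))
        up, dn = popt.copy(), popt.copy()
        up[k] += h
        dn[k] -= h
        jac[:, k] = (f(x_eval, *up) - f(x_eval, *dn)) / (2 * h)
    # sqrt(g^T C g) per point; clip guards against tiny negative round-off
    var = np.einsum("ij,jk,ik->i", jac, pcov, jac)
    se = np.sqrt(np.clip(var, 0.0, None))
    t = stats.t.ppf(0.5 + level / 2, dof)
    return y_fit, y_fit - t * se, y_fit + t * se

rng = np.random.default_rng(1)
x = np.linspace(0, 10, 50)
y = saturation(x, 5.0, 0.7) + 0.3 * rng.standard_normal(x.size)
# With the default absolute_sigma=False, pcov is scaled by the residual variance
popt, pcov = curve_fit(saturation, x, y, p0=[1.0, 1.0])
xs = np.linspace(0, 10, 200)
y_fit, lower, upper = delta_method_band(saturation, xs, popt, pcov, dof=x.size - len(popt))
```

For this particular model the band collapses to zero width at `x = 0`, because every parameter derivative vanishes there.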

Thanks for any help!!