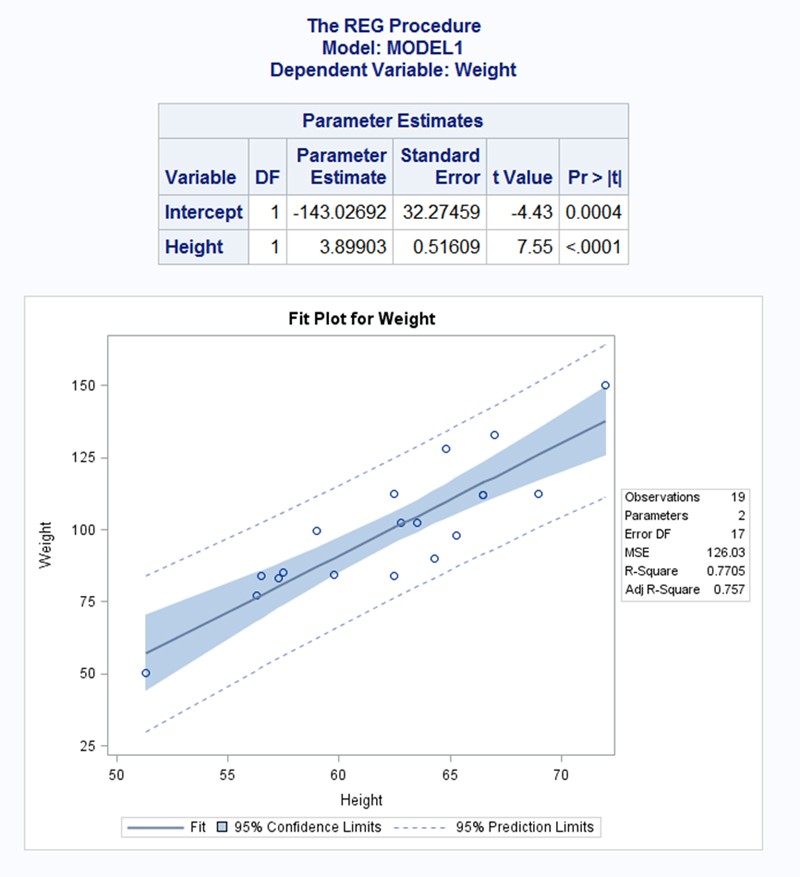

Here's what I put together. I tried to closely emulate your screenshot.

Given

import numpy as np

import scipy as sp

import scipy.stats as stats

import matplotlib.pyplot as plt

%matplotlib inline

# Raw Data

heights = np.array([50,52,53,54,58,60,62,64,66,67,68,70,72,74,76,55,50,45,65])

weights = np.array([25,50,55,75,80,85,50,65,85,55,45,45,50,75,95,65,50,40,45])

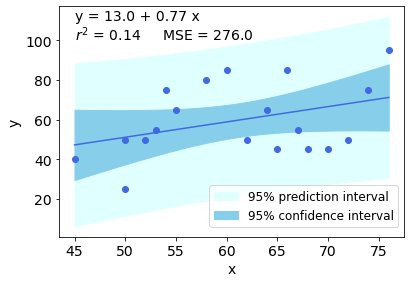

Two detailed options to plot confidence intervals:

def plot_ci_manual(t, s_err, n, x, x2, y2, ax=None):

"""Return an axes of confidence bands using a simple approach.

Notes

-----

.. math:: \left| \: \hat{\mu}_{y|x0} - \mu_{y|x0} \: \right| \; \leq \; T_{n-2}^{.975} \; \hat{\sigma} \; \sqrt{\frac{1}{n}+\frac{(x_0-\bar{x})^2}{\sum_{i=1}^n{(x_i-\bar{x})^2}}}

.. math:: \hat{\sigma} = \sqrt{\sum_{i=1}^n{\frac{(y_i-\hat{y})^2}{n-2}}}

References

----------

.. [1] M. Duarte. "Curve fitting," Jupyter Notebook.

http://nbviewer.ipython.org/github/demotu/BMC/blob/master/notebooks/CurveFitting.ipynb

"""

if ax is None:

ax = plt.gca()

ci = t * s_err * np.sqrt(1/n + (x2 - np.mean(x))**2 / np.sum((x - np.mean(x))**2))

ax.fill_between(x2, y2 + ci, y2 - ci, color="#b9cfe7", edgecolor="")

return ax

def plot_ci_bootstrap(xs, ys, resid, nboot=500, ax=None):

"""Return an axes of confidence bands using a bootstrap approach.

Notes

-----

The bootstrap approach iteratively resampling residuals.

It plots `nboot` number of straight lines and outlines the shape of a band.

The density of overlapping lines indicates improved confidence.

Returns

-------

ax : axes

- Cluster of lines

- Upper and Lower bounds (high and low) (optional) Note: sensitive to outliers

References

----------

.. [1] J. Stults. "Visualizing Confidence Intervals", Various Consequences.

http://www.variousconsequences.com/2010/02/visualizing-confidence-intervals.html

"""

if ax is None:

ax = plt.gca()

bootindex = sp.random.randint

for _ in range(nboot):

resamp_resid = resid[bootindex(0, len(resid) - 1, len(resid))]

# Make coeffs of for polys

pc = sp.polyfit(xs, ys + resamp_resid, 1)

# Plot bootstrap cluster

ax.plot(xs, sp.polyval(pc, xs), "b-", linewidth=2, alpha=3.0 / float(nboot))

return ax

Code

# Computations ----------------------------------------------------------------

# Modeling with Numpy

def equation(a, b):

"""Return a 1D polynomial."""

return np.polyval(a, b)

x = heights

y = weights

p, cov = np.polyfit(x, y, 1, cov=True) # parameters and covariance from of the fit of 1-D polynom.

y_model = equation(p, x) # model using the fit parameters; NOTE: parameters here are coefficients

# Statistics

n = weights.size # number of observations

m = p.size # number of parameters

dof = n - m # degrees of freedom

t = stats.t.ppf(0.975, n - m) # t-statistic; used for CI and PI bands

# Estimates of Error in Data/Model

resid = y - y_model # residuals; diff. actual data from predicted values

chi2 = np.sum((resid / y_model)**2) # chi-squared; estimates error in data

chi2_red = chi2 / dof # reduced chi-squared; measures goodness of fit

s_err = np.sqrt(np.sum(resid**2) / dof) # standard deviation of the error

# Plotting --------------------------------------------------------------------

fig, ax = plt.subplots(figsize=(8, 6))

# Data

ax.plot(

x, y, "o", color="#b9cfe7", markersize=8,

markeredgewidth=1, markeredgecolor="b", markerfacecolor="None"

)

# Fit

ax.plot(x, y_model, "-", color="0.1", linewidth=1.5, alpha=0.5, label="Fit")

x2 = np.linspace(np.min(x), np.max(x), 100)

y2 = equation(p, x2)

# Confidence Interval (select one)

plot_ci_manual(t, s_err, n, x, x2, y2, ax=ax)

#plot_ci_bootstrap(x, y, resid, ax=ax)

# Prediction Interval

pi = t * s_err * np.sqrt(1 + 1/n + (x2 - np.mean(x))**2 / np.sum((x - np.mean(x))**2))

ax.fill_between(x2, y2 + pi, y2 - pi, color="None", linestyle="--")

ax.plot(x2, y2 - pi, "--", color="0.5", label="95% Prediction Limits")

ax.plot(x2, y2 + pi, "--", color="0.5")

#plt.show()

The following modifications are optional, originally implemented to mimic the OP's desired result.

# Figure Modifications --------------------------------------------------------

# Borders

ax.spines["top"].set_color("0.5")

ax.spines["bottom"].set_color("0.5")

ax.spines["left"].set_color("0.5")

ax.spines["right"].set_color("0.5")

ax.get_xaxis().set_tick_params(direction="out")

ax.get_yaxis().set_tick_params(direction="out")

ax.xaxis.tick_bottom()

ax.yaxis.tick_left()

# Labels

plt.title("Fit Plot for Weight", fontsize="14", fontweight="bold")

plt.xlabel("Height")

plt.ylabel("Weight")

plt.xlim(np.min(x) - 1, np.max(x) + 1)

# Custom legend

handles, labels = ax.get_legend_handles_labels()

display = (0, 1)

anyArtist = plt.Line2D((0, 1), (0, 0), color="#b9cfe7") # create custom artists

legend = plt.legend(

[handle for i, handle in enumerate(handles) if i in display] + [anyArtist],

[label for i, label in enumerate(labels) if i in display] + ["95% Confidence Limits"],

loc=9, bbox_to_anchor=(0, -0.21, 1., 0.102), ncol=3, mode="expand"

)

frame = legend.get_frame().set_edgecolor("0.5")

# Save Figure

plt.tight_layout()

plt.savefig("filename.png", bbox_extra_artists=(legend,), bbox_inches="tight")

plt.show()

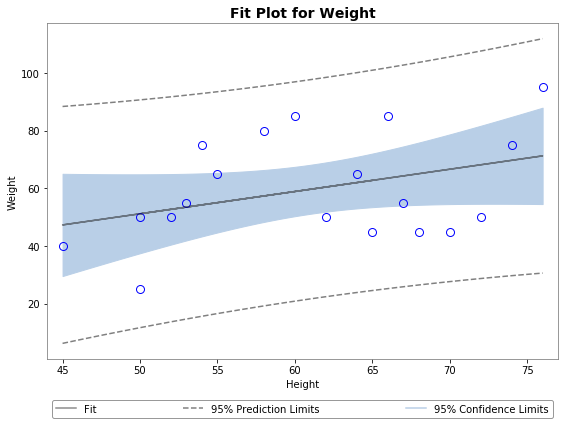

Output

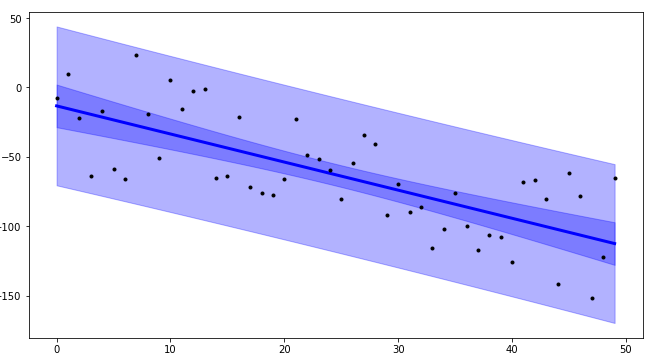

Using plot_ci_manual():

![enter image description here]()

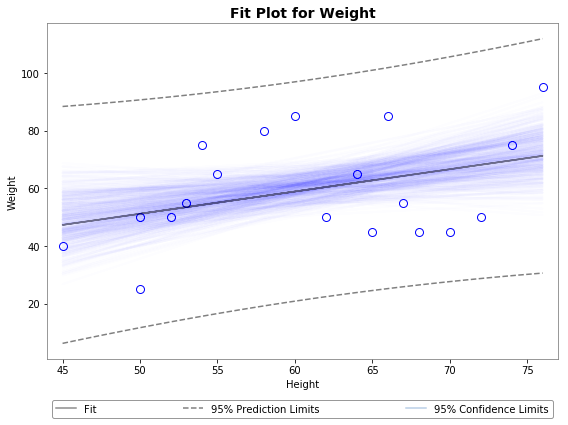

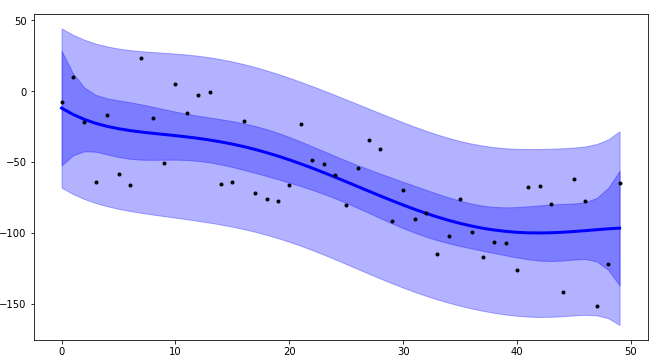

Using plot_ci_bootstrap():

![enter image description here]()

Hope this helps. Cheers.

Details

I believe that since the legend is outside the figure, it does not show up in matplotblib's popup window. It works fine in Jupyter using %maplotlib inline.

The primary confidence interval code (plot_ci_manual()) is adapted from another source producing a plot similar to the OP. You can select a more advanced technique called residual bootstrapping by uncommenting the second option plot_ci_bootstrap().

Updates

- This post has been updated with revised code compatible with Python 3.

stats.t.ppf() accepts the lower tail probability. According to the following resources, t = sp.stats.t.ppf(0.95, n - m) was corrected to t = sp.stats.t.ppf(0.975, n - m) to reflect a two-sided 95% t-statistic (or one-sided 97.5% t-statistic).

y2 was updated to respond more flexibly with a given model (@regeneration).- An abstracted

equation function was added to wrap the model function. Non-linear regressions are possible although not demonstrated. Amend appropriate variables as needed (thanks @PJW).

See Also

- This post on plotting bands with

statsmodels library.

- This tutorial on plotting bands and computing confidence intervals with

uncertainties library (install with caution in a separate environment).