Background

Assuming we have a family of matrices Ms of size n-by-n, which should meet the following requirements:

- Entries of the matrix are either

0or1, i.e., boolean, but diagonal entries are always0s - The matrix is symmetric, i.e.,

M == t(M) - There is a constant row (or equivalently, column) sum constraint

p, such thatall(rowSums(M)==p) == TRUE

Questions

- Is there any potential features from the particular matrix structure, e.g., symmetry, boolean, or somethings else, such that we can take benefits from that to improve the efficiency of searching?

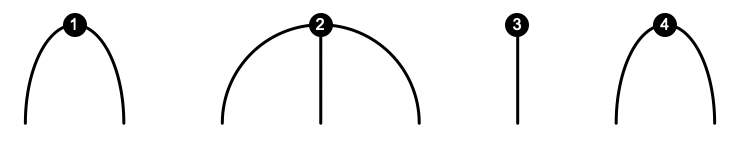

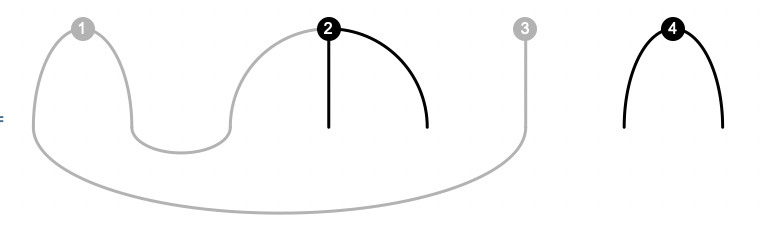

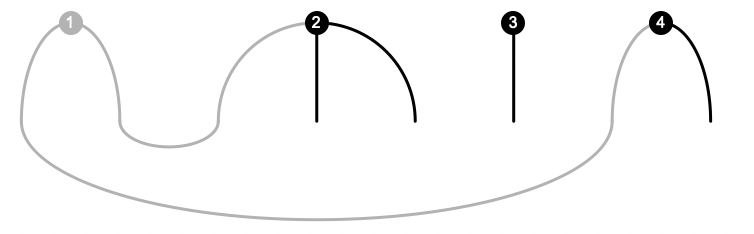

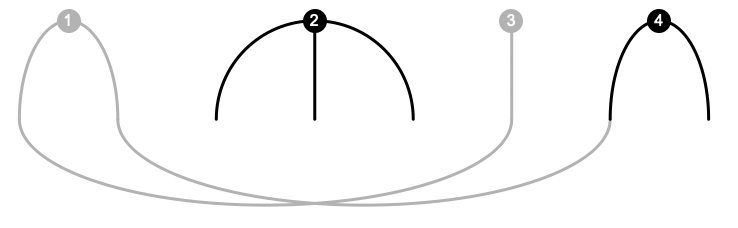

- It seems that the problem can be interpreted as a graph theory way. For example, the matrix is an adjacent matrix of a graph that consists of

nvertices with both indegrees and outdegrees being equal top. This can be done bysample_degseq, but we may have to find all of its isomorphic mappings. How can we do that if we useigraphapproaches?

My code so far looks like below, but it is slow when we have larger n or p (and also I am not sure if some of matrices are missed during the enumeration).

f <- function(n, p) {

# helper function to check if requirement holds

checker <- function(M, p, i = nrow(M) - 1) {

rs <- rowSums(M)

if ((i == nrow(M) - 1)) {

return(all(rs == p))

} else {

return(all(rs[1:i] == p) && all(rs[-(1:i)] <= p))

}

}

# start from all-0's matrix

lst <- list(matrix(0, n, n))

for (i in 1:(n - 1)) {

js <- (i + 1):n

r <- list()

for (mat in lst) {

k <- p - sum(mat[i, ])

if (k == 0) {

if (checker(mat, p, i)) {

r <- c(r, list(mat))

}

}

if (k > 0 && length(js) >= k) {

idx <- combn(length(js), k, \(v) js[v], simplify = FALSE)

for (u in idx) {

mm <- mat

mm[i, u] <- 1

mm[u, i] <- 1

if (checker(mm, p, i)) {

r <- c(r, list(mm))

}

}

}

}

lst <- r

}

lst

}

Examples

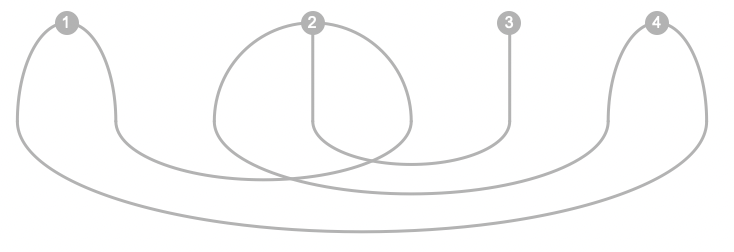

- For

n <- 4andp <- 2, we can find 3 matrices

[[1]]

[,1] [,2] [,3] [,4]

[1,] 0 1 1 0

[2,] 1 0 0 1

[3,] 1 0 0 1

[4,] 0 1 1 0

[[2]]

[,1] [,2] [,3] [,4]

[1,] 0 1 0 1

[2,] 1 0 1 0

[3,] 0 1 0 1

[4,] 1 0 1 0

[[3]]

[,1] [,2] [,3] [,4]

[1,] 0 0 1 1

[2,] 0 0 1 1

[3,] 1 1 0 0

[4,] 1 1 0 0

- For

n <- 3andp <- 2, we can find only 1 matrix

[[1]]

[,1] [,2] [,3]

[1,] 0 1 1

[2,] 1 0 1

[3,] 1 1 0

n!/(n/2)!such matrices for any non-trivialp. Any code generating them will be slow. – Dacostanmight not be that big like hundreds or thousands, but probably up to 10 or 20. I guess there might beigraphsolutions, but no idea how it can be implemented. Your help would be greatly appreciated! – Skimmiais_graphical). If not, cut the search, otherwise continue generating in a recursive manner. If the graph is directed, we split each vertex into an "in" and "out" instance and connect out to in only. – Embaymentn!/(n/2)!such kind of matrices since the row sum constraintpand the symmetry property are two picky filtering conditions. For example,n <- 5andp <- 3will give an empty list since no such matrix can be found. – Skimmiachoose(n-1, p)combinations. Furthermore, all rows would have the same combinations with a 0 inserted at different locations to account for the diag being zero. Last, we know that the upper.tri is equal ton * p / 2. I assume graph theory will get you there faster, but I feel like there might be ways to improve efficiency of brute force algorithm. – Clovah