I'll address the last question first. Microsoft's .NET implementation has release semantics on writes1. It's not C# per se, so the same program, no matter the language, in a different implementation can have weak non-volatile writes.

The visibility of side-effects is regarding multiple threads. Forget about CPUs, cores and caches. Imagine, instead, that each thread has a snapshot of what is on the heap that requires some sort of synchronization to communicate side-effects between threads.

So, what does C# say? The C# language specification (newer draft) says fundamentally the same as the Common Language Infrastructure standard (CLI; ECMA-335 and ISO/IEC 23271) with some differences. I'll talk about them later on.

So, what does the CLI say? That only volatile operations are visible side-effects.

Note that it also says that non-volatile operations on the heap are side-effects as well, but not guaranteed to be visible. Just as important2, it doesn't state they're guaranteed to not be visible either.

What exactly happens on volatile operations? A volatile read has acquire semantics, it precedes any following memory reference. A volatile write has release semantics, it follows any preceding memory reference.

Acquiring a lock performs a volatile read, and releasing a lock performs a volatile write.

Interlocked operations have acquire and release semantics.

There's another important term to learn, which is atomicity.

Reads and writes, volatile or not, are guaranteed to be atomic on primitive values up to 32 bits on 32-bit architectures and up to 64 bits on 64-bit architectures. They're also guaranteed to be atomic for references. For other types, such as long structs, the operations are not atomic, they may require multiple, independent memory accesses.

However, even with volatile semantics, read-modify-write operations, such as v += 1 or the equivalent ++v (or v++, in terms of side-effects) , are not atomic.

Interlocked operations guarantee atomicity for certain operations, typically addition, subtraction and compare-and-swap (CAS), i.e. write some value if and only if the current value is still some expected value. .NET also has an atomic Read(ref long) method for integers of 64 bits which works even in 32-bit architectures.

I'll keep referring to acquire semantics as volatile reads and release semantics as volatile writes, and either or both as volatile operations.

What does this all mean in terms of order?

That a volatile read is a point before which no memory references may cross, and a volatile write is a point after which no memory references may cross, both at the language level and at the machine level.

That non-volatile operations may cross to after following volatile reads if there are no volatile writes in between, and cross to before preceding volatile writes if there are no volatile reads in between.

That volatile operations within a thread are sequential and may not be reordered.

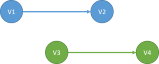

That volatile operations in a thread are made visible to all other threads in the same order. However, there is no total order of volatile operations from all threads, i.e. if one threads performs V1 and then V2, and another thread performs V3 and then V4, then any order that has V1 before V2 and V3 before V4 can be observed by any thread. In this case, it can be either of the following:

- V1 V2 V3 V4

![V1 V2 V3 V4]()

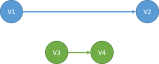

- V1 V3 V2 V4

![V1 V3 V2 V4]()

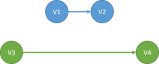

- V1 V3 V4 V2

![V1 V3 V4 V2]()

- V3 V1 V2 V4

![V3 V1 V2 V4]()

- V3 V1 V4 V2

![V3 V1 V4 V2]()

- V3 V4 V1 V2

![V3 V4 V1 V2]()

That is, any possible order of observed side-effects are valid for any thread for a single execution. There is no requirement on total ordering, such that all threads observe only one of the possible orders for a single execution.

How are things synchronized?

Essentially, it boils down to this: a synchronization point is where you have a volatile read that happens after a volatile write.

In practice, you must detect if a volatile read in one thread happened after a volatile write in another thread3. Here's a basic example:

public class InefficientEvent

{

private volatile bool signalled = false;

public Signal()

{

signalled = true;

}

public InefficientWait()

{

while (!signalled)

{

}

}

}

However generally inefficient, you can run two different threads, such that one calls InefficientWait() and another one calls Signal(), and the side-effects of the latter when it returns from Signal() become visible to the former when it returns from InefficientWait().

Volatile accesses are not as generally useful as interlocked accesses, which are not as generally useful as synchronization primitives. My advice is that you should develop code safely first, using synchronization primitives (locks, semaphores, mutexes, events, etc.) as needed, and if you find reasons to improve performance based on actual data (e.g. profiling), then and only then see if you can improve.

If you ever reach high contention for fast locks (used only for a few reads and writes without blocking), depending on the amount of contention, switching to interlocked operations may either improve or decrease performance. Especially so when you have to resort to compare-and-swap cycles, such as:

var currentValue = Volatile.Read(ref field);

var newValue = GetNewValue(currentValue);

var oldValue = currentValue;

var spinWait = new SpinWait();

while ((currentValue = Interlocked.CompareExchange(ref field, newValue, oldValue)) != oldValue)

{

spinWait.SpinOnce();

newValue = GetNewValue(currentValue);

oldValue = currentValue;

}

Meaning, you have to profile the solution as well and compare with the current state. And be aware of the A-B-A problem.

There's also SpinLock, which you must really profile against monitor-based locks, because although they may make the current thread yield, they don't put the current thread to sleep, akin to the shown usage of SpinWait.

Switching to volatile operations is like playing with fire. You must make sure through analytical proof that your code is correct, otherwise you may get burned when you least expect.

Usually, the best approach for optimization in the case of high contention is to avoid contention. For instance, to perform a transformation on a big list in parallel, it's often better to divide and delegate the problem to multiple work items that generate results which are merged in a final step, rather than having multiple threads locking the list for updates. This has a memory cost, so it depends on the length of the data set.

What are the differences between the C# specification and the CLI specification regarding volatile operations?

C# specifies side-effects, not mentioning their inter-thread visibility, as being a read or write of a volatile field, a write to a non-volatile variable, a write to an external resource, and the throwing of an exception.

C# specifies critical execution points at which these side-effects are preserved between threads: references to volatile fields, lock statements, and thread creation and termination.

If we take critical execution points as points where side-effects become visible, it adds to the CLI specification that thread creation and termination are visible side-effects, i.e. new Thread(...).Start() has release semantics on the current thread and acquire semantics at the start of the new thread, and exiting a thread has release semantics on the current thread and thread.Join() has acquire semantics on the waiting thread.

C# doesn't mention volatile operations in general, such as performed by classes in System.Threading instead of only through using fields declared as volatile and using the lock statement. I believe this is not intentional.

C# states that captured variables can be simultaneously exposed to multiple threads. The CIL doesn't mention it, because closures are a language construct.

1.

There are a few places where Microsoft (ex-)employees and MVPs state that writes have release semantics:

In my code, I ignore this implementation detail. I assume non-volatile writes are not guaranteed to become visible.

2.

There is a common misconception that you're allowed to introduce reads in C# and/or the CLI.

However, that is true only for local arguments and variables.

For static and instance fields, or arrays, or anything on the heap, you cannot sanely introduce reads, as such introduction may break the order of execution as seen from the current thread of execution, either from legitimate changes in other threads, or from changes through reflection.

That is, you can't turn this:

object local = field;

if (local != null)

{

// code that reads local

}

into this:

if (field != null)

{

// code that replaces reads on local with reads on field

}

if you can ever tell the difference. Specifically, a NullReferenceException being thrown by accessing local's members.

In the case of C#'s captured variables, they're equivalent to instance fields.

It's important to note that the CLI standard:

says that non-volatile accesses are not guaranteed to be visible

doesn't say that non-volatile accesses are guaranteed to not be visible

says that volatile accesses affect the visibility of non-volatile accesses

But you can turn this:

object local2 = local1;

if (local2 != null)

{

// code that reads local2 on the assumption it's not null

}

into this:

if (local1 != null)

{

// code that replaces reads on local2 with reads on local1,

// as long as local1 and local2 have the same value

}

You can turn this:

var local = field;

local?.Method()

into this:

var local = field;

var _temp = local;

(_temp != null) ? _temp.Method() : null

or this:

var local = field;

(local != null) ? local.Method() : null

because you can't ever tell the difference. But again, you cannot turn it into this:

(field != null) ? field.Method() : null

I believe it was prudent in both specifications stating that an optimizing compiler may reorder reads and writes as long as a single thread of execution observes them as written, instead of generally introducing and eliminating them altogether.

Note that read elimination may be performed by either the C# compiler or the JIT compiler, i.e. multiple reads on the same non-volatile field, separated by instructions that don't write to that field and that don't perform volatile operations or equivalent, may be collapsed to a single read. It's as if a thread never synchronizes with other threads, so it keeps observing the same value:

public class Worker

{

private bool working = false;

private bool stop = false;

public void Start()

{

if (!working)

{

new Thread(Work).Start();

working = true;

}

}

public void Work()

{

while (!stop)

{

// TODO: actual work without volatile operations

}

}

public void Stop()

{

stop = true;

}

}

There's no guarantee that Stop() will stop the worker. Microsoft's .NET implementation guarantees that stop = true; is a visible side-effect, but it doesn't guarantee that the read on stop inside Work() is not elided to this:

public void Work()

{

bool localStop = stop;

while (!localStop)

{

// TODO: actual work without volatile operations

}

}

That comment says quite a lot. To perform this optimization, the compiler must prove that there are no volatile operations whatsoever, either directly in the block, or indirectly in the whole methods and properties call tree.

For this specific case, one correct implementation is to declare stop as volatile. But there are more options, such as using the equivalent Volatile.Read and Volatile.Write, using Interlocked.CompareExchange, using a lock statement around accesses to stop, using something equivalent to a lock, such as a Mutex, or Semaphore and SemaphoreSlim if you don't want the lock to have thread-affinity, i.e. you can release it on a different thread than the one that acquired it, or using a ManualResetEvent or ManualResetEventSlim instead of stop in which case you can make Work() sleep with a timeout while waiting for a stop signal before the next iteration, etc.

3.

One significant difference of .NET's volatile synchronization compared to Java's volatile synchronization is that Java requires you to use the same volatile location, whereas .NET only requires that an acquire (volatile read) happens after a release (volatile write). So, in principle you can synchronize in .NET with the following code, but you can't synchronize with the equivalent code in Java:

using System;

using System.Threading;

public class SurrealVolatileSynchronizer

{

public volatile bool v1 = false;

public volatile bool v2 = false;

public int state = 0;

public void DoWork1(object b)

{

var barrier = (Barrier)b;

barrier.SignalAndWait();

Thread.Sleep(100);

state = 1;

v1 = true;

}

public void DoWork2(object b)

{

var barrier = (Barrier)b;

barrier.SignalAndWait();

Thread.Sleep(200);

bool currentV2 = v2;

Console.WriteLine("{0}", state);

}

public static void Main(string[] args)

{

var synchronizer = new SurrealVolatileSynchronizer();

var thread1 = new Thread(synchronizer.DoWork1);

var thread2 = new Thread(synchronizer.DoWork2);

var barrier = new Barrier(3);

thread1.Start(barrier);

thread2.Start(barrier);

barrier.SignalAndWait();

thread1.Join();

thread2.Join();

}

}

This surreal example expects threads and Thread.Sleep(int) to take an exact amount of time. If this is so, it synchronizes correctly, because DoWork2 performs a volatile read (acquire) after DoWork1 performs a volatile write (release).

In Java, even with such surreal expectations fulfilled, this would not guarantee synchronization. In DoWork2, you'd have to read from the same volatile field you wrote to in DoWork1.

volatile. – Foveolaguardis volatile anddataisn't. Thread A writes todataand then toguard. One second later, thread B readsguardand thendata. Acquire–release semantics only guarantee that thread B cannot observe the new value ofguardand the old value ofdata. Rather, it may observe new–new, old–old, or old–new. – Kalongdataat all (irrespective of which value ofguardis observed), given that the half-fence generated by the volatile read ofguardoccurs chronologically after the half-fence generated by the volatile write ofguard? – Kalong