I have 3 questions:

1)

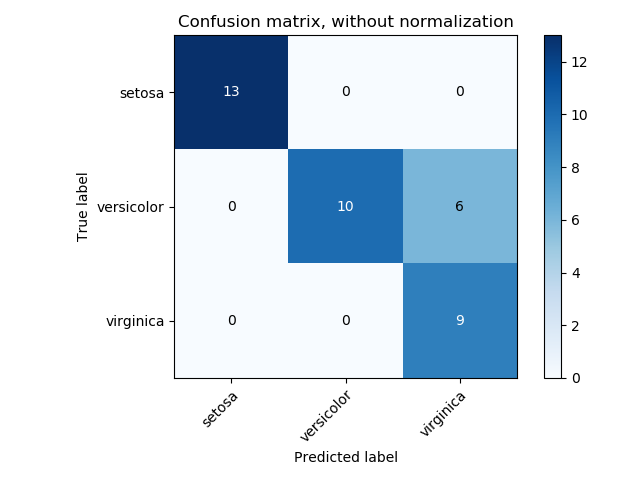

The confusion matrix for sklearn is as follows:

TN | FP

FN | TP

While when I'm looking at online resources, I find it like this:

TP | FP

FN | TN

Which one should I consider?

2)

Since the above confusion matrix for scikit learn is different than the one I find in other rescources, in a multiclass confusion matrix, what's the structure will be? I'm looking at this post here: Scikit-learn: How to obtain True Positive, True Negative, False Positive and False Negative In that post, @lucidv01d had posted a graph to understand the categories for multiclass. is that category the same in scikit learn?

3)

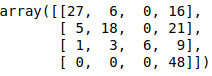

How do you calculate the accuracy of a multiclass? for example, I have this confusion matrix:

[[27 6 0 16]

[ 5 18 0 21]

[ 1 3 6 9]

[ 0 0 0 48]]

In that same post I referred to in question 2, he has written this equation:

Overall accuracy

ACC = (TP+TN)/(TP+FP+FN+TN)

but isn't that just for binary? I mean, for what class do I replace TP with?