My problem:

I have a dataset which is a large JSON file. I read it and store it in the trainList variable.

Next, I pre-process it so that I can work with it.

Once I have done that I start the classification:

- I use the

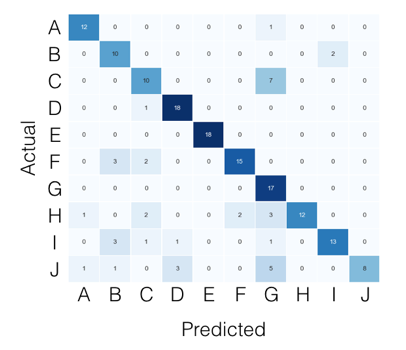

kfoldcross validation method in order to obtain the mean accuracy and train a classifier. - I make the predictions and obtain the accuracy & confusion matrix of that fold.

- After this, I would like to obtain the

True Positive(TP),True Negative(TN),False Positive(FP)andFalse Negative(FN)values. I'll use these parameters to obtain the Sensitivity and Specificity.
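For reference, these are the standard definitions of the two measures, written per label $k$ (one label treated as positive and all other labels as negative; the subscript notation is an assumption for illustration and does not appear in the code):

```latex
\[
\text{Sensitivity}_k = \frac{TP_k}{TP_k + FN_k},
\qquad
\text{Specificity}_k = \frac{TN_k}{TN_k + FP_k}
\]
```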

Finally, I would like to put these values into an HTML page in order to show a chart with the TPs of each label.

Code:

The variables I have for the moment:

    trainList  # a list with all the data of my dataset in JSON form
    labelList  # a list with all the labels of my data

Most of the method:

    # I transform the data from JSON form to a numerical one
    X = vec.fit_transform(trainList)

    # I scale the matrix (I don't know why, but without it I get an error)
    X = preprocessing.scale(X.toarray())

    # I generate a KFold in order to do cross-validation
    kf = KFold(len(X), n_folds=10, indices=True, shuffle=True, random_state=1)

    # I start the cross-validation
    for train_indices, test_indices in kf:
        X_train = [X[ii] for ii in train_indices]
        X_test = [X[ii] for ii in test_indices]
        y_train = [labelList[ii] for ii in train_indices]
        y_test = [labelList[ii] for ii in test_indices]

        # I train the classifier
        trained = qda.fit(X_train, y_train)

        # I make the predictions
        predicted = qda.predict(X_test)

        # I obtain the accuracy of this fold
        ac = accuracy_score(y_test, predicted)

        # I obtain the confusion matrix
        cm = confusion_matrix(y_test, predicted)

        # I should calculate the TP, TN, FP and FN
        # I don't know how to continue
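One possible way to continue, offered as a minimal sketch rather than a definitive solution: with more than two labels, TP, FP, FN and TN can be read off the confusion matrix one label at a time. The sketch assumes scikit-learn's layout, where `cm[i][j]` counts samples whose true class is `i` and whose predicted class is `j`. The helper names `per_class_counts` and `sensitivity_specificity` and the example matrix are hypothetical, made up for illustration. It uses plain Python so it runs on nested lists, for example `cm.tolist()`.

```python
def per_class_counts(cm):
    """Return {class_index: (TP, FP, FN, TN)} for a square confusion matrix.

    Assumes rows are true classes and columns are predicted classes.
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    counts = {}
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # true k, predicted as another class
        fp = sum(cm[i][k] for i in range(n)) - tp  # predicted k, true class differs
        tn = total - tp - fn - fp                  # everything not involving class k
        counts[k] = (tp, fp, fn, tn)
    return counts


def sensitivity_specificity(tp, fp, fn, tn):
    """Return (sensitivity, specificity); a rate is None if its denominator is zero."""
    sens = tp / (tp + fn) if (tp + fn) else None
    spec = tn / (tn + fp) if (tn + fp) else None
    return sens, spec


# Made-up 3-class confusion matrix, only for illustration
example_cm = [
    [5, 1, 0],
    [2, 3, 1],
    [0, 2, 6],
]
counts = per_class_counts(example_cm)
for k, (tp, fp, fn, tn) in counts.items():
    print(k, tp, fp, fn, tn, sensitivity_specificity(tp, fp, fn, tn))
```

Inside the cross-validation loop this could be called as `per_class_counts(cm.tolist())`. Unless a `labels` argument is passed to `confusion_matrix`, index `k` refers to the k-th label in sorted order, so the per-label TP values can be collected per fold for the chart.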