NOTE: I have just read your comment, and as I can understand you don't have access to command line. Please check Solution Two, this will definitely work.

The only solution that will work for you (which work for me at 12GB database) is directly from the command line:

Solution One

mysql -u root -p

set global net_buffer_length=1000000; --Set network buffer length to a large byte number

set global max_allowed_packet=1000000000; --Set maximum allowed packet size to a large byte number

SET foreign_key_checks = 0; --Disable foreign key checking to avoid delays, errors and unwanted behavior

source file.sql --Import your sql dump file

SET foreign_key_checks = 1; --Remember to enable foreign key checks when the procedure is complete!

If you have root access you can create bash script:

#!/bin/sh

# store start date to a variable

imeron=`date`

echo "Import started: OK"

dumpfile="/home/bob/bobiras.sql"

ddl="set names utf8; "

ddl="$ddl set global net_buffer_length=1000000;"

ddl="$ddl set global max_allowed_packet=1000000000; "

ddl="$ddl SET foreign_key_checks = 0; "

ddl="$ddl SET UNIQUE_CHECKS = 0; "

ddl="$ddl SET AUTOCOMMIT = 0; "

# if your dump file does not create a database, select one

ddl="$ddl USE jetdb; "

ddl="$ddl source $dumpfile; "

ddl="$ddl SET foreign_key_checks = 1; "

ddl="$ddl SET UNIQUE_CHECKS = 1; "

ddl="$ddl SET AUTOCOMMIT = 1; "

ddl="$ddl COMMIT ; "

echo "Import started: OK"

time mysql -h 127.0.0.1 -u root -proot -e "$ddl"

# store end date to a variable

imeron2=`date`

echo "Start import:$imeron"

echo "End import:$imeron2"

Source

Solution Two

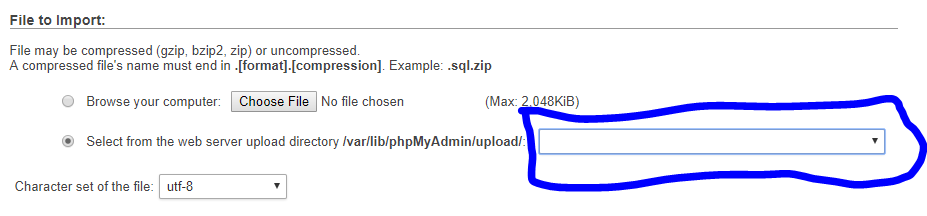

Also, there is another option which is very good for those who are on shared hosting and don't have command line access. This solution worked for me on 4-5GB files:

- MySQL Dumper: Download (You will able to backup/restore SQL file directly from "MySQL Dumper" you don't need phpmyadmin anymore).

- Big Dump: Download (Just restore from Compress file and SQL file, need BIGDUMP PHP file editing for big import

$linespersession = 3000; Change to $linespersession = 30000;)

Solution Three:

This solution definitely works, it is slow but works.

Download Trial version of (32 or 64 bit): Navicat MySQL Version 12

Install -> and RUN as Trial.

After that Add your Computer IP (Internet IP, not local IP), to the MySQL Remote in cPanel (new database/hosting). You can use wildcard IP in cPanel to access MySQL from any IP.

Goto Navicat MySQL: click on Connection put a connection name.

In next "Hostname/IP" add your "Hosting IP Address" (don't use localhost).

Leave port as it is (if your hosting defined a different port put that one here).

add your Database Username and Password

Click Test Connection, If it's successful, click on "OK"

Now on the Main Screen you will see all the database connected with the username on the left side column.

Double click on your database where you want to import SQL file:

Icon color of the database will change and you will see "Tables/views/function etc..".

Now right click on database and select "Execute SQL file" (http://prntscr.com/gs6ef1).

choose the file, choose "continue on error" if you want to and finally run it. Its take some time depending on your network connection speed and computer performance.

SHOW VARIABLES LIKE "%timeout%";Which of those approximates the time before you timed out? – Gyral