Is there a hint I can put in my code indicating that a line should be removed from cache? As opposed to a prefetch hint, which would indicate I will soon need a line. In my case, I know when I won't need a line for a while, so I want to be able to get rid of it to free up space for lines I do need.

clflush, clflushopt

Invalidates from every level of the cache hierarchy in the cache coherence domain the cache line that contains the linear address specified with the memory operand. If that cache line contains modified data at any level of the cache hierarchy, that data is written back to memory.
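As a rough illustration of how this can be used from C, here is a minimal sketch built on the `_mm_clflush` intrinsic from `<emmintrin.h>`. The helper name `evict_range` and the 64-byte `CACHE_LINE` constant are assumptions made for the example, not part of any API; the real line size should be read from CPUID.

```c
#include <emmintrin.h>  /* _mm_clflush and _mm_mfence (SSE2) */
#include <stddef.h>
#include <stdint.h>

/* Assumption: 64-byte cache lines, as on current x86 parts. */
#define CACHE_LINE ((uintptr_t)64)

/* Hypothetical helper: evict every cache line overlapping [p, p + len).
   Returns the number of lines flushed. */
static size_t evict_range(const void *p, size_t len)
{
    if (len == 0)
        return 0;

    uintptr_t line = (uintptr_t)p & ~(CACHE_LINE - 1);  /* round down to a line start */
    uintptr_t end  = (uintptr_t)p + len;
    size_t flushed = 0;

    for (; line < end; line += CACHE_LINE) {
        _mm_clflush((const void *)line);  /* writes back if dirty, then invalidates */
        flushed++;
    }

    /* Orders the flushes ahead of later memory accesses. This is optional
       when the flush is only meant as a performance hint. */
    _mm_mfence();
    return flushed;
}
```

Calling `evict_range(buf, n)` once you are done with `buf` leaves the data intact in memory; only the cached copies are dropped.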

They are not available on every CPU; in particular, clflushopt is only available on 6th-generation Intel Core (Skylake) processors and later. To be certain, you should use CPUID to verify their availability:

The availability of CLFLUSH is indicated by the presence of the CPUID feature flag CLFSH (CPUID.01H:EDX[bit 19]).

The availability of CLFLUSHOPT is indicated by the presence of the CPUID feature flag CLFLUSHOPT (CPUID.(EAX=7,ECX=0):EBX[bit 23]).
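The two CPUID checks quoted above could be sketched as follows. This assumes GCC or Clang, whose `<cpuid.h>` provides `__get_cpuid` and `__get_cpuid_count` (MSVC uses `__cpuid`/`__cpuidex` from `<intrin.h>` instead). The function names are made up for the example. The line-size query relies on CPUID.01H:EBX[15:8], which reports the CLFLUSH line size in units of 8 bytes.

```c
#include <cpuid.h>    /* __get_cpuid, __get_cpuid_count (GCC/Clang only) */
#include <stdbool.h>

/* CLFSH feature flag: CPUID.01H:EDX[bit 19] */
static bool has_clflush(void)
{
    unsigned eax, ebx, ecx, edx;
    if (!__get_cpuid(1, &eax, &ebx, &ecx, &edx))
        return false;
    return (edx >> 19) & 1u;
}

/* CLFLUSHOPT feature flag: CPUID.(EAX=7,ECX=0):EBX[bit 23] */
static bool has_clflushopt(void)
{
    unsigned eax, ebx, ecx, edx;
    if (!__get_cpuid_count(7, 0, &eax, &ebx, &ecx, &edx))
        return false;
    return (ebx >> 23) & 1u;
}

/* CLFLUSH line size in bytes: CPUID.01H:EBX[15:8] * 8.
   Returns 0 if leaf 1 is not available. */
static unsigned clflush_line_size(void)
{
    unsigned eax, ebx, ecx, edx;
    if (!__get_cpuid(1, &eax, &ebx, &ecx, &edx))
        return 0;
    return ((ebx >> 8) & 0xffu) * 8u;
}
```

Running the checks once at start-up and caching the result avoids paying for CPUID, which is a serializing instruction, on a hot path.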

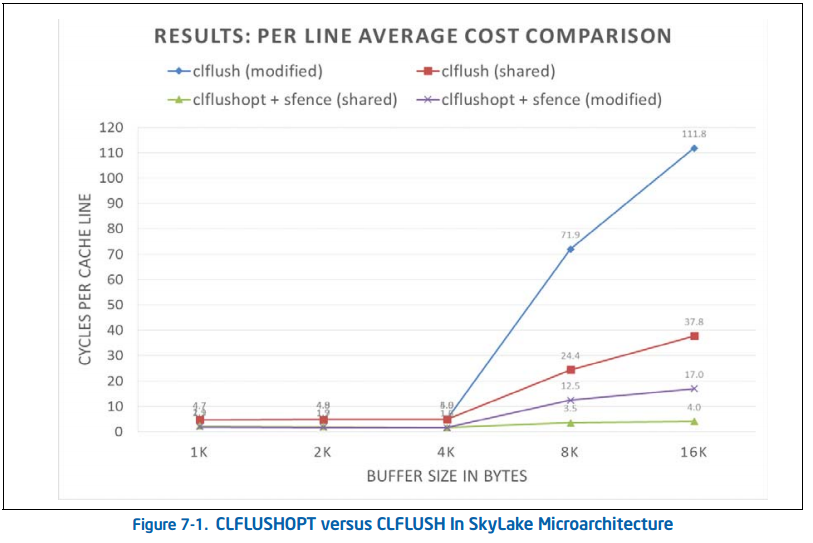

If available, you should use clflushopt. It outperforms clflush when flushing buffers larger than 4KiB (64 lines).

This is the benchmark from Intel's Optimization Manual:
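To make the clflushopt advice concrete, here is a minimal sketch that flushes a whole buffer with `_mm_clflushopt` when the CPU supports it and falls back to `_mm_clflush` otherwise. It assumes GCC or Clang (for `<cpuid.h>` and the `target` function attribute), a 64-byte line size, and a line-aligned buffer; `flush_buffer` and the other names are hypothetical.

```c
#include <cpuid.h>      /* __get_cpuid_count (GCC/Clang only) */
#include <immintrin.h>  /* _mm_clflush, _mm_clflushopt, _mm_sfence */
#include <stddef.h>

#define CACHE_LINE 64   /* assumed line size in bytes */

static int cpu_has_clflushopt(void)
{
    unsigned eax, ebx, ecx, edx;
    return __get_cpuid_count(7, 0, &eax, &ebx, &ecx, &edx) && ((ebx >> 23) & 1u);
}

/* The target attribute lets this one function use clflushopt without
   building the whole file with -mclflushopt. */
__attribute__((target("clflushopt")))
static void flush_lines_opt(char *p, size_t lines)
{
    for (size_t i = 0; i < lines; i++)
        _mm_clflushopt(p + i * CACHE_LINE);
    _mm_sfence();  /* clflushopt is weakly ordered, so fence after the batch */
}

static void flush_lines_legacy(char *p, size_t lines)
{
    for (size_t i = 0; i < lines; i++)
        _mm_clflush(p + i * CACHE_LINE);
}

/* Hypothetical entry point. buf is assumed to be CACHE_LINE-aligned.
   Returns the number of lines flushed. */
static size_t flush_buffer(void *buf, size_t len)
{
    size_t lines = (len + CACHE_LINE - 1) / CACHE_LINE;  /* round up */
    if (cpu_has_clflushopt())
        flush_lines_opt(buf, lines);
    else
        flush_lines_legacy(buf, lines);
    return lines;
}
```

The advantage of clflushopt comes from the flushes being allowed to overlap, which is why a single fence is placed after the loop rather than after each line.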

For informational purposes (and assuming you are running in a privileged context), you can also use invd as a nuke-from-orbit option. This:

Invalidates (flushes) the processor’s internal caches and issues a special-function bus cycle that directs external caches to also flush themselves. Data held in internal caches is not written back to main memory.

or wbinvd, which:

Writes back all modified cache lines in the processor’s internal cache to main memory and invalidates (flushes) the internal caches. The instruction then issues a special-function bus cycle that directs external caches to also write back modified data and another bus cycle to indicate that the external caches should be invalidated.

A future instruction that could make it into the ISA is clwb. Although this won't fit your need (because it doesn't necessarily invalidate the line), it's worth mentioning for completeness. This:

Writes back to memory the cache line (if dirty) that contains the linear address specified with the memory operand from any level of the cache hierarchy in the cache coherence domain. The line may be retained in the cache hierarchy in non-modified state. Retaining the line in the cache hierarchy is a performance optimization (treated as a hint by hardware) to reduce the possibility of cache miss on a subsequent access. Hardware may choose to retain the line at any of the levels in the cache hierarchy, and in some cases, may invalidate the line from the cache hierarchy.

There is also the cldemote instruction, but it has not yet made it to the mainstream ISA. It's in the instruction set extensions reference manual. clwb writes back a modified line but may keep it in the original cache or in a more distant cache, which makes it unreliable for your purpose. What are the use cases for demoting a cache line? Lines are automatically evicted, so I guess it's for saving bandwidth (i.e. writing a modified line back now rather than when making room for a new line). – Uncircumcised

prefetchnta before reading the memory. But unfortunately SSE4.1 streaming loads (movntdqa) don't seem to have any effect on write-back memory on CPUs like Intel Skylake desktop; the non-temporal hint seems to be ignored, instead of doing anything cool like inserting newly-allocated cache lines into the LRU position (next to be evicted). – Supertanker
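One possible reading of the prefetchnta suggestion, sketched in C: issue a non-temporal prefetch a few lines ahead of a read-once pass so that the data is less likely to displace lines you still need. The function name, the 64-byte line size, and the prefetch distance of 8 lines are assumptions picked for illustration; how much pollution this actually avoids depends on the microarchitecture.

```c
#include <stddef.h>
#include <stdint.h>
#include <xmmintrin.h>  /* _mm_prefetch, _MM_HINT_NTA */

#define CACHE_LINE  64  /* assumed line size in bytes */
#define LINES_AHEAD 8   /* arbitrary prefetch distance; tune by measurement */

/* Hypothetical read-once pass: sums data[0..n) while hinting that each
   line will not be reused. Assumes data starts on a line boundary so that
   every per_line-th element begins a new line. */
static uint64_t sum_streaming(const uint32_t *data, size_t n)
{
    const size_t per_line = CACHE_LINE / sizeof data[0];
    const size_t ahead = LINES_AHEAD * per_line;
    uint64_t total = 0;

    for (size_t i = 0; i < n; i++) {
        /* One prefetch per line, and never past the end of the array. */
        if (i % per_line == 0 && i + ahead < n)
            _mm_prefetch((const char *)&data[i + ahead], _MM_HINT_NTA);
        total += data[i];
    }
    return total;
}
```

The prefetch is only a hint, so the result is the same whether or not the hardware honors it.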