I have a TensorFlow model that I built (a 1D CNN) and would now like to use in .NET.

To do so, I need to know the input and output nodes.

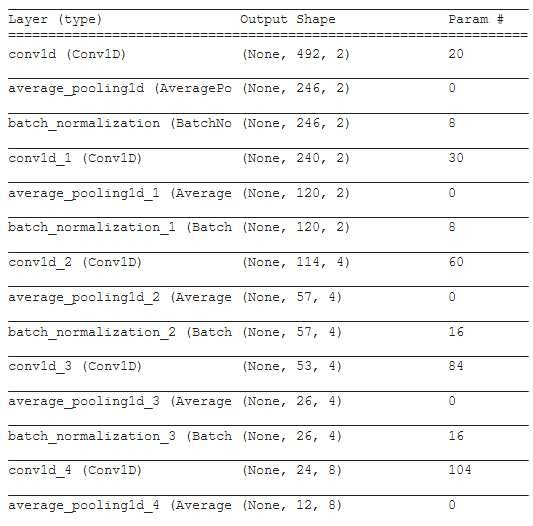

When I load the model into Netron, I get a different graph depending on how I saved it, and the only one that looks correct comes from the h5 file. Here is the `model.summary()`:

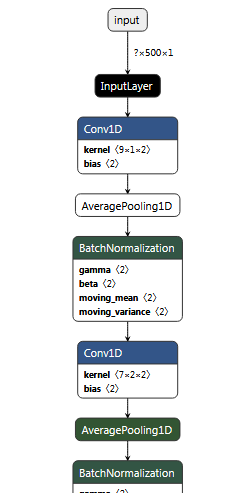

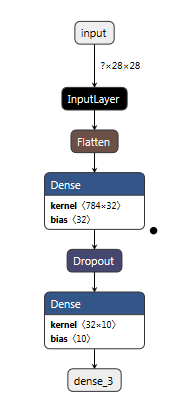

If I save the model as an h5 file with `model.save("Mn_pb_model.h5")` and load that into Netron to graph it, everything looks correct:

However, ML.NET does not accept the h5 format, so the model needs to be saved as a .pb file.

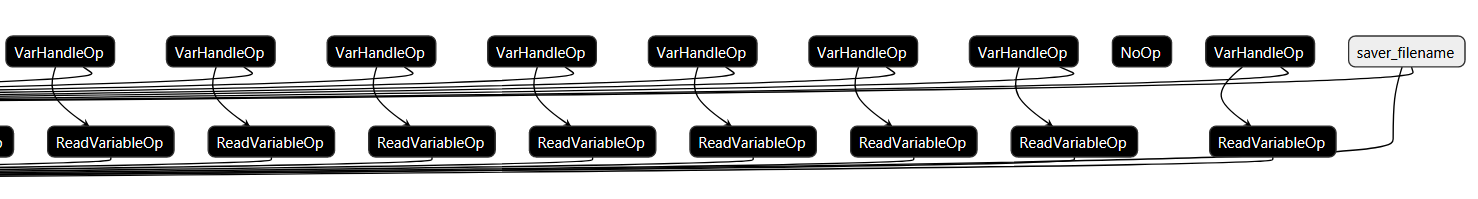

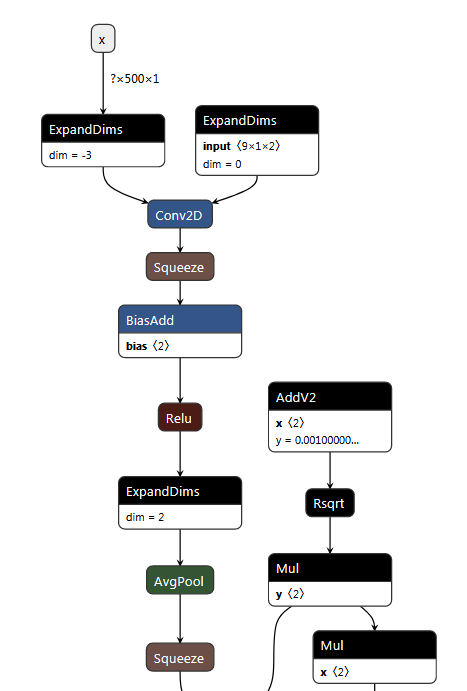

Looking through the ML.NET samples that use TensorFlow, this sample shows a TensorFlow model saved in a format similar to the `SavedModel` format, which is recommended by TensorFlow (and also by ML.NET here: "Download an unfrozen [SavedModel format] ..."). However, when I save my model this way and load the .pb file into Netron, I get this:

And zoomed in a little further (on the far right side):

As you can see, it looks nothing like it should.

Additionally, the input and output nodes are not correct, so the model will not work in ML.NET (and I think something is wrong).

I am using the method TensorFlow recommends to determine the input/output nodes:
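A minimal sketch, assuming the method in question is TensorFlow's `saved_model_cli` tool, run as `saved_model_cli show --dir my_model --tag_set serve --signature_def serving_default`. The helper names below are hypothetical, and the `SAMPLE` text only imitates the layout that command prints: the key `conv1d_input`, the shapes, and the tensor names are illustrative, not output from this model.

```python
import re

# Illustrative text in the layout printed by `saved_model_cli show ...`
# (made-up keys/shapes, not taken from the model in this question).
SAMPLE = """\
The given SavedModel SignatureDef contains the following input(s):
  inputs['conv1d_input'] tensor_info:
      dtype: DT_FLOAT
      shape: (-1, 100, 1)
      name: serving_default_conv1d_input:0
The given SavedModel SignatureDef contains the following output(s):
  outputs['dense_1'] tensor_info:
      dtype: DT_FLOAT
      shape: (-1, 10)
      name: StatefulPartitionedCall:0
Method name is: tensorflow/serving/predict
"""

# Start of a tensor_info block, e.g. "inputs['conv1d_input'] tensor_info:"
KEY_RE = re.compile(r"^\s*(inputs|outputs)\['([^']+)'\] tensor_info:")
# The graph tensor name inside that block, e.g. "name: StatefulPartitionedCall:0"
NAME_RE = re.compile(r"^\s*name:\s*(\S+)")


def parse_signature(text):
    """Hypothetical helper: map each signature key to its graph tensor name."""
    result = {"inputs": {}, "outputs": {}}
    current = None  # (section, key) of the tensor_info block being read
    for line in text.splitlines():
        key_match = KEY_RE.match(line)
        if key_match:
            current = (key_match.group(1), key_match.group(2))
            continue
        name_match = NAME_RE.match(line)
        if name_match and current is not None:
            section, key = current
            result[section][key] = name_match.group(1)
            current = None
    return result


def op_name(tensor_name):
    """Strip the ':0' output index, leaving the operation (node) name."""
    return tensor_name.rsplit(":", 1)[0]
```

In TF 2.x exports the output tensor is often a generic `StatefulPartitionedCall` node rather than something named after the last layer, so names like these can look unfamiliar next to the h5 graph in Netron.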

When I instead freeze the graph and load it into Netron, at first glance it looks correct, but I don't think it is:

There are four reasons I do not think this is correct.

- It is very different from the graph of the h5 file (which looks correct to me).

- As the model summary shows, I am using 1D convolutions throughout, but this graph shows the operations becoming 2D (and staying that way).

- The file size is 128 MB, whereas the model in the TensorFlow-to-ML.NET example is only 252 KB. Even the Inception model is only 56 MB.

- If I load the Inception model in TensorFlow and save it as an h5, it looks the same as the one from the ML.NET resource, yet when I save it as a frozen graph it looks different. If I save the same model in the recommended `SavedModel` format, it shows up all messed up in Netron. Take any model you want, save it in the recommended `SavedModel` format, and you will see for yourself (I've tried it on many different models).
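On the 1D-vs-2D point: the TensorFlow API documentation for `tf.nn.conv1d` says the op reshapes its inputs and invokes `tf.nn.conv2d` internally, so 2D convolution nodes in a frozen graph of a `Conv1D` model may reflect that implementation detail rather than a change to the layers. The pure-Python sketch below checks only the arithmetic behind that (hypothetical helper names; single channel, no padding, no TensorFlow): adding a height-1 axis, running a 2D convolution, and squeezing the axis away gives the same result as a 1D convolution.

```python
def conv1d(x, k):
    """Valid (no padding) 1D cross-correlation, as deep-learning 'conv' layers compute."""
    n = len(x) - len(k) + 1
    return [sum(x[i + j] * k[j] for j in range(len(k))) for i in range(n)]


def conv2d(x, k):
    """Valid 2D cross-correlation; x and k are lists of rows."""
    out_h = len(x) - len(k) + 1
    out_w = len(x[0]) - len(k[0]) + 1
    return [
        [
            sum(x[r + i][c + j] * k[i][j]
                for i in range(len(k)) for j in range(len(k[0])))
            for c in range(out_w)
        ]
        for r in range(out_h)
    ]


def conv1d_via_conv2d(x, k):
    """Mimic the reshape trick: add a height-1 axis, run conv2d, squeeze it away."""
    expanded_x = [x]  # like ExpandDims: input (W,) -> (1, W)
    expanded_k = [k]  # kernel (K,) -> (1, K)
    out = conv2d(expanded_x, expanded_k)
    return out[0]     # like Squeeze: output (1, W') -> (W',)
```

For example, `conv1d_via_conv2d([1, 2, 3, 4, 5], [1, 0, -1])` returns `[-2, -2, -2]`, the same as `conv1d` on the same inputs.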

Additionally, Inception's `model.summary()` corresponds to its graph in the same way that my `model.summary()` corresponds to my h5 graph.

It seems like there should be an easier way (and a correct way) to save a TensorFlow model so it can be used in ML.NET.
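For reference, a minimal sketch of what a `SavedModel` export contains on disk, assuming TensorFlow 2.x, where `tf.saved_model.save(model, "my_model")` writes a directory rather than a single file. The `check_saved_model_dir` and `missing_parts` helpers are hypothetical; they only look for the `saved_model.pb` graph file and the `variables` folder with its `variables.index` file.

```python
from pathlib import Path

# Export step (TensorFlow 2.x, not run here):
#   tf.saved_model.save(model, "my_model")
# This writes a directory such as my_model/ rather than a single .pb file.


def check_saved_model_dir(path):
    """Hypothetical helper: report which expected SavedModel pieces exist."""
    root = Path(path)
    return {
        # Serialized graph and signatures live in this protobuf file.
        "saved_model.pb": (root / "saved_model.pb").is_file(),
        # Trained weights are stored separately under variables/.
        "variables/": (root / "variables").is_dir(),
        # Index of the weight shards (present for a model with trained weights).
        "variables/variables.index": (root / "variables" / "variables.index").is_file(),
    }


def missing_parts(path):
    """Return the names of expected entries that are not present."""
    return [name for name, ok in check_saved_model_dir(path).items() if not ok]
```

Running `missing_parts("my_model")` after the export should return an empty list if the directory has the expected layout.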

Please show that your suggested solution works: in your answer, please verify it (load the .pb model, which should also have a `variables` folder in order to work with ML.NET, into Netron and show that its graph is the same as the h5 model's, e.g., with a screenshot). So that we are all trying the same thing, here is a link to an MNIST ML Crash Course example. The program takes less than 30 s to run and produces a model called `my_model`. From there you can save it using your method and load it into Netron to see the graph. Here is the h5 model upload:

... `SavedModel` format to load into ML.NET, yet in this format you can't read it into ML.NET because the nodes are messed up. Also, this ML.NET TensorFlow example is in pb format, and its Netron graph looks the same as its h5 counterpart (hinting that they should be the same in order to be used in ML.NET). – Shool