I was experimenting with the AVX and AVX2 instruction sets to measure the performance of streaming over consecutive arrays. The example below does a basic memory read and store.

    #include <iostream>
    #include <string.h>
    #include <immintrin.h>
    #include <chrono>
    #include <cstdint>  // uint64_t

    const uint64_t BENCHMARK_SIZE = 5000;

    // Three arrays laid out back to back; the timed loop only touches a and b.
    typedef struct alignas(32) data_t {
        double a[BENCHMARK_SIZE];
        double c[BENCHMARK_SIZE];
        alignas(32) double b[BENCHMARK_SIZE];
    } data;

    int main() {
        data myData;
        memset(&myData, 0, sizeof(data_t));

        auto start = std::chrono::high_resolution_clock::now();
        // Repeat the pass one million times (std::micro::den == 1000000).
        for (auto rep = 0; rep < std::micro::den; rep++) {
            for (uint64_t i = 0; i < BENCHMARK_SIZE; i += 1) {
                myData.b[i] = myData.a[i] + 1;
            }
        }
        auto end = std::chrono::high_resolution_clock::now();

        // Average clock ticks per pass, plus one element of b so the result is used.
        std::cout << (end - start).count() / std::micro::den << " " << myData.b[1]
                  << std::endl;
    }

I compiled it with `g++-4.9 -ggdb -march=core-avx2 -std=c++11 struct_of_arrays.cpp -O3 -o struct_of_arrays`.

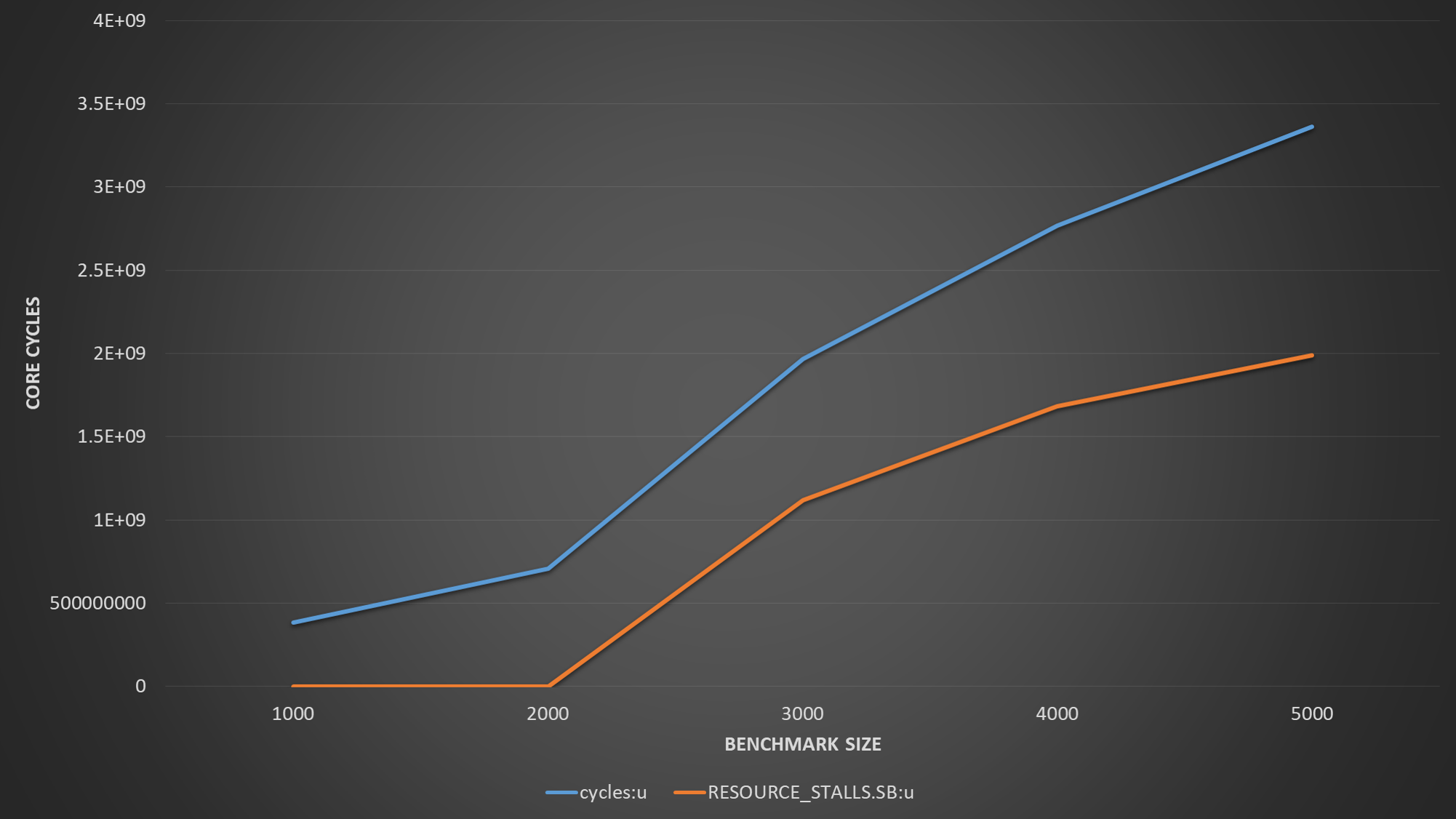

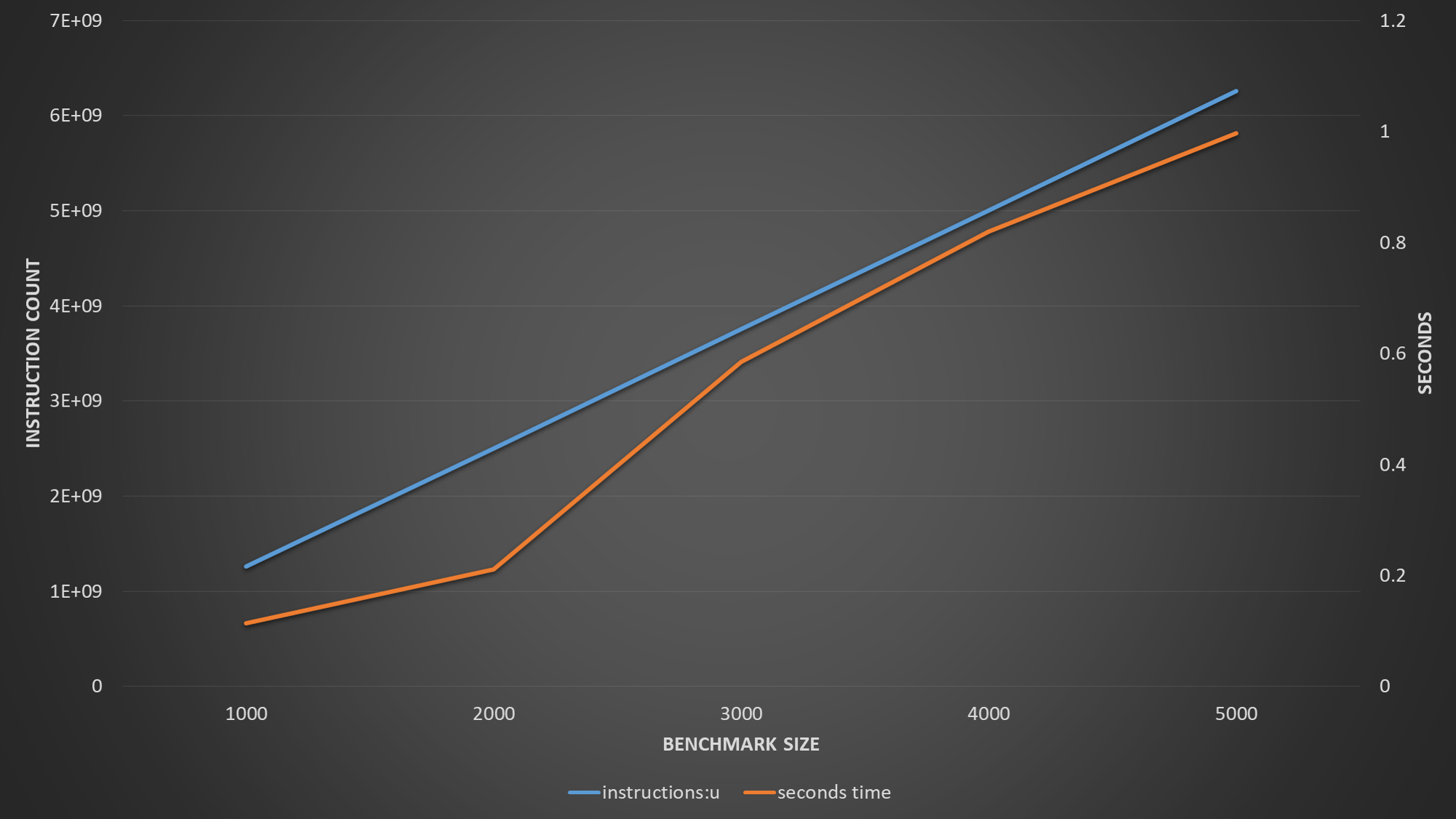

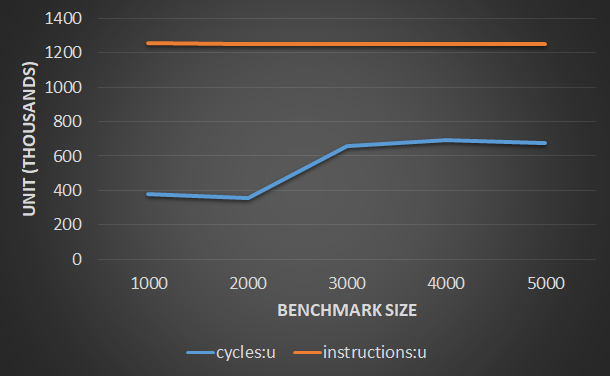

I see quite good instructions-per-cycle (IPC) figures and timings for a benchmark size of 4000. However, once I increase the benchmark size to 5000, IPC drops significantly and the run time jumps. The degradation appears to be related to the L1 cache, but I cannot explain why it happens so suddenly.

To give more insight, here is the perf output for benchmark sizes 4000 and 5000:

| Event                               | Size=4000 | Size=5000 |
|-------------------------------------|-----------|-----------|
| Time                                | 245 ns    | 950 ns    |
| L1 load hit                         | 525881    | 527210    |
| L1 load miss                        | 16689     | 21331     |
| L1D writebacks that access L2 cache | 1172328   | 623710387 |
| L1D data line replacements          | 1423213   | 624753092 |

So my question is: why is the impact this large, considering that Haswell should be capable of two 32-byte loads and one 32-byte store per cycle?
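To make that expectation concrete, here is a minimal sketch of the cycle lower bound per pass implied by those peak rates. It assumes 8-byte doubles and takes the load and store widths straight from the figures quoted above; `ceilDiv`, `loadCycles`, `storeCycles` and `minCyclesPerPass` are hypothetical helper names, not part of the benchmark.

```cpp
#include <cstddef>

// Assumed peak L1D rates per cycle, as quoted in the question.
constexpr std::size_t kLoadBytesPerCycle = 2 * 32;
constexpr std::size_t kStoreBytesPerCycle = 32;

// Round-up integer division.
constexpr std::size_t ceilDiv(std::size_t bytes, std::size_t perCycle) {
    return (bytes + perCycle - 1) / perCycle;
}

// Cycles needed to load n doubles at the assumed peak load rate.
constexpr std::size_t loadCycles(std::size_t n) {
    return ceilDiv(n * sizeof(double), kLoadBytesPerCycle);
}

// Cycles needed to store n doubles at the assumed peak store rate.
constexpr std::size_t storeCycles(std::size_t n) {
    return ceilDiv(n * sizeof(double), kStoreBytesPerCycle);
}

// One pass of b[i] = a[i] + 1 is bounded by the slower of the two streams.
constexpr std::size_t minCyclesPerPass(std::size_t n) {
    return loadCycles(n) > storeCycles(n) ? loadCycles(n) : storeCycles(n);
}

static_assert(minCyclesPerPass(4000) == 1000, "store-bound: 32000 bytes / 32");
static_assert(minCyclesPerPass(5000) == 1250, "store-bound: 40000 bytes / 32");
```

Under these assumptions, going from 4000 to 5000 elements should cost about 1.25x more cycles per pass, whereas the measured time goes from 245 ns to 950 ns, which is close to 4x.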

EDIT 1

I realized that with this code gcc smartly eliminates the accesses to `myData.a`, since it is set to 0. To avoid this, I wrote a slightly different benchmark in which `a` is explicitly set.

    #include <iostream>
    #include <string.h>
    #include <immintrin.h>
    #include <chrono>
    #include <cstdint>  // uint64_t

    const uint64_t BENCHMARK_SIZE = 4000;

    typedef struct alignas(64) data_t {
        double a[BENCHMARK_SIZE];
        alignas(32) double c[BENCHMARK_SIZE];
        alignas(32) double b[BENCHMARK_SIZE];
    } data;

    int main() {
        data myData;
        memset(&myData, 0, sizeof(data_t));

        // Report the struct size and how many 64-byte cache lines one array spans.
        std::cout << sizeof(data) << std::endl;
        std::cout << sizeof(myData.a) << " cache lines " << sizeof(myData.a) / 64
                  << std::endl;

        // Explicitly initialize all three arrays before timing.
        for (uint64_t i = 0; i < BENCHMARK_SIZE; i += 1) {
            myData.b[i] = 0;
            myData.a[i] = 1;
            myData.c[i] = 2;
        }

        auto start = std::chrono::high_resolution_clock::now();
        // Repeat the pass one million times (std::micro::den == 1000000).
        for (auto rep = 0; rep < std::micro::den; rep++) {
            for (uint64_t i = 0; i < BENCHMARK_SIZE; i += 1) {
                myData.b[i] = myData.a[i] + 1;
            }
        }
        auto end = std::chrono::high_resolution_clock::now();

        // Average clock ticks per pass, plus one element of b so the result is used.
        std::cout << (end - start).count() / std::micro::den << " " << myData.b[1]
                  << std::endl;
    }

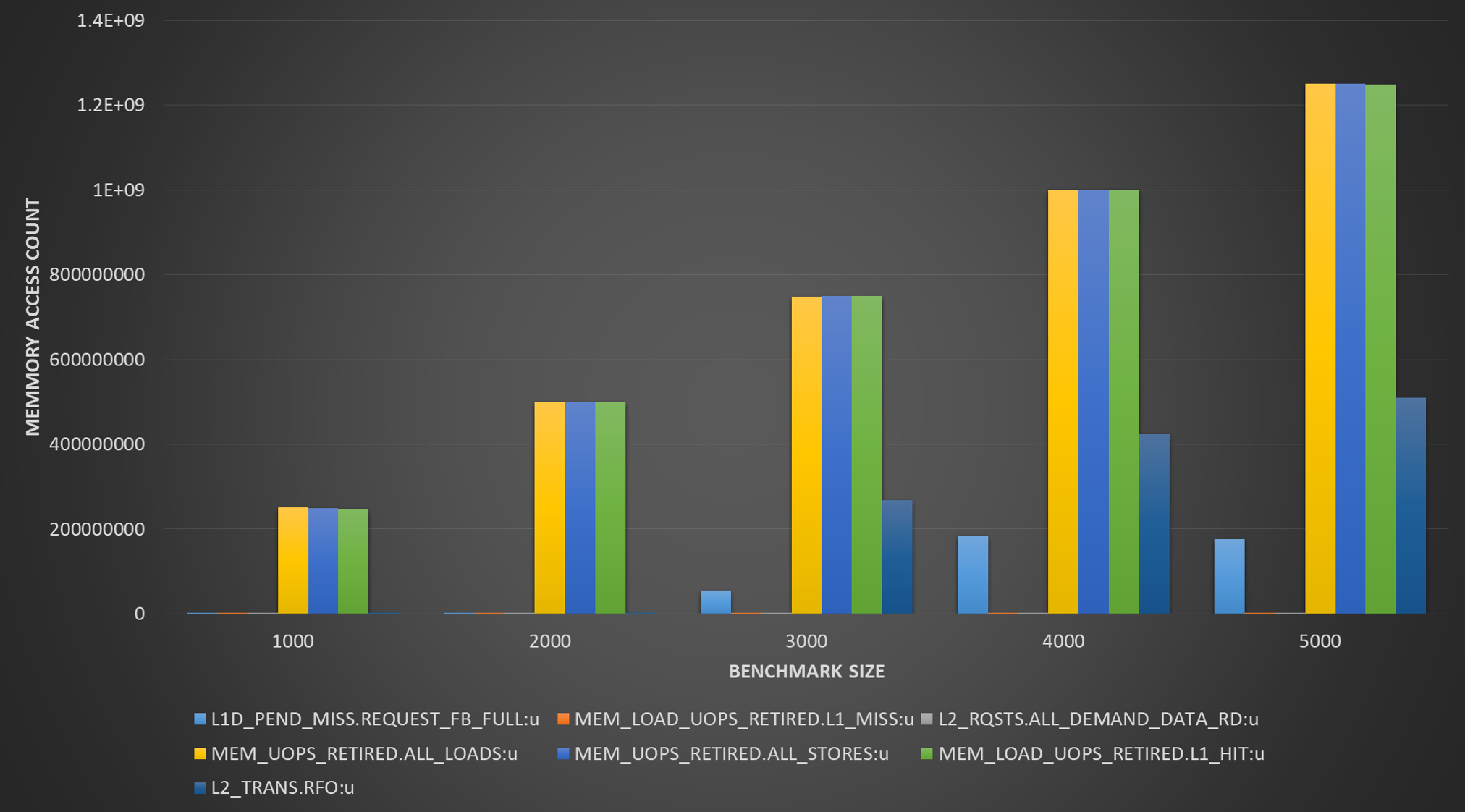

The second example reads one array and writes another. It produces the following perf output for different sizes:

| Event          | Size=1000   | Size=2000   | Size=3000   | Size=4000     |
|----------------|-------------|-------------|-------------|---------------|
| Time           | 86 ns       | 166 ns      | 734 ns      | 931 ns        |
| L1 load hit    | 252,807,410 | 494,765,803 | 9,335,692   | 9,878,121     |
| L1 load miss   | 24,931      | 585,891     | 370,834,983 | 495,678,895   |
| L2 load hit    | 16,274      | 361,196     | 371,128,643 | 495,554,002   |
| L2 load miss   | 9,589       | 11,586      | 18,240      | 40,147        |
| L1D wb acc. L2 | 9,121       | 771,073     | 374,957,848 | 500,066,160   |
| L1D repl.      | 19,335      | 1,834,100   | 751,189,826 | 1,000,053,544 |
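As a rough check on where the knee between sizes 2000 and 3000 comes from, the sketch below compares the bytes touched per pass with the L1 data cache capacity. It assumes a 32 KiB L1D (the usual Haswell figure) and 8-byte doubles; `workingSetBytes` and `fitsInL1d` are hypothetical helper names used only for illustration.

```cpp
#include <cstddef>

// Assumption: 32 KiB L1 data cache, as on Haswell.
constexpr std::size_t kL1dBytes = 32 * 1024;

// Bytes touched per pass when the loop streams over `arrays` arrays of n doubles.
constexpr std::size_t workingSetBytes(std::size_t n, std::size_t arrays) {
    return n * sizeof(double) * arrays;
}

// True when the whole working set could stay resident in L1D between passes.
constexpr bool fitsInL1d(std::size_t n, std::size_t arrays) {
    return workingSetBytes(n, arrays) <= kL1dBytes;
}

// Second benchmark: a[] is read and b[] is written, so two arrays are live.
static_assert(fitsInL1d(1000, 2), "16000 bytes");
static_assert(fitsInL1d(2000, 2), "32000 bytes, just under 32768");
static_assert(!fitsInL1d(3000, 2), "48000 bytes");
static_assert(!fitsInL1d(4000, 2), "64000 bytes");

// First benchmark: gcc removed the loads from a[], so only b[] is live.
static_assert(fitsInL1d(4000, 1), "32000 bytes");
static_assert(!fitsInL1d(5000, 1), "40000 bytes");
```

With these assumptions, both the 4000 to 5000 jump in the first benchmark and the 2000 to 3000 jump in the second coincide with the working set crossing 32768 bytes.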

Again the same pattern appears, as pointed out in the answer: as the data set grows, the data no longer fits in L1 and L2 becomes the bottleneck.

What is also interesting is that prefetching does not seem to help, and L1 misses increase considerably. I would expect at least a 50 percent hit rate, since each 64-byte cache line brought into L1 for a read should be a hit on the second access (32 bytes are read per iteration). However, once the data set spills over to L2, the L1 hit rate drops to about 2%. Since the arrays do not really overlap with respect to the L1 cache size, this should not be caused by cache conflicts. So this part still does not make sense to me.
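The hit-rate arithmetic in that paragraph can be written out as a small sketch. It assumes 64-byte lines and 32-byte loads as stated, and plugs in the L1 load hit and miss counters from the table; the helper names `expectedHitPermille` and `measuredHitPermille` are hypothetical, and rates are in tenths of a percent, rounded down.

```cpp
#include <cstdint>

// Assumptions taken from the text: 64-byte cache lines, 32-byte loads.
constexpr std::uint64_t kLineBytes = 64;
constexpr std::uint64_t kLoadBytes = 32;

// Expected hit rate (per mille) if only the first load to each line misses.
constexpr std::uint64_t expectedHitPermille() {
    return (kLineBytes / kLoadBytes - 1) * 1000 / (kLineBytes / kLoadBytes);
}

// Hit rate (per mille, rounded down) from the perf hit and miss counters.
constexpr std::uint64_t measuredHitPermille(std::uint64_t hits, std::uint64_t misses) {
    return hits * 1000 / (hits + misses);
}

static_assert(expectedHitPermille() == 500, "one miss, one hit per line");
static_assert(measuredHitPermille(252807410, 24931) == 999, "size 1000");
static_assert(measuredHitPermille(9335692, 370834983) == 24, "size 3000");
static_assert(measuredHitPermille(9878121, 495678895) == 19, "size 4000");
```

So the counters give roughly 2.4% at size 3000 and 1.9% at size 4000, against the 50% that the one-miss-per-line reasoning predicts.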