I am working on a project which is image searching engine. The logic behind is that there will be some images stored in a database and the user will input a new image and it will be matched with the ones stored in the database. The result will be a list of closest match of the query image with stored images in the database.

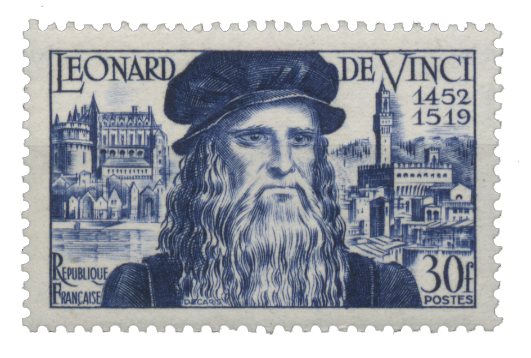

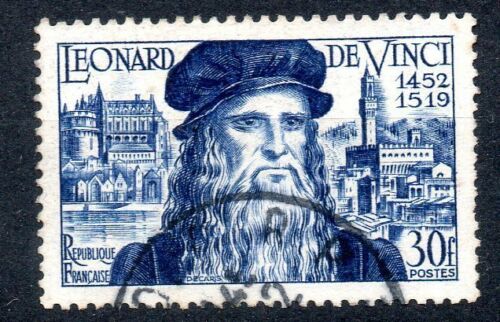

The images are of stamps. Now the problem is that there are New and Used stamps. New is just a stamp image and Used is with a some part of it obscured by a black cancellation mark so it cannot be a perfect match.

Here are few sample of both (New and Used):

I have used various measures, such as compare_mse, compare_ssim and compare_nrmse. But they all tilt towards dissimilarity. I have also used the https://github.com/EdjoLabs/image-match algorithm but it also is same giving low similarity score.

Do you guys think I neeed to use some preprocessing or something? I have also removed black borders from the image but the result is somewhat better not satisfactory though. I have converted them into gray-scale and matched, still no satisfactory results. Any recommendations and suggestion on how to get high similarity scores would be greatly appreciated! Here is my code:

img1 = cv2.imread('C:\\Users\\Quin\\Desktop\\1frclean2.jpg', cv2.IMREAD_GRAYSCALE)

img2 = cv2.imread('C:\\Users\\Quin\\Desktop\\1fr.jpg', cv2.IMREAD_GRAYSCALE)

compare_mse(cv2.resize(img1, (355, 500)), cv2.resize(img2, (355, 500)))

compare_ssim(cv2.resize(img1, (355, 500)), cv2.resize(img2, (355, 500)))

MSE returned 4797.232123943662 and SSIM returned 0.2321816144043102.