This answer is for anyone with a large blob storage account containing millions of blobs.

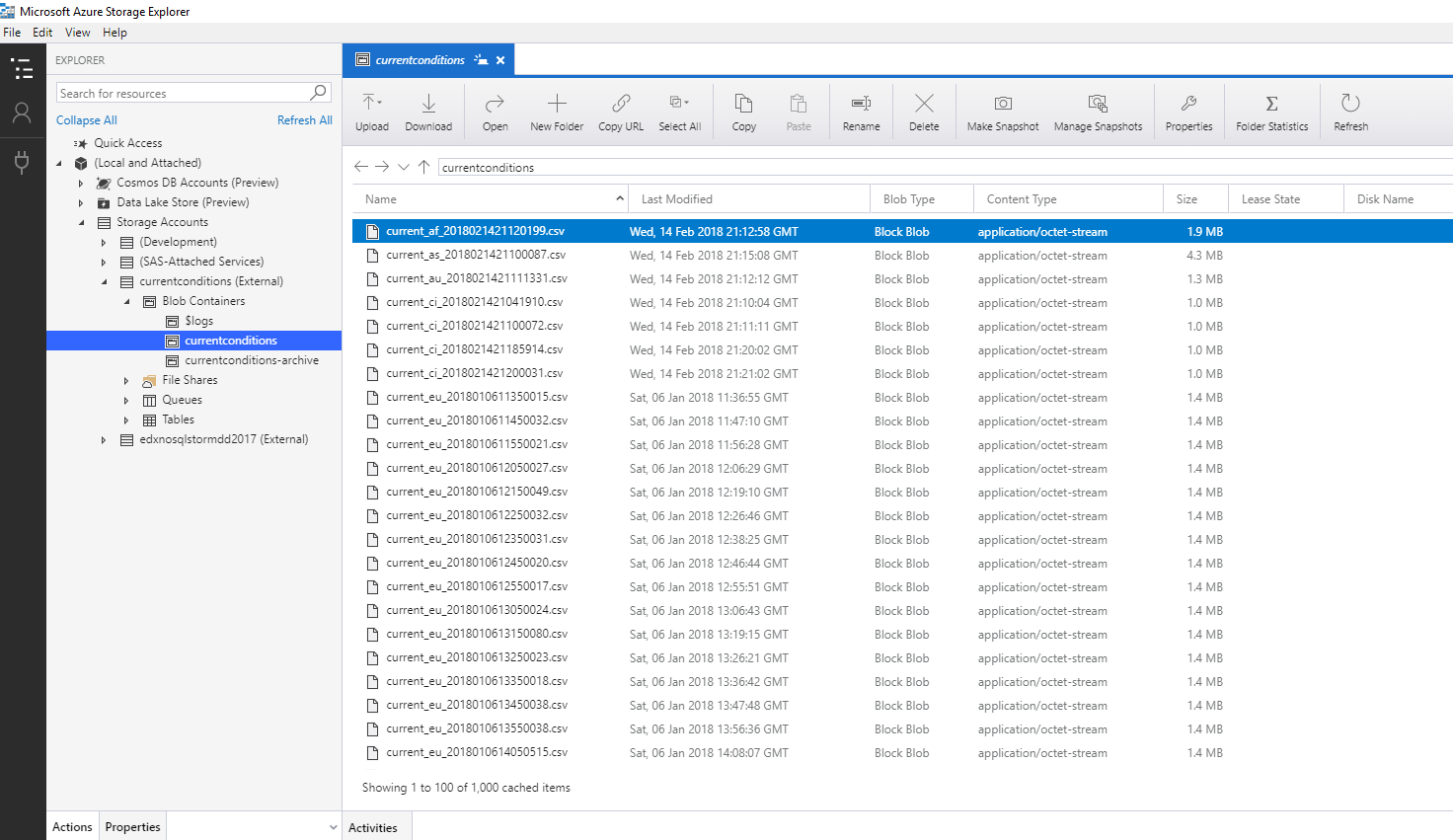

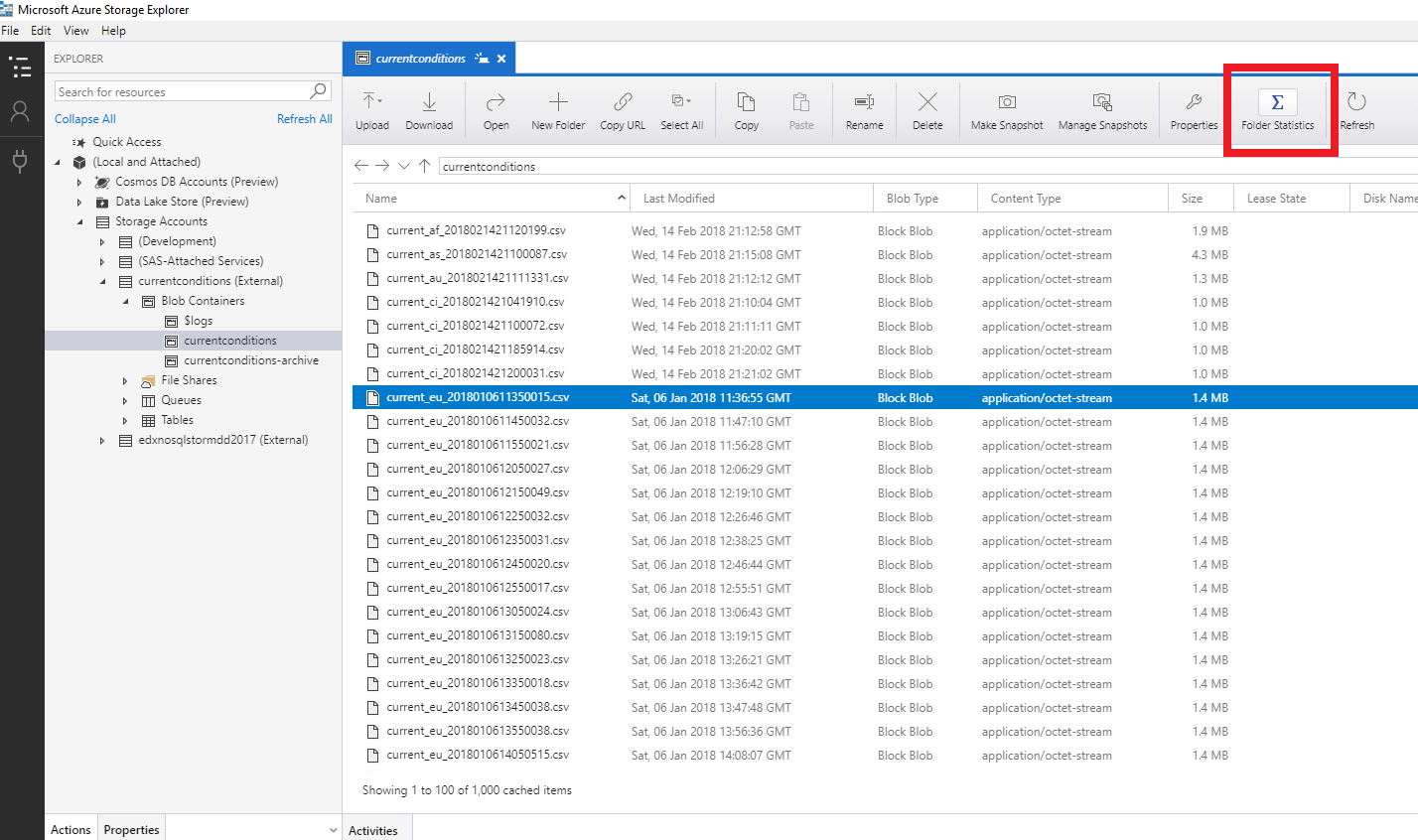

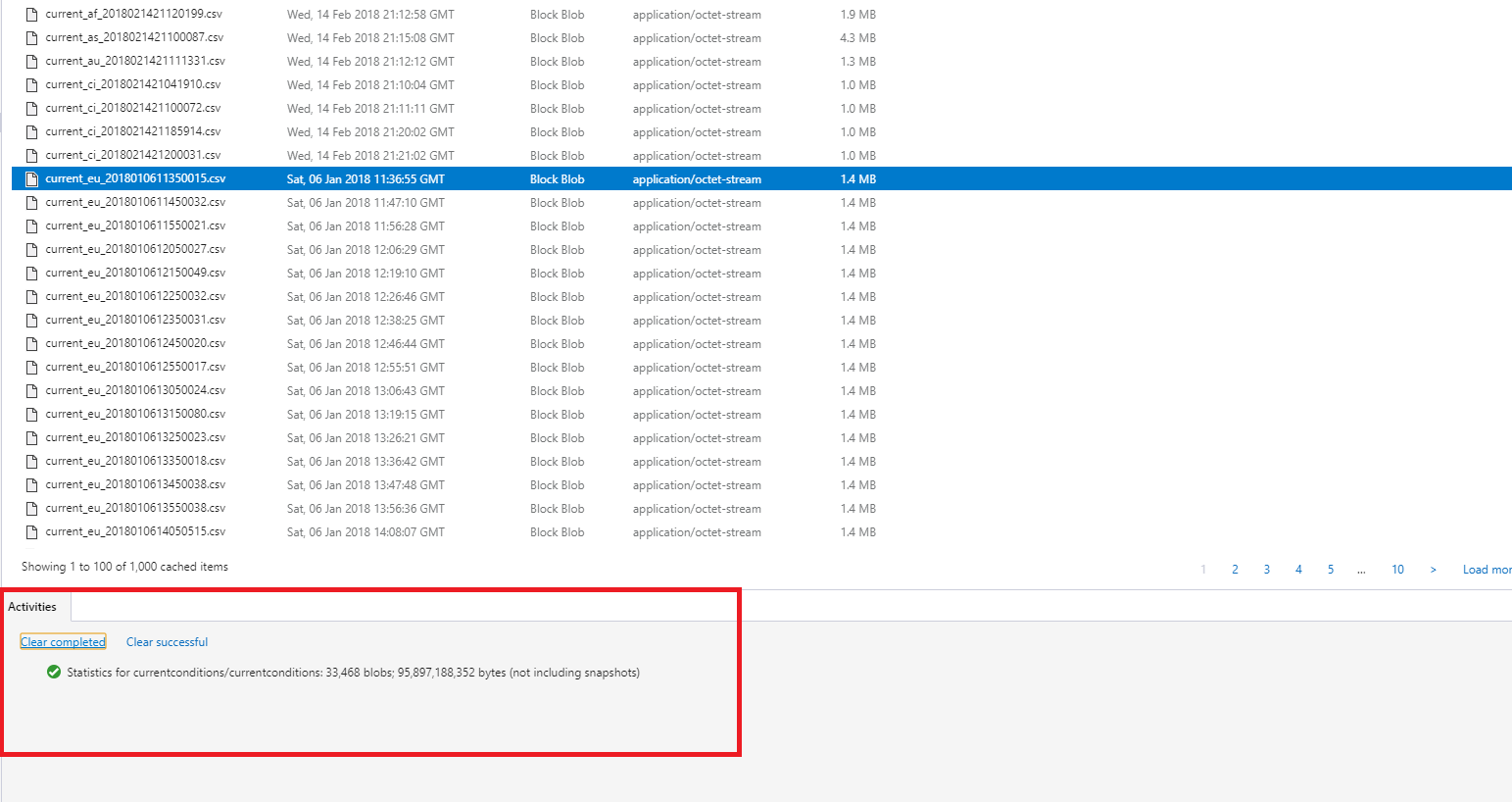

The top-rated answer on this thread is practically unusable for large blob storage accounts. Azure Storage Explorer simply calls the List Blobs API under the hood, which is paginated and returns at most 5,000 results per page. If you have millions of blobs, counting them this way takes a very long time: 10 million blobs, for example, means at least 2,000 sequential requests.
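To show why this scales badly, here is a minimal Python sketch of what a count built on List Blobs does: it walks every page and counts items, one HTTP round trip per page. It assumes the `azure-storage-blob` v12 SDK, where `ContainerClient.list_blobs()` returns a pager with a `by_page()` method. The function name `count_blobs` is hypothetical, and the client is duck-typed so the sketch itself has no hard dependency on the SDK.

```python
def count_blobs(container_client, page_size=5000):
    """Count blobs in one container by walking every List Blobs page.

    `container_client` is expected to behave like
    azure.storage.blob.ContainerClient (assumption: SDK v12).
    Returns (blob_count, page_count); each page is one HTTP request,
    so the cost grows linearly with the number of blobs.
    """
    blob_count = 0
    page_count = 0
    # list_blobs() returns a pager; by_page() yields one iterator per request.
    pages = container_client.list_blobs(results_per_page=page_size).by_page()
    for page in pages:
        page_count += 1
        for _ in page:
            blob_count += 1
    return blob_count, page_count


# Example usage (requires `pip install azure-storage-blob`; values are placeholders):
# from azure.storage.blob import BlobServiceClient
# service = BlobServiceClient.from_connection_string("<connection-string>")
# for container in service.list_containers():
#     count, requests = count_blobs(service.get_container_client(container.name))
#     print(container.name, count, requests)
```

List Blobs works per container, so an account-wide total means repeating this for every container, which only adds to the number of sequential requests.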

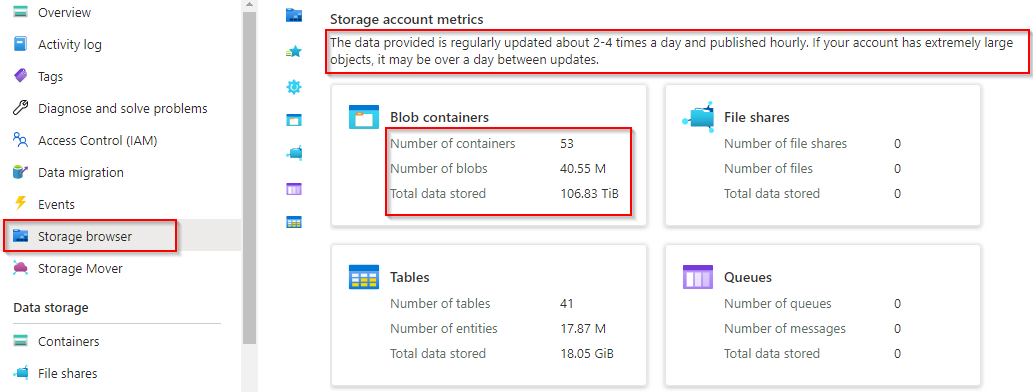

If an approximate value is good enough, the Storage browser in the Azure portal is extremely useful. Note, however, that this value is not very accurate for storage accounts with heavy write/delete activity.


This data should be visible by default. If it isn't, enable the diagnostic metrics under Monitoring -> Diagnostic settings (classic): turn the status on and enable the hour metrics.

If you need more accurate results, the only option is to enable a blob inventory report. The downside is that it runs as a background job, and the report can be generated at most once per day.
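Once an inventory run finishes, you may not even need to scan the report itself. To my knowledge, each run also writes a manifest JSON file with a `summary` section containing `objectCount` and `totalObjectSize`. Treat those field names as an assumption and check them against a manifest from your own account. The helper name `read_inventory_summary` is hypothetical.

```python
import json
from pathlib import Path


def read_inventory_summary(manifest_path):
    """Return (object_count, total_size_bytes) from an inventory manifest.

    Assumption: the manifest has a top-level "summary" object with
    "objectCount" and "totalObjectSize" fields. A KeyError here means
    your manifest uses a different layout.
    """
    manifest = json.loads(Path(manifest_path).read_text(encoding="utf-8"))
    summary = manifest["summary"]
    return summary["objectCount"], summary["totalObjectSize"]


# Example usage with a manifest downloaded from the inventory destination container:
# count, size = read_inventory_summary("downloaded-manifest.json")
# print(f"{count} blobs, {size} bytes")
```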

Here is the documentation on blob inventory. For large storage accounts, my suggestion is to generate a Parquet report every day and, when you need to inspect it, read it with DBeaver (together with DuckDB), Databricks, or Synapse. Below are a few resources on how to do this.

If you do not want to use an inventory report, here is a PowerShell script that achieves something similar. However, it can take many hours to return the blob count on large storage accounts.