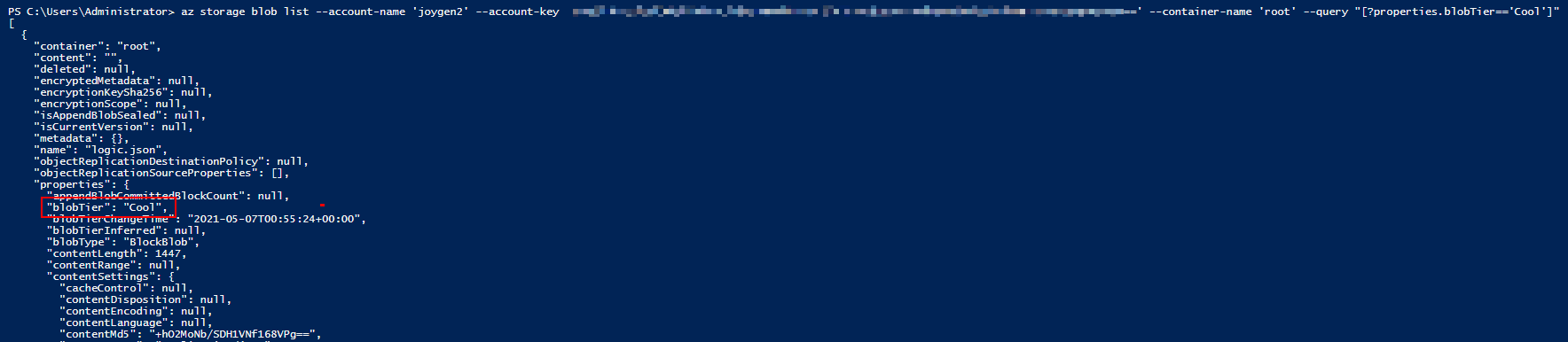

The accepted answer works perfectly well. However, if you have a lot of files, the results will be paginated. The CLI returns a `NextMarker` value, which has to be passed to the subsequent call via the `--marker` parameter. With a huge number of files, this has to be scripted with something like PowerShell. Also, `az storage blob list` makes `--container-name` mandatory, which means only one container can be queried at a time.
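For illustration, here is a minimal sketch of that marker loop, written in Python rather than PowerShell. It assumes the `az` CLI is installed and already authenticated, and that with `--show-next-marker` the JSON output ends with an extra element of the form `{"nextMarker": "..."}`; check the output of your CLI version before relying on that shape. The function names and the `fetch_page` parameter are mine, not part of the CLI.

```python
import json
import subprocess


def run_az_page(account, container, marker=None, page_size=5000):
    """Run one `az storage blob list` call and return the parsed JSON list."""
    cmd = [
        "az", "storage", "blob", "list",
        "--account-name", account,
        "--container-name", container,
        "--num-results", str(page_size),
        "--show-next-marker",
        "--output", "json",
    ]
    if marker:
        cmd += ["--marker", marker]
    result = subprocess.run(cmd, capture_output=True, text=True, check=True)
    return json.loads(result.stdout)


def list_all_blobs(account, container, fetch_page=run_az_page):
    """Follow the continuation marker until one container is fully listed."""
    blobs = []
    marker = None
    while True:
        page = fetch_page(account, container, marker)
        marker = None
        for item in page:
            # Assumption: the continuation token arrives as an extra
            # element that carries a "nextMarker" key and no blob "name".
            if "nextMarker" in item and "name" not in item:
                marker = item["nextMarker"]
            else:
                blobs.append(item)
        if not marker:
            return blobs
```

Calling `list_all_blobs("mystorageaccount", "mycontainer")` (placeholder names) would return every blob entry for that one container. To cover several containers you would still have to call it once per container. On Windows you may need to invoke `az.cmd` instead of `az`.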

Blob Inventory

I have a ton of files and many containers, and I found an alternative method that worked best for me. In the storage account's menu in the Azure portal, under Data management, there is an option called Blob Inventory.

This generates a report of all the blobs across all the containers in a storage account. The report can be customized to include the fields of your choice, for example Name, Access Tier, Blob Type, etc. There are also options to filter certain blobs (include and exclude filters).

The report will be generated in CSV or Parquet format and stored in the container of your choice at a daily or weekly frequency. The only downside is that the report can't be generated on-demand (only scheduled).

Further, if you wish to run SQL on the inventory report (CSV/Parquet file), you can use DBeaver to do this.
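If you would rather script the query than use a GUI, a rough alternative is to load the CSV report into an in-memory SQLite database with Python's standard library. This is only a sketch: it assumes the report includes `Name` and `Content-Length` columns and that `Name` starts with the container name followed by `/`. Verify both against the header and rows of your own report, since the fields depend on how the inventory rule was configured.

```python
import csv
import sqlite3


def load_inventory(lines, conn):
    """Load inventory CSV lines (an open file or a list of strings)
    into a SQLite table named `inventory`, one column per CSV header."""
    reader = csv.reader(lines)
    header = next(reader)
    # Quote column names because some contain hyphens.
    columns = ", ".join('"{}"'.format(name.replace('"', '""')) for name in header)
    placeholders = ", ".join("?" for _ in header)
    conn.execute(f"CREATE TABLE inventory ({columns})")
    conn.executemany(
        f"INSERT INTO inventory VALUES ({placeholders})",
        (row for row in reader if row),  # skip blank lines
    )
    conn.commit()


def size_by_container(conn):
    """Return (container, blob_count, total_bytes) rows.

    Assumption: "Name" looks like "<container>/<blob path>".
    """
    query = """
        SELECT substr("Name", 1, instr("Name", '/') - 1) AS container,
               COUNT(*) AS blob_count,
               SUM(CAST("Content-Length" AS INTEGER)) AS total_bytes
        FROM inventory
        GROUP BY container
        ORDER BY container
    """
    return conn.execute(query).fetchall()
```

A typical use would be to open the downloaded report with `open(path, newline="", encoding="utf-8-sig")`, pass the file object to `load_inventory` together with `sqlite3.connect(":memory:")`, and then run `size_by_container` or any other SQL against the `inventory` table.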