According to this post there 3 different ways to get feature importance from Xgboost:

- use built-in feature importance,

- use permutation based importance,

- use shap based importance.

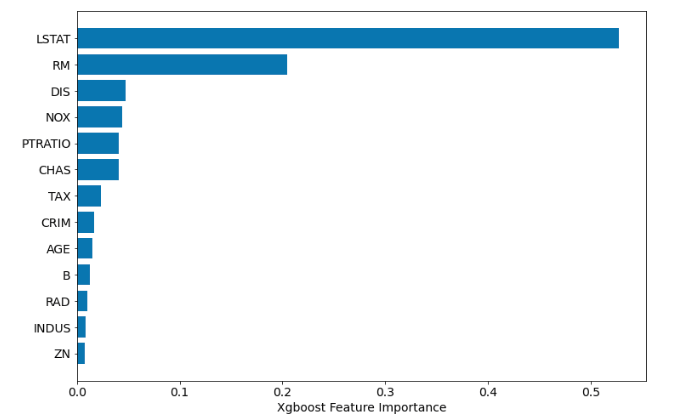

Built-in feature importance

Code example:

xgb = XGBRegressor(n_estimators=100)

xgb.fit(X_train, y_train)

sorted_idx = xgb.feature_importances_.argsort()

plt.barh(boston.feature_names[sorted_idx], xgb.feature_importances_[sorted_idx])

plt.xlabel("Xgboost Feature Importance")

Please be aware of what type of feature importance you are using. There are several types of importance, see the docs. The scikit-learn like API of Xgboost is returning gain importance while get_fscore returns weight type.

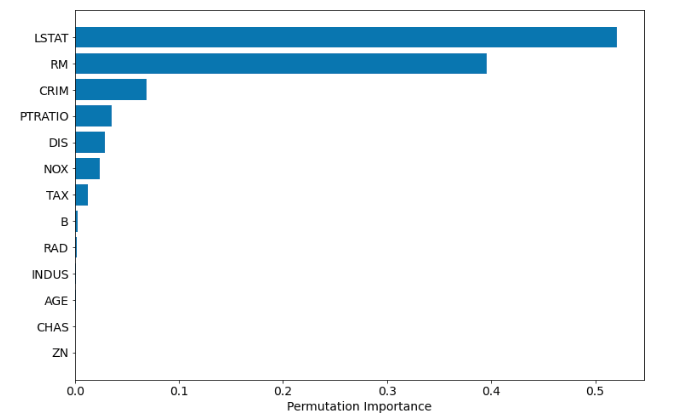

Permutation based importance

perm_importance = permutation_importance(xgb, X_test, y_test)

sorted_idx = perm_importance.importances_mean.argsort()

plt.barh(boston.feature_names[sorted_idx], perm_importance.importances_mean[sorted_idx])

plt.xlabel("Permutation Importance")

This is my preferred way to compute the importance. However, it can fail in case highly colinear features, so be careful! It's using permutation_importance from scikit-learn.

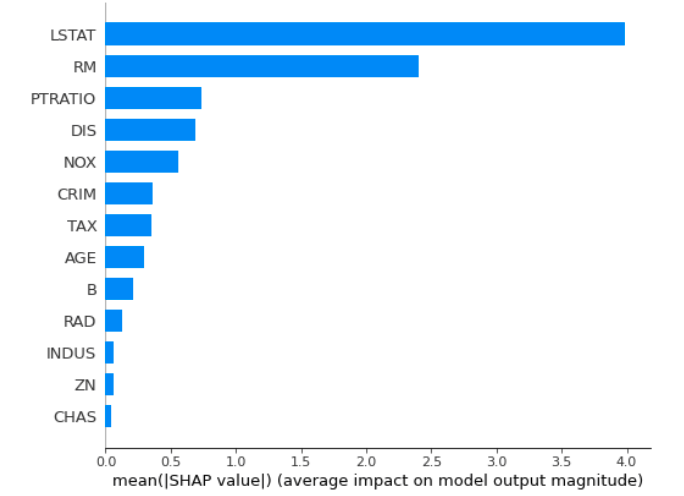

SHAP based importance

explainer = shap.TreeExplainer(xgb)

shap_values = explainer.shap_values(X_test)

shap.summary_plot(shap_values, X_test, plot_type="bar")

To use the above code, you need to have shap package installed.

I was running the example analysis on Boston data (house price regression from scikit-learn). Below 3 feature importance:

Built-in importance

![built in xgboost importance]()

Permutation based importance

![permutation importance]()

SHAP importance

![shap imp]()

All plots are for the same model! As you see, there is a difference in the results. I prefer permutation-based importance because I have a clear picture of which feature impacts the performance of the model (if there is no high collinearity).

bst.feature_names=['foo', 'bar', ...]. – Incumber