It is not required for me to use the PDFBox library, so a solution that uses another library is fine

Camelot and Excalibur

You may want to try Python library Camelot, an open source library for Python. If you are not inclined to write code, you may use the web interface Excalibur created around Camelot. You "upload" the document to a localhost web server, and "download" the result from this localhost server.

Here is an example from using this python code:

import camelot

tables = camelot.read_pdf('foo.pdf', flavor="stream")

tables[0].to_csv('foo.csv')

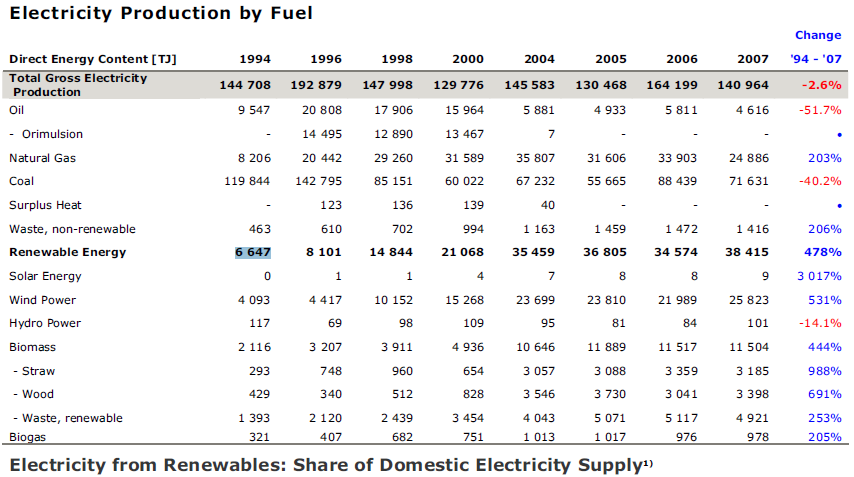

The input is a pdf containing this table:

![PDF-TREX sample]()

Sample table from the PDF-TREX set

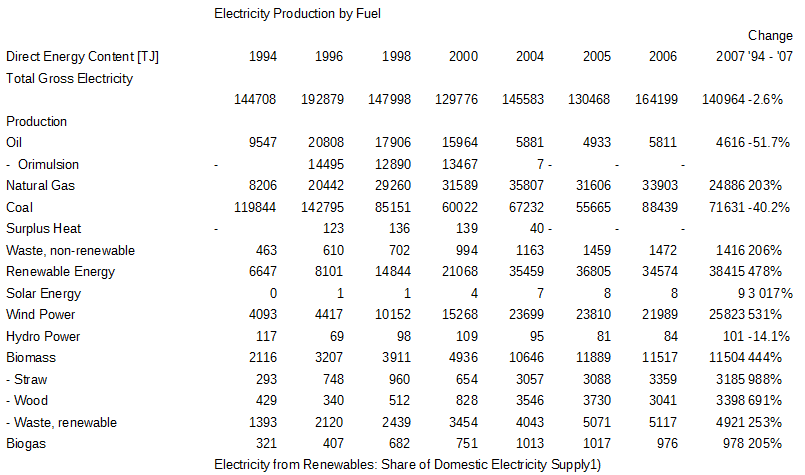

No help is provided to camelot, it is working on its own by looking at pieces of text relative alignment. The result is returned in a csv file:

![PDF table extracted from sample by camelot]()

PDF table extracted from sample by camelot

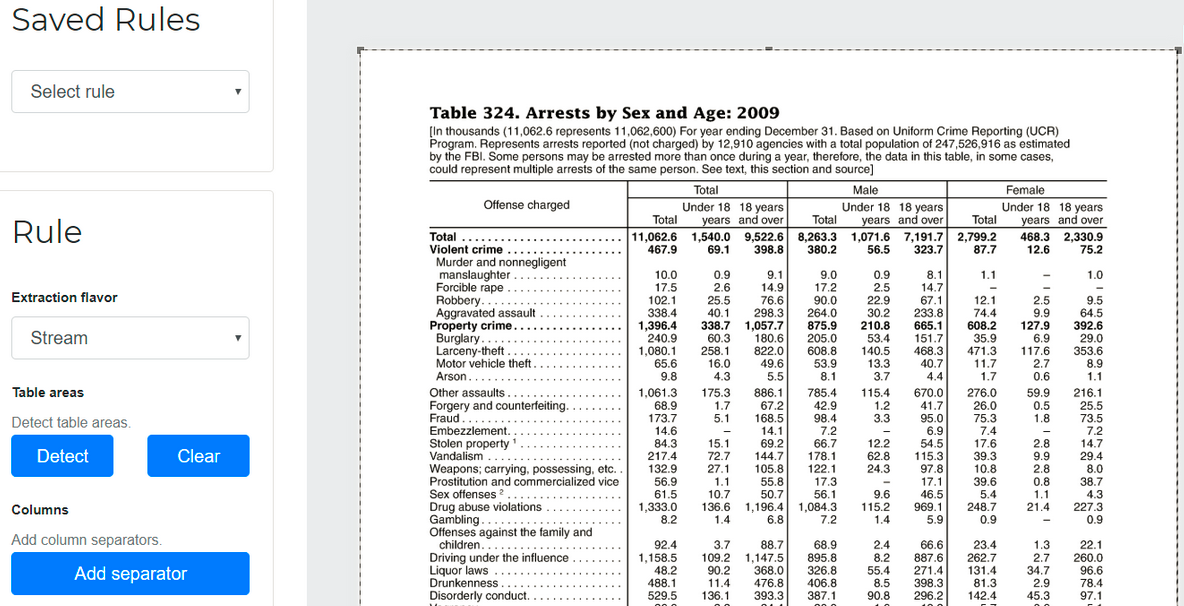

"Rules" can de added to help camelot identify where are fillets in sophisticated tables:

![Rule added to Excalibur]()

Rule added in Excalibur. Source

GitHub:

The two projects are active.

Here is a comparison with other software (with test based on actual documents), Tabula, pdfplumber, pdftables, pdf-table-extract.

I want is to be able to parse the file and know what each parsed number means

You cannot do that automatically, as pdf is not semantically structured.

Book versus document

Pdf "documents" are unstructured from a semantic standpoint (it's like a notepad file), the pdf document gives instructions on where to print a text fragment, unrelated to other fragments of the same section, there is no separation between content (what to print, and whether this is a fragment of a title, a table or a footnote) and the visual representation (font, location, etc). Pdf is an extension of PostScript, which describes a Hello world! page this way:

!PS

/Courier % font

20 selectfont % size

72 500 moveto % current location to print at

(Hello world!) show % add text fragment

showpage % print all on the page

(Wikipedia).

One can imagine what a table looks like with the same instructions.

We could say html is not clearer, however there is a big difference: Html describes the content semantically (title, paragraph, list, table header, table cell, ...) and associates the css to produce a visual form, hence content is fully accessible. In this sense, html is a simplified descendant of sgml which puts constraints to allow data processing:

Markup should describe a document's structure and other attributes

rather than specify the processing that needs to be performed, because

it is less likely to conflict with future developments.

exactly the opposite of PostScript/Pdf. SGML is used in publishing. Pdf doesn't embed this semantical structure, it carries only the css-equivalent associated to plain character strings which may not be complete words or sentences. Pdf is used for closed documents and now for the so-called workflow management.

After having experimented the uncertainty and difficulty in trying to extract data from pdf, it's clear pdf is not at all a solution to preserve a document content for the future (in spite Adobe has obtained from their pairs a pdf standard).

What is actually preserved well is the printed representation, as the pdf was fully dedicated to this aspect when created. Pdf are nearly as dead as printed books.

When reusing the content matters, one must rely again on manual re-entering of data, like from a printed book (possibly trying to do some OCR on it). This is more and more true, as many pdf even prevent the use of copy-paste, introducing multiple spaces between words or produce an unordered characters gibberish when some "optimization" is done for web use.

When the content of the document, not its printed representation, is valuable, then pdf is not the correct format. Even Adobe is unable to recreate perfectly the source of a document from its pdf rendering.

So open data should never be released in pdf format, this limits their use to reading and printing (when allowed), and makes reuse harder or impossible.