The phrase "strongly happens before" is used several times in the C++ draft standard.

For example: Termination [basic.start.term]/5

If the completion of the initialization of an object with static storage duration strongly happens before a call to std::atexit (see , [support.start.term]), the call to the function passed to std::atexit is sequenced before the call to the destructor for the object. If a call to std::atexit strongly happens before the completion of the initialization of an object with static storage duration, the call to the destructor for the object is sequenced before the call to the function passed to std::atexit. If a call to std::atexit strongly happens before another call to std::atexit, the call to the function passed to the second std::atexit call is sequenced before the call to the function passed to the first std::atexit call.

And defined in Data races [intro.races]/12

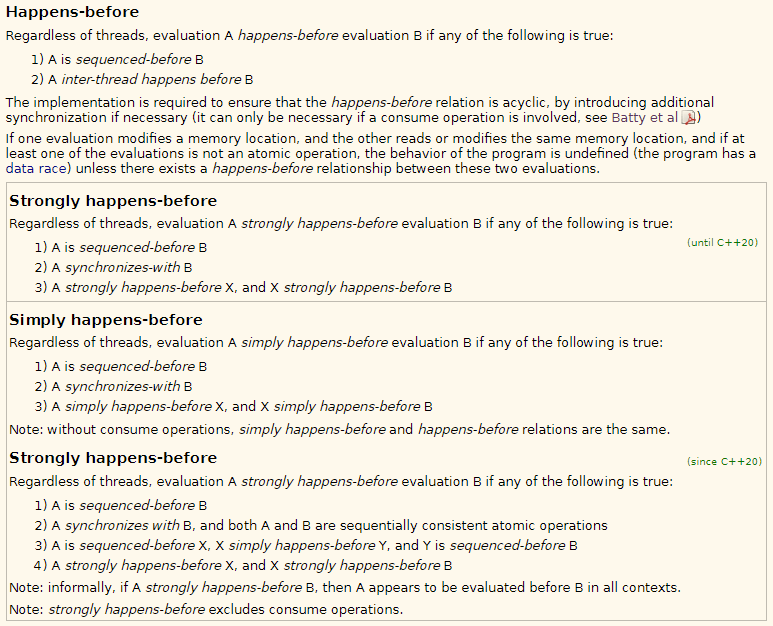

An evaluation A strongly happens before an evaluation D if, either

(12.1) A is sequenced before D, or

(12.2) A synchronizes with D, and both A and D are sequentially consistent atomic operations ([atomics.order]), or

(12.3) there are evaluations B and C such that A is sequenced before B, B simply happens before C, and C is sequenced before D, or

(12.4) there is an evaluation B such that A strongly happens before B, and B strongly happens before D.

[ Note: Informally, if A strongly happens before B, then A appears to be evaluated before B in all contexts. Strongly happens before excludes consume operations. — end note ]

Why was "strongly happens before" introduced? Intuitively, what's its difference and relation with "happens before"?

What does the "A appears to be evaluated before B in all contexts" in the note mean?

(Note: the motivation for this question are Peter Cordes's comments under this answer.)

Additional draft standard quote (thanks to Peter Cordes)

Order and consistency [atomics.order]/4

There is a single total order S on all memory_order::seq_cst operations, including fences, that satisfies the following constraints. First, if A and B are memory_order::seq_cst operations and A strongly happens before B, then A precedes B in S. Second, for every pair of atomic operations A and B on an object M, where A is coherence-ordered before B, the following four conditions are required to be satisfied by S:

(4.1) if A and B are both memory_order::seq_cst operations, then A precedes B in S; and

(4.2) if A is a memory_order::seq_cst operation and B happens before a memory_order::seq_cst fence Y, then A precedes Y in S; and

(4.3) if a memory_order::seq_cst fence X happens before A and B is a memory_order::seq_cst operation, then X precedes B in S; and

(4.4) if a memory_order::seq_cst fence X happens before A and B happens before a memory_order::seq_cst fence Y, then X precedes Y in S.

seq_cst, in Atomics 31.4 Order and consistency: 4. That's not in the C++17 n4659 standard, where 32.4 - 3 defines the existence of a single total order of seq_cst ops consistent with the “happens before” order and modification orders for all affected locations; the "strongly" was added in a later draft. – Hubsheratexit()in one thread andexit()in another, it’s not enough for initializers to carry only a consume-based dependency only because the results then differ from ifexit()was invoked by the same thread. An older answer of mine concerned this difference. – Overthrowexit(). Any thread can kill the entire program by exiting, or the main thread can exit byreturn-ing. It results in the calling ofatexit()handlers and the death of all threads whatever they were doing. – Overthrowexit(3). The C library does some work like flushing buffers and executing atexit handlers. These handlers have to be carefully coded to avoid breaks, but they can accomplish useful things like deleting temporaries and logging stuff. Then, when a process has truly exited, the Linux kernel cleans up its threads and open file descriptors. – Overthrow