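The problem is that the upper halves of the AVX (YMM) registers are left dirty after calling omp_get_wtime(). This is a problem particularly on Skylake processors, where non-VEX-encoded SSE instructions executed while the upper state is dirty carry a false dependency on the full register, which slows down the SSE code.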

I first read about this problem here. Since then, other people have observed it too: here and here.

Using gdb, I found that omp_get_wtime() calls clock_gettime(). I rewrote my code to call clock_gettime() directly and I see the same problem:

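// vzeroupper clears the upper halves of all YMM registers (AVX CPUs only)
void fix_avx() { __asm__ __volatile__ ( "vzeroupper" : : : ); }
// no-op for CPUs without AVX, where vzeroupper is not available
void fix_sse() { }
// selected once at startup by dispatch()
void (*fix)();

double get_wtime() {
    struct timespec time;
    clock_gettime(CLOCK_MONOTONIC, &time);
#ifndef __AVX__
    // non-AVX build: clean the upper state the library call may leave behind
    fix();
#endif
    return time.tv_sec + 1E-9*time.tv_nsec;
}

void dispatch() {
    fix = fix_sse;
#if defined(__INTEL_COMPILER)
    if (_may_i_use_cpu_feature (_FEATURE_AVX)) fix = fix_avx;
#else
#if defined(__GNUC__) && !defined(__clang__)
    __builtin_cpu_init();
#endif
    if(__builtin_cpu_supports("avx")) fix = fix_avx;
#endif
}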
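As an alternative to the inline-asm fix_avx above, the same instruction is available as the _mm256_zeroupper() intrinsic from <immintrin.h>. Putting it in a function marked __attribute__((target("avx"))) lets the rest of the file stay SSE-only. This is a sketch assuming GCC 4.9 or later (or a Clang recent enough to accept intrinsics inside target-attributed functions); the names zeroupper_avx, zeroupper_none and zeroupper_dispatch are hypothetical.

```c
#include <immintrin.h>

/* Only this function is compiled with AVX enabled, so vzeroupper is
   emitted here without building the whole file with -mavx. */
static __attribute__((target("avx"))) void zeroupper_avx(void) {
    _mm256_zeroupper();
}

/* No-op for CPUs without AVX, where vzeroupper would be illegal. */
static void zeroupper_none(void) { }

/* Function pointer chosen once at startup, like fix in the post. */
static void (*zeroupper)(void) = zeroupper_none;

void zeroupper_dispatch(void) {
#if defined(__GNUC__) && !defined(__clang__)
    __builtin_cpu_init();
#endif
    if (__builtin_cpu_supports("avx"))
        zeroupper = zeroupper_avx;
}
```

Call zeroupper_dispatch() once at startup and then zeroupper() wherever the code above calls fix().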

Stepping through the code with gdb, I see that the first call to clock_gettime goes through _dl_runtime_resolve_avx(). Based on this comment, I believe the problem is in this function. It appears to be called only the first time clock_gettime is called.
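One way to check the dirty-upper diagnosis directly, rather than only through the bandwidth number, is to read the XINUSE bitmap with XGETBV (ECX=1) right after clock_gettime. This sketch is not from the post: the function name avx_upper_in_use is hypothetical, and it assumes x86-64 with the <cpuid.h> header shipped by GCC or Clang. Bit 2 of XINUSE covers the upper halves of the YMM registers; per Intel's documentation, a 0 means that state is in its initial (clean) configuration, while a 1 only means it may be in use.

```c
#include <cpuid.h>

/* Hypothetical diagnostic (not from the post).
   Returns 0 if XINUSE reports the upper YMM halves as clean,
   1 if it reports them as possibly in use (dirty),
   -1 if XGETBV with ECX=1 is not available on this CPU/OS. */
int avx_upper_in_use(void) {
    unsigned int eax, ebx, ecx, edx;

    /* CPUID.1:ECX.OSXSAVE[bit 27]: the OS has enabled XGETBV. */
    if (!__get_cpuid(1, &eax, &ebx, &ecx, &edx) || !(ecx & (1u << 27)))
        return -1;

    /* CPUID.(EAX=0DH,ECX=1):EAX[bit 2]: XGETBV with ECX=1 is supported. */
    if (__get_cpuid_max(0, 0) < 0xD)
        return -1;
    __cpuid_count(0xD, 1, eax, ebx, ecx, edx);
    if (!(eax & (1u << 2)))
        return -1;

    /* XGETBV with ECX=1 returns XINUSE in EDX:EAX; bit 2 is the
       AVX (upper YMM) state component. */
    unsigned int lo, hi;
    __asm__ __volatile__("xgetbv" : "=a"(lo), "=d"(hi) : "c"(1));
    (void)hi;
    return (int)((lo >> 2) & 1u);
}
```

To use it, call avx_upper_in_use() immediately after clock_gettime(), with no other library calls in between, since those could change the state being measured.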

With GCC, the problem goes away if I put __asm__ __volatile__ ( "vzeroupper" : : : ); after the first call to clock_gettime. With Clang, however (using clang -O2 -fno-vectorize, since Clang vectorizes even at -O2), it only goes away if I put it after every call to clock_gettime.

Here is the code I used to test this (with GCC 6.3 and Clang 3.8):

#include <string.h>
#include <stdio.h>
#include <x86intrin.h>
#include <time.h>

void fix_avx() { __asm__ __volatile__ ( "vzeroupper" : : : ); }
void fix_sse() { }
void (*fix)();

double get_wtime() {
    struct timespec time;
    clock_gettime(CLOCK_MONOTONIC, &time);
#ifndef __AVX__
    fix();
#endif
    return time.tv_sec + 1E-9*time.tv_nsec;
}

void dispatch() {
    fix = fix_sse;
#if defined(__INTEL_COMPILER)
    if (_may_i_use_cpu_feature (_FEATURE_AVX)) fix = fix_avx;
#else
#if defined(__GNUC__) && !defined(__clang__)
    __builtin_cpu_init();
#endif
    if(__builtin_cpu_supports("avx")) fix = fix_avx;
#endif
}

#define N 1000000
#define R 1000

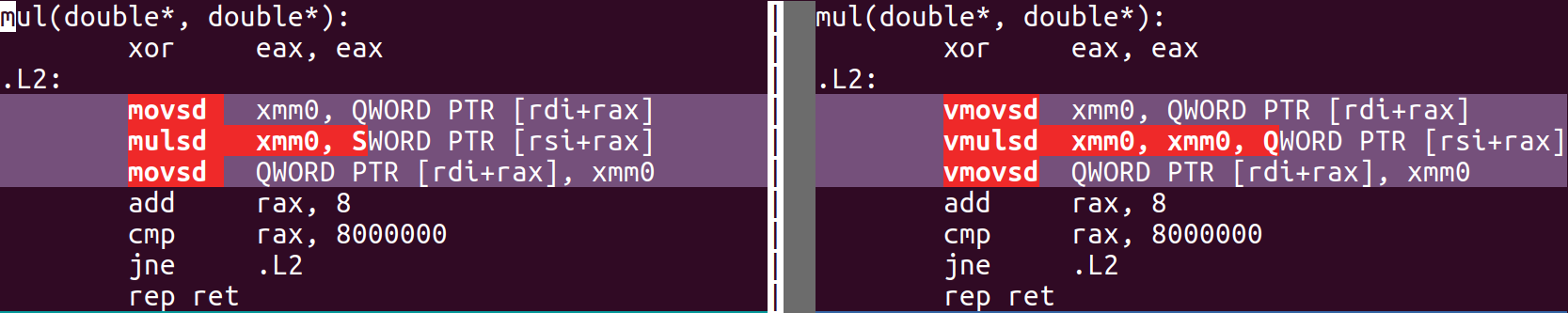

void mul(double *a, double *b) {
    for (int i = 0; i<N; i++) a[i] *= b[i];
}

int main() {
    dispatch();
    // data moved in GiB: each of the R passes reads a and b and writes a
    const double mem = 3.0*sizeof(double)*N*R/1024/1024/1024;
    const double maxbw = 34.1;
    double *a = (double*)_mm_malloc(sizeof *a * N, 32);
    double *b = (double*)_mm_malloc(sizeof *b * N, 32);
    //b must be initialized to get the correct bandwidth!!!
    memset(a, 1, sizeof *a * N);
    memset(b, 1, sizeof *b * N);
    double dtime;
    //dtime = get_wtime(); // call once to fix GCC
    //printf("%f\n", dtime);
    //fix = fix_sse;
    dtime = -get_wtime();
    for(int i=0; i<R; i++) mul(a,b);
    dtime += get_wtime();
    printf("time %.2f s, %.1f GB/s, efficiency %.1f%%\n", dtime, mem/dtime, 100*mem/dtime/maxbw);
    _mm_free(a), _mm_free(b);
}
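For reference, here is the arithmetic behind mem: each of the R passes of mul reads a and b and writes a, i.e. three streams of N eight-byte doubles. With N = 10^6, R = 10^3 and 1 GiB = 2^30 bytes:

```latex
\text{mem} = \frac{3 \cdot 8 \cdot 10^{6} \cdot 10^{3}\ \text{B}}{2^{30}\ \text{B/GiB}}
           = \frac{2.4 \times 10^{10}}{1\,073\,741\,824}\ \text{GiB}
           \approx 22.35\ \text{GiB}
```

If the expression is evaluated entirely in integer arithmetic (leading constant 3 rather than 3.0), the divisions truncate this to 22, about 1.6% low. Note also that mem is in GiB while maxbw = 34.1 is presumably a decimal GB/s figure; if so, the printed efficiency is scaled by a further factor of 10^9/2^30 ≈ 0.93.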

If I disable lazy function-call resolution with -z now (e.g. clang -O2 -fno-vectorize -z now foo.c), then Clang, like GCC, only needs __asm__ __volatile__ ( "vzeroupper" : : : ); after the first call to clock_gettime.

I expected that with -z now I would only need __asm__ __volatile__ ( "vzeroupper" : : : ); at the start of main(), but I still need it after the first call to clock_gettime.
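Since one vzeroupper after the first clock_gettime call was enough with GCC (and with Clang when linking with -z now), that workaround can be packaged as a one-time warm-up at program start, similar to the commented-out lines in main. This is a minimal sketch assuming GCC or Clang on x86-64 Linux; timer_warm_up is a hypothetical name. Per the observations above, it is not sufficient for Clang builds without -z now, where vzeroupper was needed after every call.

```c
#define _POSIX_C_SOURCE 199309L
#include <time.h>

/* Hypothetical helper: make the first clock_gettime call up front, so
   whatever that first call leaves in the upper YMM halves is cleaned
   once here. Returns the first reading in seconds. */
double timer_warm_up(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
#if defined(__GNUC__) && !defined(__clang__)
    __builtin_cpu_init();
#endif
    /* vzeroupper is only legal on CPUs with AVX. */
    if (__builtin_cpu_supports("avx"))
        __asm__ __volatile__("vzeroupper");
    return ts.tv_sec + 1e-9 * ts.tv_nsec;
}
```

Calling timer_warm_up() once right after dispatch() would replace the commented-out "call once to fix GCC" lines.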

__asm__ __volatile__ ( "vzeroupper" : : : ); right after the calls to omp_get_wtime() fixes it. – Foss

… vzeroupper as well. Just insert a vzeroupper after each library call if you want to be 100% sure to avoid this problem on Skylake with non-VEX-encoded SSE code. – Forespent

#ifndef __AVX__ (newline) if (_may_i_use_cpu_feature (_FEATURE_AVX)) __asm__ __volatile__ ( "vzeroupper" : : : ); (newline) #endif (untested). Maybe it works?? This only inserts a vzeroupper if the source was compiled without AVX, but only executes the vzeroupper if the system supports it. – Forespent

omp_get_wtime() calls gettimeofday or some other glibc function. I think the problem is that the first time it is called it uses a CPU dispatcher, and this leaves the state dirty. I only need to use vzeroupper after the first call to omp_get_wtime() to fix the problem. Somebody else found the problem in _dl_runtime_resolve_avx(). That looks like some kind of dispatcher to me. I can step through it with gdb (if I can figure out how to use it) to find out. – Foss

omp_get_wtime calls clock_gettime, and clock_gettime calls _dl_runtime_resolve_avx. My guess is that this is where the problem is. – Foss

vzeroupper is only needed after the first library call. – Forespent

… clock_gettime. Clang even gets a higher bandwidth for the scalar operation. – Foss

… -O2 -fno-vectorize – Foss

… __asm__ __volatile__ ( "vzeroupper" : : : ); after every call to clock_gettime, but not with GCC. With GCC I only have to do it once. – Foss
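The untested suggestion in the comments relies on the Intel-only _may_i_use_cpu_feature. A GCC/Clang counterpart can be sketched with __builtin_cpu_supports; the macro name VZEROUPPER_AFTER_CALL and the wrapper wtime_clean are hypothetical. As in the suggestion, the vzeroupper is compiled in only when the file is built without AVX and executed only on CPUs that support it. Issuing it after every call also covers the Clang case above, where once was not enough.

```c
#define _POSIX_C_SOURCE 199309L
#include <time.h>

/* Compiled in only for non-AVX builds; executed only on AVX-capable CPUs.
   Builds with -mavx emit VEX-encoded code, so they need no manual fix. */
#ifndef __AVX__
#define VZEROUPPER_AFTER_CALL()                      \
    do {                                             \
        if (__builtin_cpu_supports("avx"))           \
            __asm__ __volatile__("vzeroupper");      \
    } while (0)
#else
#define VZEROUPPER_AFTER_CALL() do { } while (0)
#endif

/* Timer that cleans the upper YMM state after every clock_gettime call. */
double wtime_clean(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    VZEROUPPER_AFTER_CALL();
    return ts.tv_sec + 1e-9 * ts.tv_nsec;
}
```

The trade-off is a cheap CPU-feature check on every call instead of a one-time function-pointer dispatch like fix in the post.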