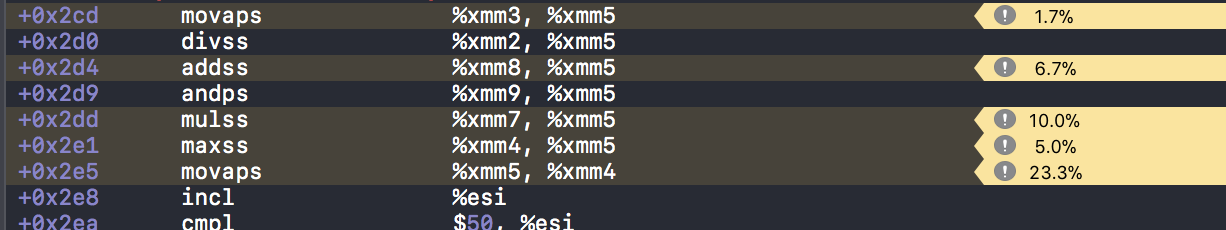

First of all, it's possible that some counts that really belong to divss are being charged to later instructions, which is called a "skid". (Also see the rest of that comment thread for some more details.) Presumably Xcode is like Linux perf, and uses the fixed cpu_clk_unhalted.thread counter for cycles instead of one of the programmable counters. This is not a "precise" event (PEBS), so skids are possible. As @BeeOnRope points out, you can use a PEBS event that ticks once per cycle (like UOPS_RETIRED < 16) as a PEBS substitute for the fixed cycles counter, removing some of the dependence on interrupt behaviour.

But the way counters fundamentally work for pipelined / out-of-order execution also explains most of what you're seeing. Or it might; you didn't show the complete loop so we can't simulate the code on a simple pipeline model like IACA does, or by hand using hardware guides like http://agner.org/optimize/ and Intel's optimization manual. (And you haven't even specified what microarchitecture you have. I guess it's some member of Intel Sandybridge-family on a Mac).

Counts for cycles are typically charged to the instruction that's waiting for the result, not usually the instruction that's slow to produce the result. Pipelined CPUs don't stall until you try to read a result that isn't ready yet.

Out-of-order execution massively complicates this, but it's still generally true when there's one really slow instruction, like a load that often misses in cache. When the cycles counter overflows (triggering an interrupt), there are many instruction in flight, but only one can be the RIP associated with that performance-counter event. It's also the RIP where execution will resume after the interrupt.

So what happens when an interrupt is raised? See Andy Glew's answer about that, which explains the internals of perf-counter interrupts in the Intel P6 microarchitecture's pipeline, and why (before PEBS) they were always delayed. Sandybridge-family is similar to P6 for this.

I think a reasonable mental model for perf-counter interrupts on Intel CPUs is that it discards any uops that haven't yet been dispatched to an execution unit. But ALU uops that have been dispatched already go through the pipeline to retirement (if there aren't any younger uops that got discarded) instead of being aborted, which makes sense because the maximum extra latency is ~16 cycles for sqrtpd, and flushing the store queue can easily take longer than that. (Pending stores that have already retired can't be rolled back). IDK about loads/stores that haven't retired; at least the loads are probably discarded.

I'm basing this guess on the fact that it's easy to construct loops that don't show any counts for divss when the CPU is sometimes waiting for it to produce its outputs. If it was discarded without retiring, it would be the next instruction when resuming the interrupt, so (other than skids) you'd see lots of counts for it.

Thus, the distribution of cycles counts shows you which instructions spend the most time being the oldest not-yet-dispatched instruction in the scheduler. (Or in case of front-end stalls, which instructions the CPU is stalled trying to fetch / decode / issue). Remember, this usually means it shows you the instructions that are waiting for inputs, not the instructions that are slow to produce them.

(Hmm, this might not be right, and I haven't tested this much. I usually use perf stat to look at overall counts for a whole loop in a microbenchmark, not statistical profiles with perf record. addss and mulss are higher latency than andps, so you'd expect andps to get counts waiting for its xmm5 input if my proposed model was right.)

Anyway, the general problem is, with multiple instructions in flight at once, which one does the HW "blame" when the cycles counter wraps around?

Note that divss is slow to produce the result, but is only a single-uop instruction (unlike integer div which is microcoded on AMD and Intel). If you don't bottleneck on its latency or its not-fully-pipelined throughput, it's not slower than mulss because it can overlap with surrounding code just as well.

(divss / divps is not fully pipelined. On Haswell for example, an independent divps can start every 7 cycles. But each only takes 10-13 cycles to produce its result. All other execution units are fully pipelined; able to start a new operation on independent data every cycle.)

Consider a large loop that bottlenecks on throughput, not latency of any loop-carried dependency, and only needs divss to run once per 20 FP instructions. Using divss by a constant instead of mulss with the reciprocal constant should make (nearly) no difference in performance. (In practice out-of-order scheduling isn't perfect, and longer dependency chains hurt some even when not loop-carried, because they require more instructions to be in flight to hide all that latency and sustain max throughput. i.e. for the out-of-order core to find the instruction-level parallelism.)

Anyway, the point here is that divss is a single uop and it makes sense for it not to get many counts for the cycles event, depending on the surrounding code.

You see the same effect with a cache-miss load: the load itself mostly only gets counts if it has to wait for the registers in the addressing mode, and the first instruction in the dependency chain that uses the loaded data gets a lot of counts.

What your profile result might be telling us:

The divss isn't having to wait for its inputs to be ready. (The movaps %xmm3, %xmm5 before the divss sometimes takes some cycles, but the divss never does.)

We may come close to bottlenecking on the throughput of divss

The dependency chain involving xmm5 after divss is getting some counts. Out-of-order execution has to work to keep multiple independent iterations of that in flight at once.

The maxss / movaps loop-carried dependency chain may be a significant bottleneck. (Especially if you're on Skylake where divss throughput is one per 3 clocks, but maxss latency is 4 cycles. And resource conflicts from competition for ports 0 and 1 will delay maxss.)

The high counts for movaps might be due to it following maxss, forming the only loop-carried dependency in the part of the loop you show. So it's plausible that maxss really is slow to produce results. But if it really was a loop-carried dep chain that was the major bottleneck, you'd expect to see lots of counts on maxss itself, as it would be waiting for its input from the last iteration.

But maybe mov-elimination is "special", and all the counts for some reason get charged to movaps? On Ivybridge and later CPUs, register copies doesn't need an execution unit, but instead are handled in the issue/rename stage of the pipeline.

divpsitself doesn't get any counts because it doesn't have to wait for its input. The counts apply to instructions that have to wait for the slowdivssresult. (But that's not the full explanation; looks like it should bottleneck on divss throughput unless you're on Skylake. And there are lots of counts for instructions later in the chain, not concentrated on the first instruction using thedivssresult.) – Diaconalcyclesyou get in a small loop. Oh, didn't realize the bounty was expiring so soon, yeah I guess I should get on that. – Diaconalperf recordprofiles very often. How much of what I made up is just plain wrong? – Diaconal