In a typical Shapley value estimation for a numerical regression task, the marginal contribution of an input feature i to the numerical output can be calculated directly. For input features (age=45, location='NY', sector='CS'), our model may predict a salary of 70k, and with location removed it may predict a salary of 45k. The marginal contribution of the feature location in this particular coalition is therefore 70k - 45k = 25k.
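This marginal contribution is simply the difference between two model outputs. Below is a minimal sketch. It assumes a hypothetical `predict_salary` stand-in that reproduces the two predictions quoted above, with salaries in thousands:

```python
def predict_salary(features: dict) -> float:
    """Hypothetical stand-in for the regression model (salary in thousands).

    Returns 70 when location is present and 45 when it is absent,
    matching the two predictions in the example above.
    """
    return 70.0 if "location" in features else 45.0


def marginal_contribution(model, features: dict, feature: str) -> float:
    """f(coalition with the feature) - f(same coalition without it)."""
    without = {k: v for k, v in features.items() if k != feature}
    return model(features) - model(without)


x = {"age": 45, "location": "NY", "sector": "CS"}
print(marginal_contribution(predict_salary, x, "location"))  # 25.0
```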

From my understanding, the base value is the predicted salary when no features are present, and summing the base value and all feature contributions gives the model's actual salary prediction for the given instance.
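A feature's Shapley value averages such marginal contributions over every coalition of the other features. The base value plus all Shapley values then recovers the full prediction, which is known as the efficiency property. Below is a minimal sketch that enumerates every coalition exactly. It assumes a hypothetical toy `value` function whose numbers are invented to match the 70k and 45k predictions above:

```python
from fractions import Fraction
from itertools import combinations
from math import factorial

FEATURES = ["age", "location", "sector"]


def value(coalition: frozenset) -> int:
    """Hypothetical model output (salary in thousands) when only the
    features in `coalition` are present. Chosen so that f(all) = 70
    and f(all without location) = 45, as in the example above."""
    out = 25                      # base value: no features present
    if "age" in coalition:
        out += 15
    if "location" in coalition:
        out += 20
    if "sector" in coalition:
        out += 5
    if {"location", "sector"} <= coalition:
        out += 5                  # interaction between location and sector
    return out


def shapley_values(features, v):
    """Exact Shapley values by enumerating every coalition (exact fractions)."""
    n = len(features)
    phi = {}
    for i in features:
        others = [f for f in features if f != i]
        total = Fraction(0)
        for size in range(n):
            for subset in combinations(others, size):
                s = frozenset(subset)
                weight = Fraction(factorial(size) * factorial(n - size - 1), factorial(n))
                total += weight * (v(s | {i}) - v(s))
        phi[i] = float(total)
    return phi


phi = shapley_values(FEATURES, value)
base = value(frozenset())
print(phi)                       # {'age': 15.0, 'location': 22.5, 'sector': 7.5}
print(base + sum(phi.values()))  # 70.0, equal to value(frozenset(FEATURES))
```

In this toy model, the Shapley value of location (22.5k) differs from its marginal contribution in the single coalition above (25k). The difference arises because the interaction with sector is split evenly between the two features.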

Extending this to Classification

Say we convert the above problem into a classification problem that uses the same features, where the model output can be interpreted as the probability that the person earns more than 50k. In this case, what is measured is the effect on p(>50k) rather than the effect on the salary itself. If I have followed this correctly, my Shapley values can then be interpreted in units of probability, i.e. as shifts in p(>50k).
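The same computation applies unchanged when the model outputs a probability. Only the units of the Shapley values change. Below is a minimal sketch. It assumes a hypothetical logistic model with invented weights. It also assumes that a "removed" feature simply contributes nothing to the log-odds:

```python
import math
from itertools import combinations
from math import factorial

FEATURES = ["age", "location", "sector"]
# Hypothetical log-odds weights for p(salary > 50k); a "removed" feature
# contributes nothing to the log-odds in this toy model.
WEIGHTS = {"age": 0.5, "location": 1.5, "sector": 0.8}
BIAS = -1.0


def prob_over_50k(coalition) -> float:
    """Sigmoid of the log-odds built from the features that are present."""
    z = BIAS + sum(WEIGHTS[f] for f in coalition)
    return 1.0 / (1.0 + math.exp(-z))


def shapley_values(features, v):
    """Exact Shapley values by enumerating every coalition."""
    n = len(features)
    phi = {}
    for i in features:
        others = [f for f in features if f != i]
        phi[i] = sum(
            factorial(k) * factorial(n - k - 1) / factorial(n)
            * (v(set(s) | {i}) - v(set(s)))
            for k in range(n)
            for s in combinations(others, k)
        )
    return phi


phi = shapley_values(FEATURES, prob_over_50k)
base = prob_over_50k(set())           # p(>50k) with no features present
full = prob_over_50k(set(FEATURES))   # p(>50k) for the full instance
for f, p in phi.items():
    print(f"{f}: {p:+.3f}")           # each value is a shift in p(>50k)
print(math.isclose(base + sum(phi.values()), full))  # True
```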

Extending to Word Embeddings

From the SHAP documentation, we can see that SHAP explainers can calculate feature contributions for Seq2Seq NMT problems.

In my case, I am working on a neural machine translation problem using Marian NMT models through the Hugging Face transformers library. This is a sequence-to-sequence problem, in which the input string is encoded into word embeddings and the output is decoded from such embeddings. My question is how the logic of feature contributions extends to such a problem, both in theory and in practice. I find that the SHAP Partition explainer gives me reliable feature attributions for NMT tasks, but I cannot figure out why.
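In the regression example, "removing" a feature means evaluating the model without it. For text, one common way to remove a word is to replace it with a placeholder. Below is a minimal sketch. It assumes a simplified word-level split and a hypothetical placeholder `MASK = "..."`. Real tokenizers split text into subword units, and the placeholder SHAP actually uses may differ. The sketch also shows that n tokens give 2**n coalitions, which is why exact Shapley values become expensive for longer sentences:

```python
from itertools import combinations

MASK = "..."  # hypothetical placeholder for a "removed" token


def mask_tokens(tokens, keep):
    """Return the input with every token whose index is not in `keep`
    replaced by MASK; one way to 'remove' words from a text input."""
    return " ".join(t if i in keep else MASK for i, t in enumerate(tokens))


tokens = ["Good", "morning", ",", "Patrick"]  # simplified word-level split
n = len(tokens)
coalitions = [set(c) for k in range(n + 1) for c in combinations(range(n), k)]

print(len(coalitions))              # 16 == 2 ** 4
print(mask_tokens(tokens, {1}))     # ... morning ... ...
print(mask_tokens(tokens, {0, 1}))  # Good morning ... ...
```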

More succinctly:

How are feature contributions calculated using the SHAP library for models that typically output a list of word embeddings?

Brief Example

To reproduce the SHAP values I mention, run the MRE below.

```python
import shap
from transformers import MarianMTModel, MarianTokenizer


class NMTModel:
    def __init__(self, source, target):
        model_name = "Helsinki-NLP/opus-mt-{}-{}".format(source, target)
        # The tokenizer converts raw text into token IDs the network can
        # consume, as it cannot take raw text (uses the sentencepiece library)
        self.tokenizer = MarianTokenizer.from_pretrained(model_name)
        # Load the pretrained translation model
        self.model = MarianMTModel.from_pretrained(model_name)

    def translate(self, src):
        # Encode: convert the text to a format suitable for the network
        encode_src = self.tokenizer(src, return_tensors="pt", padding=True)
        # Feed forward through the NMT model
        translation = self.model.generate(**encode_src)
        # Decode: convert the generated token IDs back to regular strings
        decode_target = [self.tokenizer.decode(t, skip_special_tokens=True) for t in translation]
        return decode_target


nmt_model = NMTModel("en", "de")

# Create a SHAP Partition explainer; the tokenizer is passed as the masker
exp_partition = shap.Explainer(
    model=nmt_model.model,
    masker=nmt_model.tokenizer,
    algorithm="partition",
)

# Compute the SHAP estimates
values_partition = exp_partition(["Good morning, Patrick"])

# Plot the SHAP estimates
shap.plots.text(values_partition)
```

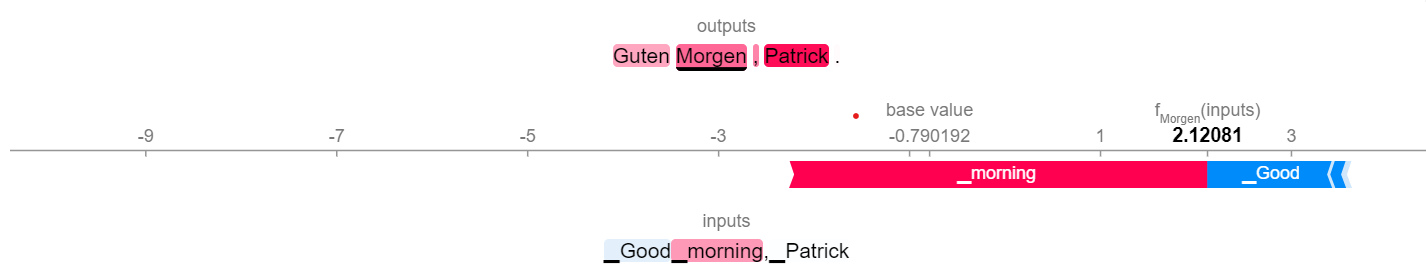

In the output we see the contribution of each input token in the English sentence "Good morning, Patrick" to the output token "Morgen". The explainer has correctly identified "morning" as the most important feature, since "Morgen" is its German translation. However, I am unable to interpret the actual Shapley values shown here. Where do they come from, and how have the contributions been calculated?

My Thoughts

I found it difficult to find the answer by exploring the SHAP repository. My best guess is that the numerical output of the corresponding output unit in the neural network (the logit) is used. However, that raises the question of whether the range of the word embeddings influences feature importance. For example, suppose the word “bring” has a word embedding of 500 and “brought” has one of 6512. Is the marginal contribution then calculated as before, as though the numerical distance between them mattered?
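Suppose, as guessed above, that the explained quantity is a per-token score derived from the output logits. In that case the token's ID only selects which entry of the output vector is read, and the ID is never used as a magnitude. Below is a minimal sketch. It assumes the explained score is the log-odds of one target token under a softmax, and it uses invented logits for a hypothetical four-entry vocabulary:

```python
import math


def token_log_odds(logits, token_id):
    """Log-odds log(p / (1 - p)) of one vocabulary entry, where p is the
    softmax probability of `token_id` under the given output logits."""
    m = max(logits)                          # subtract max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    p = exps[token_id] / sum(exps)
    return math.log(p / (1.0 - p))


# Hypothetical 4-entry vocabulary with invented logits.
logits = [2.0, 0.5, -1.0, 0.5]
score = token_log_odds(logits, 0)

# Relabel the vocabulary by reversing it: the same token now has ID 3.
relabelled = list(reversed(logits))
print(math.isclose(score, token_log_odds(relabelled, 3)))  # True
```

Relabelling the vocabulary leaves the score unchanged. So in this sketch, the numerical distance between IDs such as 500 and 6512 plays no role.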

I would really appreciate any clarification and hope my question has been clearly expressed.