I have some vertex data. Positions, normals, texture coordinates. I probably loaded it from a .obj file or some other format. Maybe I'm drawing a cube. But each piece of vertex data has its own index. Can I render this mesh data using OpenGL/Direct3D?

In the most general sense, no. OpenGL and Direct3D only allow one index per vertex; the index fetches from each stream of vertex data. Therefore, every unique combination of components must have its own separate index.

So if you have a cube, where each face has its own normal, you will need to replicate the position and normal data a lot. You will need 24 positions and 24 normals, even though the cube will only have 8 unique positions and 6 unique normals.

Your best bet is to simply accept that your data will be larger. A great many model formats use multiple indices; you will need to fix up this vertex data before you can render with it. Many mesh loading tools, such as the Open Asset Import Library (Assimp), will perform this fix-up for you.
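As a rough illustration of what that fix-up involves, here is a minimal Python sketch. The function name `unify_indices` and the data layout (one tuple of indices per triangle corner) are assumptions made for this example, not the API of any particular loader. It gives each unique combination of indices one slot in the output arrays and emits a single index buffer.

```python
def unify_indices(streams, corners):
    """Collapse per-attribute indices into a single index per vertex.

    streams: list of attribute arrays, e.g. [positions, normals].
    corners: one tuple of indices per triangle corner, one index per stream.
    Returns (new_streams, indices); every unique index tuple gets one slot.
    """
    remap = {}                            # index tuple -> new single index
    new_streams = [[] for _ in streams]
    indices = []
    for corner in corners:
        if corner not in remap:
            remap[corner] = len(remap)
            for out, stream, i in zip(new_streams, streams, corner):
                out.append(stream[i])
        indices.append(remap[corner])
    return new_streams, indices


# Unit cube: 8 positions (index = x + 2*y + 4*z) and 6 face normals.
positions = [(x, y, z) for z in (0, 1) for y in (0, 1) for x in (0, 1)]
normals = [(0, 0, 1), (0, 0, -1), (1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0)]
quads = [(4, 5, 7, 6), (0, 2, 3, 1), (1, 3, 7, 5),
         (0, 4, 6, 2), (2, 6, 7, 3), (0, 1, 5, 4)]

corners = []
for n, (a, b, c, d) in enumerate(quads):
    # Two triangles per quad; every corner pairs a position index with normal n.
    corners += [(a, n), (b, n), (c, n), (a, n), (c, n), (d, n)]

(flat_positions, flat_normals), indices = unify_indices([positions, normals], corners)
print(len(flat_positions), len(flat_normals), len(indices))  # 24 24 36
```

For the cube this produces the 24 positions and 24 normals mentioned above, addressed by one 36-entry index list.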

It should also be noted that most meshes are not cubes. Most meshes are smooth across the vast majority of vertices, only occasionally having different normals/texture coordinates/etc. So while this often comes up for simple geometric shapes, real models rarely have substantial amounts of vertex duplication.

GL 3.x and D3D10

For D3D10/OpenGL 3.x-class hardware, it is possible to avoid performing fixup and use multiple indexed attributes directly. However, be advised that this will likely decrease rendering performance.

The following discussion will use the OpenGL terminology, but Direct3D 10 and above have equivalent functionality.

The idea is to manually access the different vertex attributes from the vertex shader. Instead of sending the vertex attributes directly, the attributes that are passed are actually the indices for that particular vertex. The vertex shader then uses the indices to access the actual attribute through one or more buffer textures.

Attributes can be stored in multiple buffer textures or all within one. If the latter is used, then the shader will need an offset to add to each index in order to find the corresponding attribute's start index in the buffer.
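To make the offset arithmetic concrete, here is a small CPU-side emulation in Python. It is only a sketch: the names `pack_streams` and `fetch_vertex` are made up for this example, and in a real vertex shader each lookup would be a fetch from a buffer texture (for example `texelFetch` on a `samplerBuffer` in GLSL) rather than a list access.

```python
def pack_streams(streams):
    """Concatenate attribute arrays into one buffer and record each start offset."""
    buffer, offsets = [], []
    for stream in streams:
        offsets.append(len(buffer))
        buffer.extend(stream)
    return buffer, offsets


def fetch_vertex(buffer, offsets, corner):
    """Emulate the per-vertex lookups: one fetch per attribute at offset + index."""
    return tuple(buffer[offset + index] for offset, index in zip(offsets, corner))


positions = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
normals = [(0.0, 0.0, 1.0)]

# One shared buffer: positions start at offset 0, normals at offset 3.
buffer, offsets = pack_streams([positions, normals])

# The "vertex attributes" sent to the shader are just (position, normal) index pairs.
corners = [(0, 0), (1, 0), (2, 0)]
triangle = [fetch_vertex(buffer, offsets, c) for c in corners]
```

Here all three corners share the single normal, so nothing is duplicated in the buffer. The cost is an extra dependent fetch per attribute for every vertex.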

Regular vertex attributes can be compressed in many ways. Buffer textures have fewer means of compression, allowing only a relatively limited number of vertex formats (via the image formats they support).

Please note again that any of these techniques may decrease overall vertex processing performance. Therefore, they should only be used in the most memory-limited of circumstances, after all other options for compression or optimization have been exhausted.

OpenGL ES provides buffer textures as well, as of version 3.2. OpenGL 4.3 and later allow you to read buffer objects more directly via shader storage buffer objects (SSBOs) rather than buffer textures, which might have better performance characteristics.

I found a way to reduce this sort of repetition. It runs a bit contrary to some of the statements made in the other answer, and it doesn't specifically fit the question asked here. It does, however, address my own question, which was closed as a duplicate of this one.

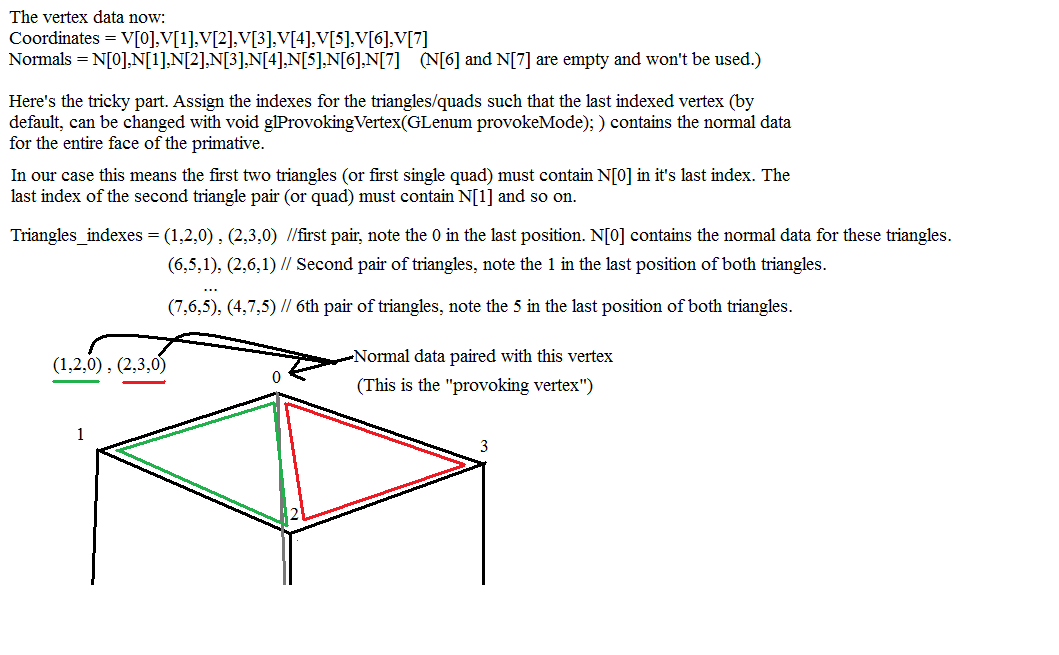

I just learned about interpolation qualifiers, specifically "flat". It's my understanding that putting the flat qualifier on a vertex shader output causes only the provoking vertex to pass its values to the fragment shader.

This means for the situation described in this quote:

> So if you have a cube, where each face has its own normal, you will need to replicate the position and normal data a lot. You will need 24 positions and 24 normals, even though the cube will only have 8 unique positions and 6 unique normals.

You can have 8 vertices, 6 of which carry the unique normals while the normal values of the other 2 are disregarded, so long as you carefully order your primitives' indices such that the "provoking vertex" contains the normal data you want to apply to the entire face.
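Here is a sketch in Python of how such an index ordering could be built for a cube. It assumes the provoking vertex is the last vertex of each triangle, which I believe is OpenGL's default (Direct3D uses the first, and `glProvokingVertex` can change it in OpenGL). The helper names `assign_provoking` and `build_flat_cube` are made up for this example. Each face is given its own distinct corner, and both of its triangles are fanned around that corner with it placed last.

```python
# Unit cube corners, index = x + 2*y + 4*z.
POSITIONS = [(x, y, z) for z in (0, 1) for y in (0, 1) for x in (0, 1)]

# Each face: (corner indices in counter-clockwise order seen from outside, normal).
FACES = [
    ((4, 5, 7, 6), (0, 0, 1)),
    ((0, 2, 3, 1), (0, 0, -1)),
    ((1, 3, 7, 5), (1, 0, 0)),
    ((0, 4, 6, 2), (-1, 0, 0)),
    ((2, 6, 7, 3), (0, 1, 0)),
    ((0, 1, 5, 4), (0, -1, 0)),
]


def assign_provoking(quads):
    """Pick a distinct corner for every quad using simple backtracking."""
    chosen = []

    def solve(face):
        if face == len(quads):
            return True
        for v in quads[face]:
            if v not in chosen:
                chosen.append(v)
                if solve(face + 1):
                    return True
                chosen.pop()
        return False

    if not solve(0):
        raise ValueError("no face-to-vertex assignment exists")
    return chosen


def build_flat_cube():
    """Return (normals, indices) for an 8-vertex cube meant for 'flat' shading."""
    provoking = assign_provoking([quad for quad, _ in FACES])
    normals = [(0, 0, 0)] * len(POSITIONS)   # placeholder for the 2 disregarded slots
    indices = []
    for (quad, normal), p in zip(FACES, provoking):
        normals[p] = normal
        k = quad.index(p)
        _, n1, n2, n3 = quad[k:] + quad[:k]  # rotate so the quad starts at p
        # Fan around p with p last in both triangles; rotation keeps the winding.
        indices += [n1, n2, p, n2, n3, p]
    return normals, indices


normals, indices = build_flat_cube()
```

The result is 8 positions, 8 normal slots (6 meaningful) and 36 indices. This only works for attributes declared `flat`; anything that must be interpolated across a face still needs matching per-corner values.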

EDIT: My understanding of how it works:
