You're vastly underestimating the cost of a branch miss. It takes multiple cycles to recover after a branch miss is detected (many pipeline stages after fetch/decode of the branch). What exactly happens when a skylake CPU mispredicts a branch?.

And for some reason you're assuming that mis-speculation doesn't continue into later instructions (not shown here).

Plus, adding up instruction counts is very far from accurate for estimating throughput or latency costs. Some instructions have more or less latency; for example many recent x86 CPUs have zero-latency mov. (Can x86's MOV really be "free"? Why can't I reproduce this at all?). For throughput, though, mov still costs front-end bandwidth.

See What considerations go into predicting latency for operations on modern superscalar processors and how can I calculate them by hand? for how to actually do static analysis to figure out how fast some code will run on a modern x86 (in the correctly-predicted case). Spoiler: it's complicated, and sometimes what's optimal for throughput isn't optimal for latency, so the choice depends on the surrounding code. (Doing stuff on independent data, vs. a long chain where the output of one is the input to the next.)

cmov is 2 uops on Intel before Broadwell, and control dependencies are off the critical path (thanks to speculative execution). So with correct prediction, branchy code can be excellent vs. having a data dependency to select the correct result after calculating both (http://yarchive.net/comp/linux/cmov.html). Here's a case where cmov was a pessimization: gcc optimization flag -O3 makes code slower than -O2.

If branch-prediction failed 50% of the time (i.e. no better than chance!), a loop including the cmov version could be something like 10x faster.

@Mysticial's real-life benchmarks for branchy vs. branchless show a factor of 5 speedup for branchless on this condition over a random (not sorted) array:

if (data[c] >= 128)

sum += data[c];

Why is it faster to process a sorted array than an unsorted array?. (With sorted data, branchy is only slightly faster in this case. Apparently some other bottleneck stopped branchy from being a lot faster on Mysticial's Nehalem.)

50% branch mispredict rate would be absolutely horrible, nearly worst case. Even 20% is really bad; modern branch predictors are ridiculously good if there's any kind of pattern in the branch history. e.g. Skylake can fully predict the branching in a BubbleSort over 12 elements, if done repeatedly with the same input data every time as part of a microbenchmark.

But looping over random data can be highly unpredictable.

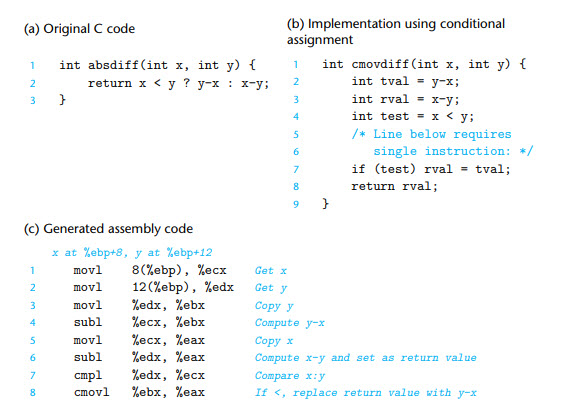

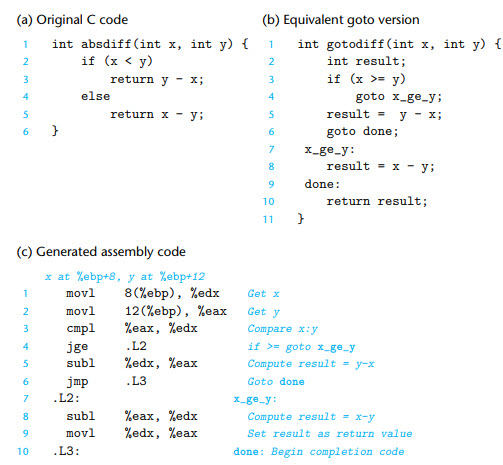

Your source code is all images, so I can't copy/paste it onto the Godbolt compiler explorer (https://godbolt.org/) and see what modern gcc and clang do when you compile with -O3. I suspect it will be more efficient than the code sample you're showing: looks like a lot of mov, and the cmov should be able to use flags set by sub. This is what I'd expect (or at least hope for) from your absdiff function:

# loading args into registers not shown, only necessary with crappy stack-args calling conventions

# branchless absdiff. Hand-written.

# Compilers should do something like this if they choose branchless at all.

# inputs in x=%edi, y=%esi

mov %edi, %eax

sub %esi, %eax # EAX= x-y

sub %edi, %esi # ESI= y-x

cmovg %esi, %eax # if(y>x) eax=y-x

# result in EAX

On Broadwell/Skylake, or Ryzen:

- both

sub instructions can run on the same cycle (because mov is zero latency, assuming successful mov-elimination).

cmovg has its inputs ready the cycle after that, and produces its result in another 1 cycle.

So we have 4 uops with 2 cycle latency from both x and y being ready. Haswell and earlier are an extra uop with an extra cycle of latency, because cmov has 3 inputs and earlier Intel CPUs can't decode 3-input instructions to a single uop.

The compiler output you show is pretty poor; way too much mov and using a separate cmp. cmp is just a sub that only writes flags, not the integer-register operand, and we already need the sub result.

If you have a compiler that emits something like that with full optimization enabled, instead of what I showed above, report a missed-optimization bug.

As @Ped7g says, compilers can use branchless for if(), or branching for a ternary, if they decide. If you're dealing with array elements instead of local vars, ternary tends to help because unconditionally writing a variable lets the compiler optimize without worrying about stepping on something another thread is doing. (i.e. the compiler can't invent writes to potentially-shared variables, but the ternary operator always writes.)

how to force the use of cmov in gcc and VS

AVX-512 and Branching

movmakes only the result difficult to predict, so any further instructions depending on theeaxwill have to wait until the condition is finalized and result is known, but even those may be already fetch+decoded+prepared, and other instructions which don't depend on those can be even fully executed (out of order), so the conditional mov puts less strain on that parallel-out-of-order complex thing, which is modern x86 CPU. (it's much more difficult to speculate whole branch, than to stall depending instructions in single queue). – Utterance