I'm working on optimization techniques performed by the .NET Native compiler. I've created a sample loop:

for (int i = 0; i < 100; i++)

{

Function();

}

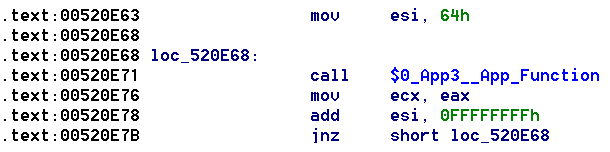

And I've compiled it with Native. Then I disassembled the result .dll file with machine code inside in IDA. As the result, I have:

(I've removed a few unnecessary lines, so don't worry that address lines are inconsistent)

I understand that add esi, 0FFFFFFFFh means really subtract one from esi and alter Zero Flag if needed, so we can jump to the beginning if zero hasn't been reached yet.

What I don't understand is why did the compiler reverse the loop?

I came to the conclusion that

LOOP:

add esi, 0FFFFFFFFh

jnz LOOP

is just faster than for example

LOOP:

inc esi

cmp esi, 064h

jl LOOP

But is it really because of that and is the speed difference really significant?

ianywhere so it can generate less code. – Saccharide