There are a lot of questions here about matching points in polygons efficiently (examples: Here and Here). The primary variables of interest in these are high number of points N, and number of polygon vertices V. These are all good and useful, but I am looking at a high number of points N and polygons G. This also means that my output will be different (I've primarily seen output consisting of the points that fall inside a polygon, but here I'd like to know the polygon attached to a point).

I have a shapefile with a large number of polygons (hundreds of thousands). Polygons can touch, but there is little to no overlap between them (any overlap of interiors would be a result of error - think census block groups). I also have a csv with points (millions), and I would like to categorize those points by which polygon the point falls in, if any. Some may not fall into a polygon (continuing with my example, think points over the ocean). Below I set up a toy example to look at the issue.

Setup:

import numpy as np

from shapely.geometry import shape, MultiPolygon, Point, Polygon

import geopandas as gpd

import pandas as pd

import matplotlib.pyplot as plt

from shapely.strtree import STRtree

#setup:

np.random.seed(12345)

# shape gridsize:

gridsize=10

avgpointspergridspace=10 #point density

Creating a geodataframe of polygons (simulating a shapefile imported using geopandas):

# creating a geodataframe (shapefile imported via geopandas):

garr=np.empty((gridsize,gridsize),dtype=object)

for i in range(gridsize):

for j in range(gridsize):

garr[i,j]=Point(i,j)

# polygons:

poly_list=[]

for i in range(gridsize-1):

for j in range(gridsize-1):

temp_points=[garr[i,j],garr[i,j+1],garr[i+1,j+1],garr[i+1,j],garr[i,j]]

poly=Polygon([[p.x,p.y] for p in temp_points])

poly_list+=[poly]

# creating a geodataframe, including some additional numeric and string variables:

gdf=gpd.GeoDataFrame()

gdf['geometry']= poly_list

gdf['id']=list(range(len(gdf['geometry'])))

gdf['numeric']=0

gdf['string']='foo'

# creating some holes in the grid:

gdf['included']=[np.random.choice([True,False],p=[.95,.05]) for x in range(len(gdf))]

gdf_polys=gdf[gdf['included']]

Generating points (simulating a csv imported via pandas):

# creating a pandas dataframe with points (csv of coordinates imported to pandas):

npoints=(gridsize+2)**2*10

fgridend=gridsize+1

fgridstart=-1

xlist=[]

ylist=[]

points=[]

for i in range(npoints):

x=fgridstart+np.random.random()*fgridend

y=fgridstart+np.random.random()*fgridend

xlist+=[x]

ylist+=[y]

df=pd.DataFrame(list(zip(xlist,ylist)),columns=['x','y'])

coords=[Point(xy) for xy in zip(df['x'],df['y'])]

gdf_points=gpd.GeoDataFrame(df,geometry=coords)

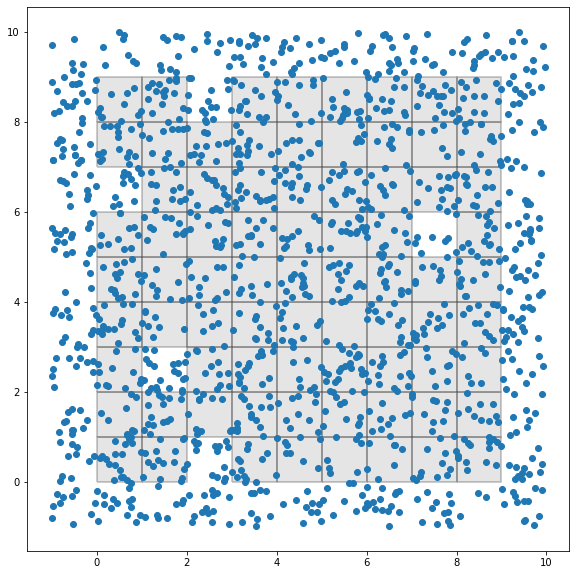

Plotting the results:

fig, ax = plt.subplots(figsize=[10,10])

gdf_polys.plot(ax=ax,facecolor='gray',alpha=.2,edgecolor='black',lw=2)

gdf_points.plot(ax=ax)

Returns:

I now want to match points with the polygons. So The desired output would be an additional column in gdf_points with an identifier for which polygon the point is associated with (using the gdf_polys['id'] column). My very slow code, which produces the correct result is below:

def id_gen(row):

point=row['geometry']

out=0

for i,poly in shapes_list:

if poly.contains(point):

out=i

break

return out

#shapes_list=gdf_polys['geometry']

shapes_list=[(gdf_polys['id'].iloc[i],gdf_polys['geometry'].iloc[i]) for i in range(len(gdf_polys['geometry']))]

point_list=[]

gdf_points['poly']=gdf_points.apply(id_gen,axis=1)

which returns:

x y geometry poly

0 4.865555 1.777419 POINT (4.86555 1.77742) 37

1 6.929483 3.041826 POINT (6.92948 3.04183) 57

2 4.485133 1.492326 POINT (4.48513 1.49233) 37

3 2.889222 6.159370 POINT (2.88922 6.15937) 24

4 2.442262 7.456090 POINT (2.44226 7.45609) 25

... ... ... ... ...

1435 6.414556 5.254309 POINT (6.41456 5.25431) 59

1436 6.409027 4.454615 POINT (6.40903 4.45461) 58

1437 5.763154 2.770337 POINT (5.76315 2.77034) 47

1438 9.613874 1.371165 POINT (9.61387 1.37116) 0

1439 6.013953 3.622011 POINT (6.01395 3.62201) 57

1440 rows × 4 columns

I should note that actual shapefiles will have much more complicated shapes than this grid. I think there are a few places that this could be sped up:

- Not having to loop over every polygon (this will get unwieldy as P increases)

- Using a different algorithm for the point-in-poly. I feel like there should be a way to use STRtree to do this, but currently I've only been able to return the points (and not the indices).

- Vectorize dataframe operations (avoiding apply).

- Probably something else not on my radar (parallelization or something else)

Beginning benchmarking: With a grid size of 10, point density of 10 (1440 points): took about 180ms With a grid size of 20, point density of 10 (4840 points): took about 2.8s With a grid size of 30, point density of 10 (10240 points): took about 12.8s With a grid size of 50, point density of 10 (27040 points): took about 1.5 mins So we can see this scales poorly.