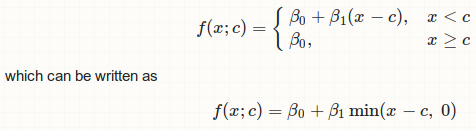

I want to do a piecewise linear regression with one break point, where the 2nd half of the regression line has slope = 0. There are examples of how to do a piecewise linear regression, such as here. The problem I'm having is I'm not clear how to fix the slope of half of the model to be 0.

I tried

lhs <- function(x) ifelse(x < k, k-x, 0)

rhs <- function(x) ifelse(x < k, 0, x-k)

fit <- lm(y ~ lhs(x) + rhs(x))

where k is the break point, but the segment on the right is not a flat / horizontal one.

I want to constrain the slope of the second segment at 0. I tried:

fit <- lm(y ~ x * (x < k) + x * (x > k))

but again, I'm not sure how to get the second half to have a zero slope.

Any help is greatly appreciated.

My own solution

I have a solution thanks to the comment below. Here's the code that I use to optimize and then plot the fit:

x <- c(1, 2, 3, 1, 2, 1, 6, 1, 2, 3, 2, 1, 4, 3, 1)

y <- c(0.041754212, 0.083491254, 0.193129615, 0.104249201, 0.17280516,

0.154342335, 0.303370501, 0.025503008, 0.123934121, 0.191486527,

0.183958737, 0.156707866, 0.31019215, 0.281890206, 0.25414608)

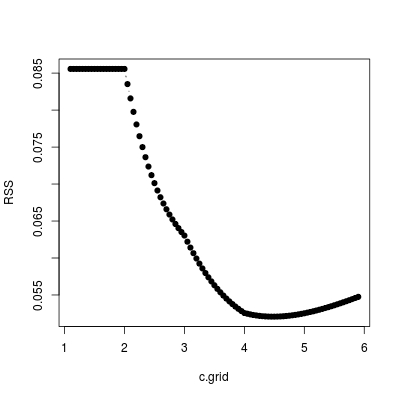

range_x <- max(x) - min(x)

intervals <- 1000

coef1 <- c()

coef2 <- c()

r2 <- c()

for (i in 1:intervals) {

k <- min(x) + (i-1) * (range_x / intervals)

x2 = (x - k) * (x < k)

fit <- lm(y ~ x2)

coef1[i] <- summary(fit)$coef[1]

coef2[i] <- summary(fit)$coef[2]

r2[i] <- summary(fit)$r.squared

}

best_r2 <- max(r2) # get best r squared

pos <- which.max(r2)

best_k <- min(x) + (pos - 1) * (range_x / intervals)

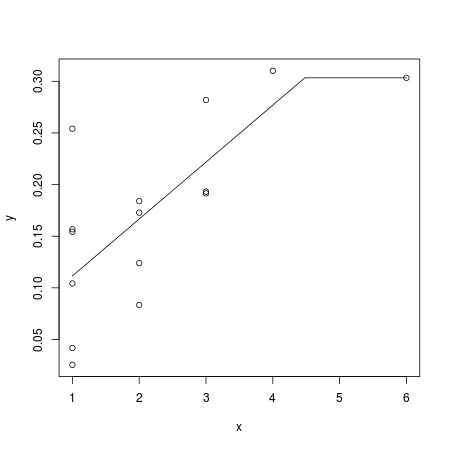

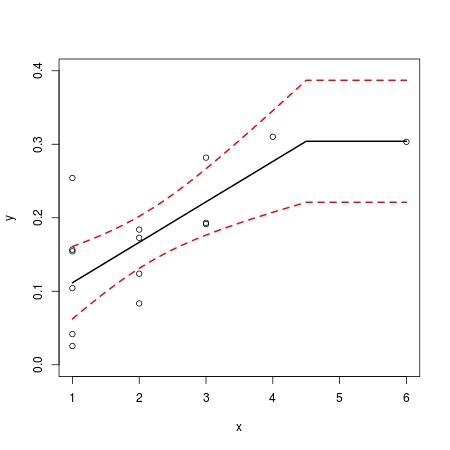

plot(x, y)

curve(coef1[pos] - best_k * coef2[pos] + coef2[pos] * x,

from=min(x), to=best_k, add = TRUE)

segments(best_k, coef1[pos], max(x), coef1[pos])

x = c(1, 2, 3, 1, 2, 1, 6, 1, 2, 3, 2, 1, 4, 3, 1)y = c(0.041754212, 0.083491254, 0.193129615, 0.104249201, 0.17280516, 0.154342335, 0.303370501, 0.025503008, 0.123934121, 0.191486527, 0.183958737, 0.156707866, 0.31019215, 0.281890206, 0.025414608)I realize these data aren't great for the fit i'm describing but that's kind of the point... – Accusatorial