Terminology: you can say a loop is "bound on latency", but when analyzing that bottleneck I wouldn't say "the latency bound" or "bounds". That sounds wrong to me. The thing you're measuring (or calculating via static performance analysis) is the latency or length of the critical path, or the length of the loop-carried dependency chain. (The critical path is the latency chain that's longest, and is the one responsible for the CPU stalling if it's longer than out-of-order exec can hide.)

The key point is that out-of-order execution only cares about true dependencies, and allows operations to execute in parallel otherwise. The CPU can start a new multiply and a new add every cycle. (Assuming from the latency numbers that it's Intel Sandybridge or Haswell, or similar1. i.e. assume the FPU is fully pipelined.)

The two loop-carried dependency chains in the first loop are xpwr *= x and result += stuff, each reading from their own previous iteration, but not coupled to each other in a way that would add their latencies. They can overlap.

result += a[i] * xpwr has 3 inputs:

result from the previous iteration.a[i] is assumed to be ready as early as you want it.xpwr is from the previous iteration. And more importantly, that previous iteration could start computing xpwr right away, not waiting for the previous result.

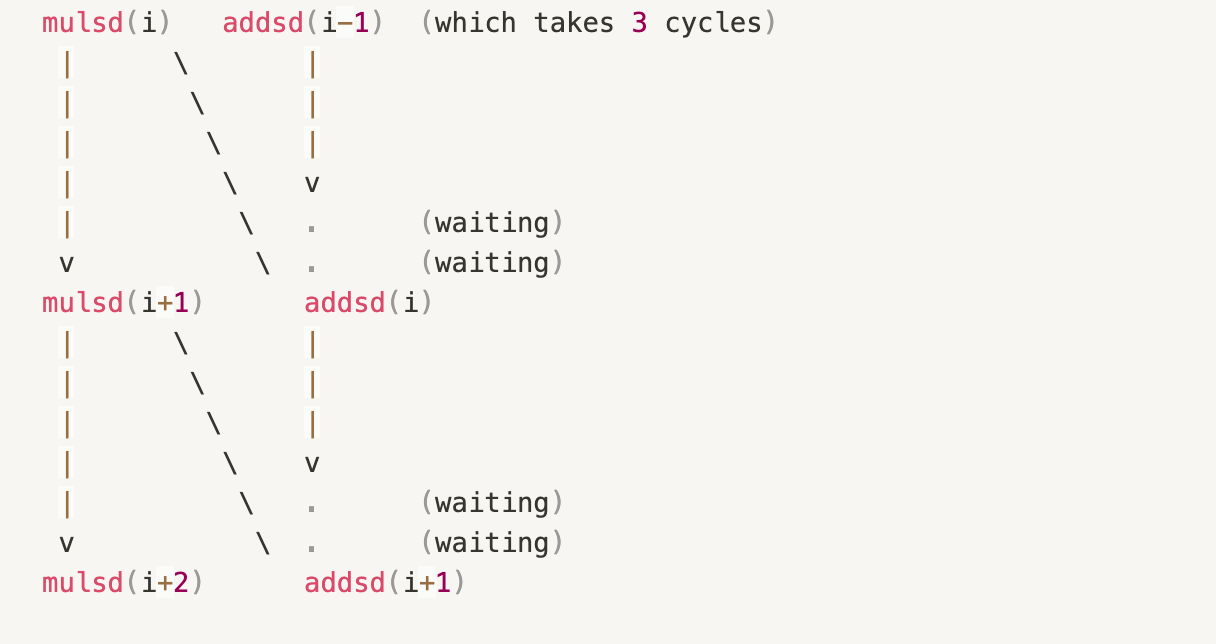

So you have 2 dependency chains, one reading from the other. The addition dep chain has lower latency per step so it just ends up waiting for the multiplication dep chain.

The a[i] * xpwr "forks off" from the xpwr dep chain, independent of it after reading its input. Each computation of that expression is independent of the previous computation of it. It depends on a later xpwr, so the start of each a[i] * xpwr is limited only by the xpwr dependency chain having that value ready.

The loads and integer loop overhead (getting load addresses ready) can be executed far ahead by out-of-order execution.

Graph of the dependency pattern across iterations

mulsd (x86-64 multiply Scalar Double) is for the xpwr updates, addsd for the result updates. The a[i] * xpwr; multiplication is not shown because it's independent work each iteration. It skews the adds later by a fixed amount, but we're assuming there's enough FP throughput to get that done without resource conflicts for the critical path.

mulsd addsd # first iteration result += stuff

| \ | # first iteration xpwr *= x can start at the same time

v \ v

mulsd addsd

| \ |

v \ v

mulsd addsd

| \ |

v \ v

mulsd addsd

(Last xpwr mulsd result is unused, compiler could peel the final iteration and optimize it away.)

Second loop, Horner's method - fast with FMA, else 8 cycles

result = a[i] + x * result; is fewer math operations, but there we do have a loop-carried critical path of 8 cycles. The next mulsd can't start until the addsd is also done. This is bad for long chains (high-degree polynomials), although out-of-order exec can often hide the latency for small degrees, like 5 or 6 coefficients.

This really shines when you have FMA available: each iteration becomes one Fused Multiply-Add. On real Haswell CPUs, FMA has the same 5-cycle latency as an FP multiply, so the loop runs at one iteration per 5 cycles, with less extra latency at the tail to get the final result.

Real world high-performance code often uses this strategy for short polynomials when optimizing for machines with FMA, for high throughput evaluating the same polynomial for many different inputs. (So the instruction-level parallelism is across separate evaluations of the polynomial, rather than trying to create any within one by spending more operations.)

Footnote 1: similarity to real hardware

Having two FP multipliers with those latencies matches Haswell with SSE2/AVX math, although in actual Haswell the FP adder is on the same port as a multiplier, so you can't start all 3 operations in one cycle. FP execution units share ports with the 4 integer ALUs, too, but Sandybridge/Haswell's front-end is only 4 uops wide so it's typically not much of a bottleneck.

See David Kanter's deep dive on Haswell with nice diagrams, and https://agner.org/optimize/, and other performance resources in the x86 tag wiki)

On Broadwell, the next generation after Haswell, FP mul latency improved to 3 cycles. Still 2/clock throughput, with FP add/sub still being 3c and FMA 5c. So the loop with more operations would be faster there, even compared to an FMA implementation of Horner's method.

On Skylake, all FP operations are the same 4-cycle latency, all running on the two FMA units with 2/clock throughput for FP add/sub as well. More recently, Alder Lake re-introduced lower latency FP addition (3 cycles vs. 4 for mul/fma but still keeping the 2/clock throughput), since real-world code often does stuff like naively summing an array, and strict FP semantics don't let compilers optimize it to multiple accumulators. So on recent x86, there's nothing to gain by avoiding FMA if you would still have a multiply dep chain, not just add.

Also related:

What considerations go into predicting latency for operations on modern superscalar processors and how can I calculate them by hand? more general analysis needs to consider other possible bottlenecks: front-end uop throughput, and back-end contention for execution units. Dep chains, especially loop-carried ones, are the 3rd major possible bottleneck (other than stalls like cache misses and branch misses.)

How many CPU cycles are needed for each assembly instruction? - another basic intro to these concepts

Understanding the impact of lfence on a loop with two long dependency chains, for increasing lengths - ability of out-of-order exec to overlap dep chains is limited when they're too long.

Why does mulss take only 3 cycles on Haswell, different from Agner's instruction tables? (Unrolling FP loops with multiple accumulators) - FP dep chains in parallel with unrolling when possible, e.g. a dot product.

In this case, for large-degree polynomials you could be doing stuff like x2 = x*x / x4 = x2 * x2, and maybe generate x^(2n) and x^(2n+1) in parallel. As in Estrin's scheme used in Agner Fog's vectorclass library for short polynomials. I found that when short polynomials are part of independent work across loop iterations (e.g. as part of log(arr[i])), straight Horner's Rule was better throughput, as out-of-order exec was able to hide the latency of a chain of 5 or 6 FMAs interleaved with other work.

=instead of+=was probably a transcription error, thanks for cleaning up this question. Also, this is apparently Intel Haswell specifically, or a simplified model of it. Everything matches that. (Although the answer is still the same for Sandybridge; we don't have any need to start more than 1 mulsd per clock, or more than 3 ALU ops per cycle.) – Peruzzi