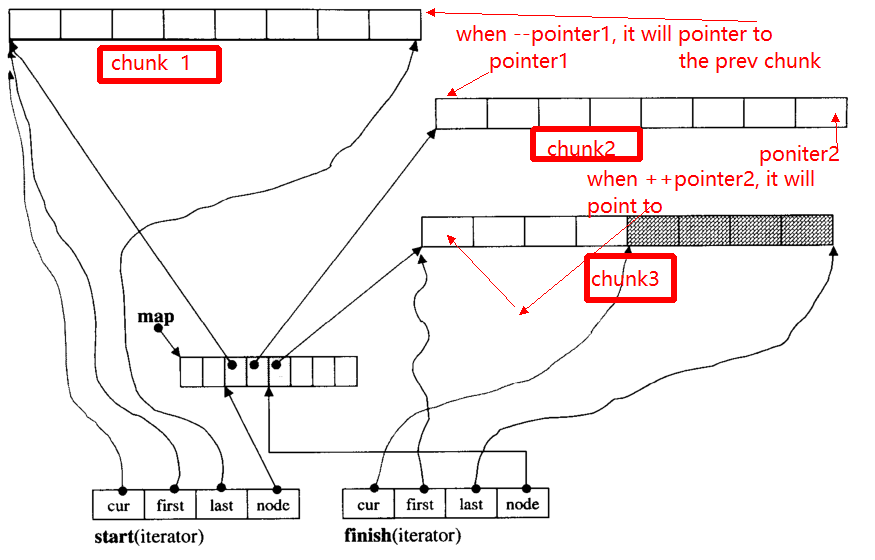

The data in a deque is stored in fixed-size chunks (arrays), which are pointed to by a map (itself a contiguous array of pointers, whose size may change).

![deque internal structure]()
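As a rough illustration of this layout, the sketch below maps a logical index to a (chunk, slot) pair. It assumes every chunk holds `kChunkSize` elements and that the first element sits at `start_slot` of chunk 0. The names `kChunkSize`, `Location`, and `locate` are hypothetical and are not part of any library.

```cpp
#include <cassert>
#include <cstddef>

// Hypothetical chunk length; real implementations derive it from sizeof(T).
constexpr std::size_t kChunkSize = 8;

struct Location {
    std::size_t chunk; // which map entry to follow
    std::size_t slot;  // position inside that chunk
};

// Map a logical index to its chunk and slot. `start_slot` is the slot of
// element 0 inside chunk 0 (a deque does not have to start at slot 0).
inline Location locate(std::size_t index, std::size_t start_slot) {
    assert(start_slot < kChunkSize);
    const std::size_t pos = index + start_slot;
    return Location{pos / kChunkSize, pos % kChunkSize};
}
```

For example, with a chunk size of 8 and `start_slot` 6, element 5 lands in chunk 1 at slot 3.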

The core of the deque iterator looks like this:

    /*
      buff_size is the length of the chunk
    */
    template <class T, size_t buff_size>
    struct __deque_iterator {
        typedef __deque_iterator<T, buff_size> iterator;
        typedef T** map_pointer;

        T* cur;   // pointer to the current element in the chunk
        T* first; // the beginning of the chunk
        T* last;  // one past the end of the chunk

        // The iterator may jump to another chunk,
        // so it also keeps a pointer into the map.
        map_pointer node;
    };

The core of the deque itself looks like this:

    /*
      buff_size is the length of the chunk
    */
    template <typename T, size_t buff_size = 0>
    class deque {
    public:
        typedef T value_type;
        typedef T& reference;
        typedef T* pointer;
        typedef __deque_iterator<T, buff_size> iterator;
        typedef size_t size_type;
        typedef ptrdiff_t difference_type;

    protected:
        typedef pointer* map_pointer;

        // allocates memory for the chunks
        typedef allocator<value_type> dataAllocator;

        // allocates memory for the map
        typedef allocator<pointer> mapAllocator;

    private:
        // data members
        iterator start;
        iterator finish;
        map_pointer map;
        size_type map_size;
    };

Below is the core code of deque, in two parts:

1. The iterator
2. How random access to a deque is implemented

1. Iterator (`__deque_iterator`)

The main difficulty for the iterator is that `++` or `--` may move it into another chunk when it points at the edge of a chunk. For example, suppose there are three data chunks: chunk 1, chunk 2, and chunk 3.

pointer1 points to the beginning of chunk 2; after `--pointer1` it points to the last element of chunk 1. The same applies to pointer2.

![enter image description here]()

The main functions of `__deque_iterator` are given below.

First, jumping to an arbitrary chunk:

    void set_node(map_pointer new_node) {
        node = new_node;
        first = *new_node;
        last = first + chunk_size();
    }

Note that `chunk_size()` computes the chunk size; for simplicity, you can assume it returns 8 here.

`operator*` returns the element that `cur` points to:

    reference operator*() const {
        return *cur;
    }

`operator++` and `operator--`:

    // `self` is shorthand for __deque_iterator<T, buff_size>

    // prefix increment
    self& operator++() {
        ++cur;
        if (cur == last) {      // reached the end of the chunk
            set_node(node + 1); // jump to the next chunk
            cur = first;
        }
        return *this;
    }

    // postfix increment
    self operator++(int) {
        self tmp = *this;
        ++*this; // invoke prefix ++
        return tmp;
    }

    // prefix decrement
    self& operator--() {
        if (cur == first) {     // at the beginning of the chunk
            set_node(node - 1); // jump to the previous chunk
            cur = last;
        }
        --cur;
        return *this;
    }

    // postfix decrement
    self operator--(int) {
        self tmp = *this;
        --*this; // invoke prefix --
        return tmp;
    }
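To see the chunk hop in isolation, here is a small self-contained sketch of the same logic. The names `MiniIter`, `make_iter`, and `kChunk` are hypothetical, the element type is fixed to `int`, and the chunk length is assumed to be 4 to keep the example short.

```cpp
#include <cassert>
#include <cstddef>

constexpr std::ptrdiff_t kChunk = 4; // hypothetical chunk length

// Stripped-down iterator over int chunks; illustrative only.
struct MiniIter {
    int*  cur;   // current element
    int*  first; // beginning of the current chunk
    int*  last;  // one past the end of the current chunk
    int** node;  // position in the map

    void set_node(int** new_node) {
        node  = new_node;
        first = *new_node;
        last  = first + kChunk;
    }
    int& operator*() const { return *cur; }
    MiniIter& operator++() {
        ++cur;
        if (cur == last) { set_node(node + 1); cur = first; }
        return *this;
    }
    MiniIter& operator--() {
        if (cur == first) { set_node(node - 1); cur = last; }
        --cur;
        return *this;
    }
};

// Builds an iterator pointing at `slot` of the chunk referenced by `node`.
inline MiniIter make_iter(int** node, std::ptrdiff_t slot) {
    assert(slot >= 0 && slot < kChunk);
    MiniIter it;
    it.set_node(node);
    it.cur = it.first + slot;
    return it;
}
```

With three chunks in a map, decrementing an iterator built at slot 0 of the second chunk leaves it on the last element of the first chunk, and incrementing it again brings it back. Note that incrementing past the last chunk would read beyond the map, so the caller has to make sure a following map entry exists.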

Skipping n steps (random access):

    self& operator+=(difference_type n) { // n can be positive or negative
        difference_type offset = n + (cur - first);
        if (offset >= 0 && offset < difference_type(chunk_size())) {
            // still in the same chunk
            cur += n;
        } else {
            // not in the same chunk
            difference_type node_offset;
            if (offset > 0) {
                node_offset = offset / difference_type(chunk_size());
            } else {
                node_offset = -((-offset - 1) / difference_type(chunk_size())) - 1;
            }
            // jump to the new chunk
            set_node(node + node_offset);
            // set the new cur
            cur = first + (offset - node_offset * difference_type(chunk_size()));
        }
        return *this;
    }

    // skip n steps
    self operator+(difference_type n) const {
        self tmp = *this;
        return tmp += n; // reuse operator+=
    }

    self& operator-=(difference_type n) {
        return *this += -n; // reuse operator+=
    }

    self operator-(difference_type n) const {
        self tmp = *this;
        return tmp -= n; // reuse operator-=
    }

    // random access (the iterator can skip n steps)
    // invokes operator+ and operator*
    reference operator[](difference_type n) const {
        return *(*this + n);
    }
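The negative branch of `operator+=` is the least obvious step: integer division in C++ truncates toward zero, so the code rounds toward negative infinity by hand. The sketch below isolates that arithmetic. The names `node_offset_for` and `slot_for` are hypothetical, and the chunk size is passed in explicitly rather than taken from `chunk_size()`.

```cpp
#include <cassert>
#include <cstddef>

// Number of chunks to move for an offset measured from `first` of the
// current chunk. Follows the two branches used in operator+=.
inline std::ptrdiff_t node_offset_for(std::ptrdiff_t offset, std::ptrdiff_t chunk) {
    assert(chunk > 0);
    if (offset >= 0) return offset / chunk;
    return -((-offset - 1) / chunk) - 1; // floor division for negative offsets
}

// Slot inside the destination chunk; always in [0, chunk).
inline std::ptrdiff_t slot_for(std::ptrdiff_t offset, std::ptrdiff_t chunk) {
    return offset - node_offset_for(offset, chunk) * chunk;
}
```

With a chunk size of 8, an offset of -1 gives a node offset of -1 and slot 7 (the last slot of the previous chunk), while an offset of 10 gives a node offset of 1 and slot 2.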

2. Random access to deque elements

Common member functions of deque:

    iterator begin() { return start; }

    iterator end() { return finish; }

    reference front() {
        // invokes __deque_iterator::operator*,
        // which returns *cur of start
        return *start;
    }

    reference back() {
        // can't use *finish: finish points one past the last element
        iterator tmp = finish;
        --tmp;
        return *tmp; // the element just before finish
    }

    reference operator[](size_type n) {
        // random access, uses __deque_iterator::operator[]
        return start[n];
    }
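The standard `std::deque` exposes the same member functions, so the relationships above can be checked against it. The helper `make_range` below is a hypothetical name used only for this check; it fills a deque from both ends so that elements are likely to span several chunks.

```cpp
#include <cassert>
#include <deque>

// Builds {lo, ..., hi} by growing from the middle value in both directions.
inline std::deque<int> make_range(int lo, int hi) {
    assert(lo <= hi);
    std::deque<int> d;
    const int mid = lo + (hi - lo) / 2;
    d.push_back(mid);
    for (int v = mid - 1; v >= lo; --v) d.push_front(v);
    for (int v = mid + 1; v <= hi; ++v) d.push_back(v);
    return d;
}
```

For a deque `d` built this way, `d[n]` refers to the same element as `*(d.begin() + n)`, and `d.back()` is the same element as `*(d.end() - 1)`.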

See also this question, which gives the main code of deque:

https://mcmap.net/q/109492/-what-really-is-a-deque-in-stl