I have a question about the beam search algorithm.

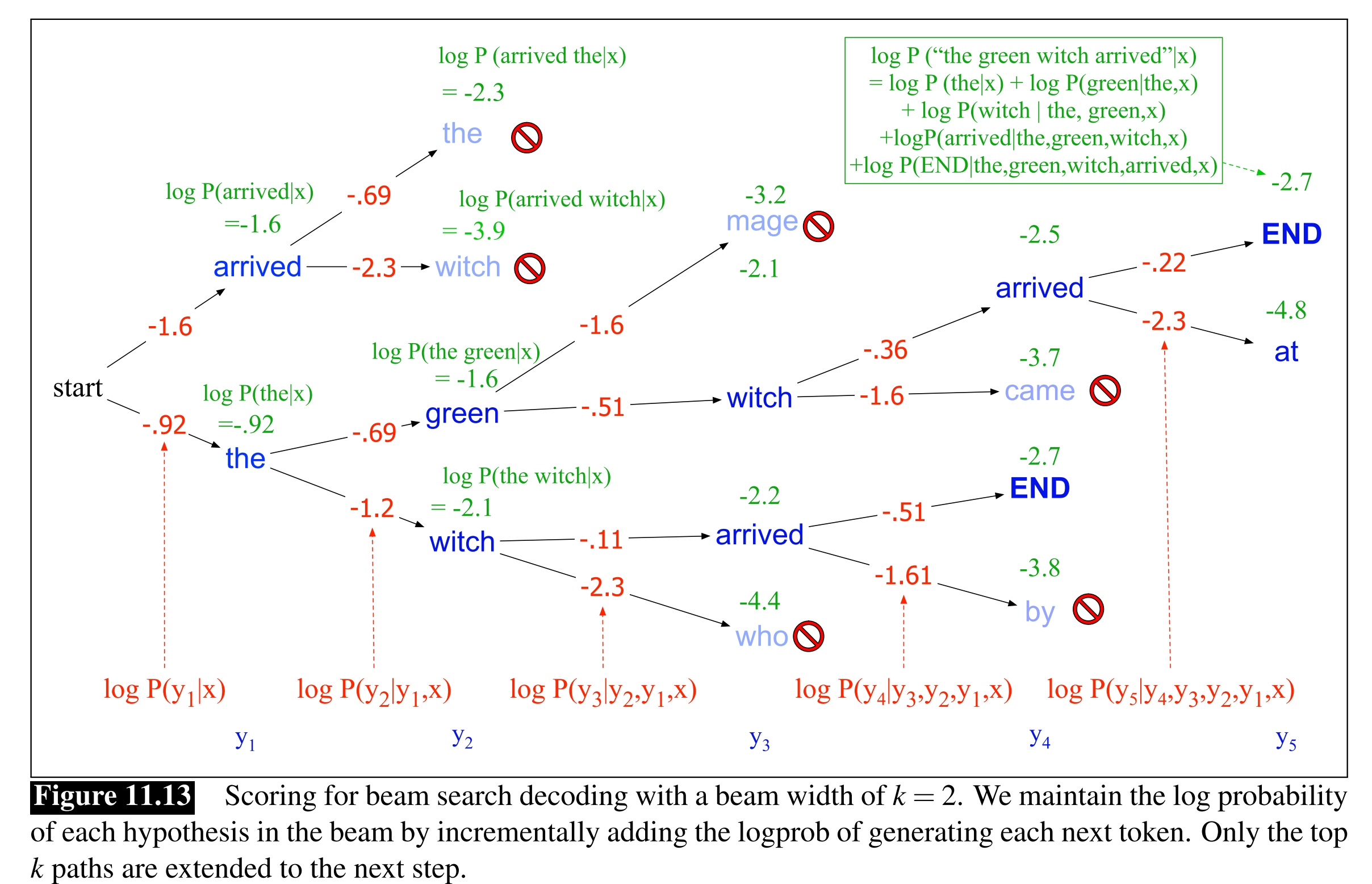

Let's say that n = 2 (the number of nodes we expand from every node). At the beginning we only have the root, and we expand 2 nodes from it. From each of those two nodes we expand two more, so at that point we have 4 leaves. We continue like this until we find the answer.

Is this how beam search works? Does it expand only n = 2 children of every node, or does it keep only 2 leaf nodes in total at all times?

I used to think that n = 2 means that we should have 2 active nodes at most from each node, not two for the whole tree.
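
To make the two readings concrete, here is a minimal sketch that runs both on a toy tree and compares the frontier sizes. Everything in it is an assumption for illustration: the function name `beam_search_levels`, the `per_node` flag, the ternary toy tree, and the scoring function (lower is better) are hypothetical and not taken from any library or from the figure above.

```python
import heapq

def beam_search_levels(root, children, score, width, depth, per_node=False):
    """Return the list of frontiers, one per level, starting with [root].

    per_node=False: keep the `width` best nodes across the whole level.
    per_node=True:  keep the `width` best children of every frontier node.
    Lower score means better node.
    """
    frontier = [root]
    levels = [frontier]
    for _ in range(depth):
        if per_node:
            # Reading 1: prune separately under each parent.
            next_frontier = []
            for node in frontier:
                next_frontier.extend(
                    heapq.nsmallest(width, children(node), key=score)
                )
        else:
            # Reading 2: pool all children, then prune the whole level.
            candidates = [c for node in frontier for c in children(node)]
            next_frontier = heapq.nsmallest(width, candidates, key=score)
        if not next_frontier:
            break
        frontier = next_frontier
        levels.append(frontier)
    return levels

# Toy problem (assumed): nodes are tuples of digits, each node has three
# children, and the score of a node is the sum of its digits.
def children(node):
    return [node + (i,) for i in range(3)]

def score(node):
    return sum(node)

per_node_levels = beam_search_levels((), children, score, width=2, depth=3, per_node=True)
level_wide_levels = beam_search_levels((), children, score, width=2, depth=3)

print([len(level) for level in per_node_levels])    # [1, 2, 4, 8]
print([len(level) for level in level_wide_levels])  # [1, 2, 2, 2]
```

With the per-node reading the frontier doubles at every level (2, 4, 8, ...), which is what I described above. With the level-wide reading the frontier never holds more than n = 2 nodes. The common textbook description of beam search matches the level-wide reading, with n called the beam width.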