I have a function that draws triangles with Qt3D (which renders through OpenGL).

I draw two triangles by pressing a button (the handler is `on_drawMapPushButton_clicked()`).

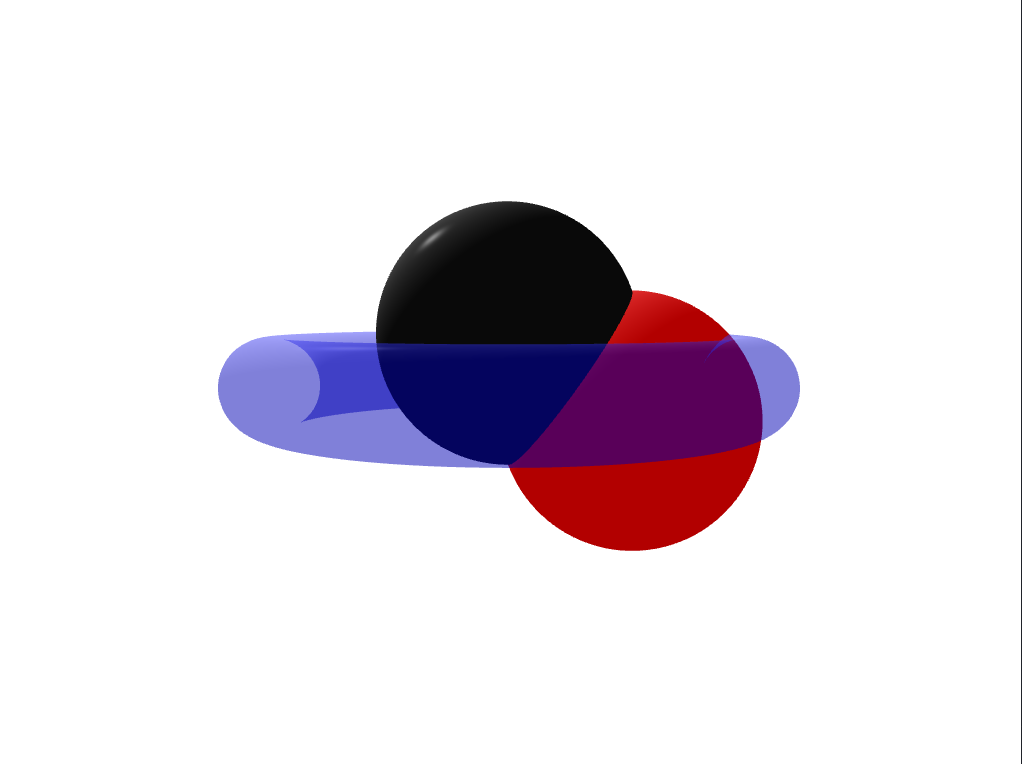

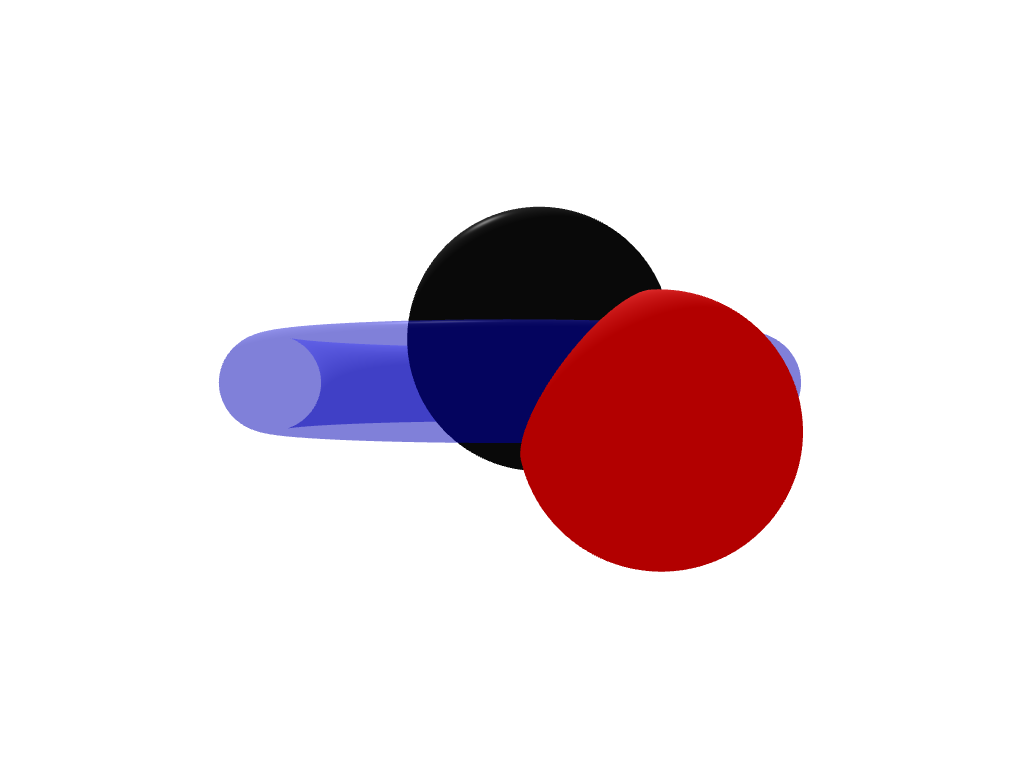

Then I draw a sphere that is placed above these triangles. The sphere is drawn correctly over the first triangle, but the second triangle is drawn over the sphere instead of the other way around.

If I press the button a second time, the sphere is drawn correctly over both triangles.

When I press the button a third time, the second triangle is drawn over the sphere again.

When I press the button a fourth time, the sphere is drawn correctly over both triangles again, and so on: the result alternates with every press.

If I use `QPhongMaterial` instead of `QPhongAlphaMaterial` for the sphere mesh, the sphere is always drawn correctly over both triangles, as it should be.

I can't understand what I'm doing wrong. How can I make the sphere always be drawn over the triangles?

Code that draws the transparent sphere:

```cpp
selectModel_ = new Qt3DExtras::QSphereMesh(selectEntity_);
selectModel_->setRadius(75);
selectModel_->setSlices(150);

selectMaterial_ = new Qt3DExtras::QPhongAlphaMaterial(selectEntity_);
selectMaterial_->setAmbient(QColor(28, 61, 136));
selectMaterial_->setDiffuse(QColor(11, 56, 159));
selectMaterial_->setSpecular(QColor(10, 67, 199));
selectMaterial_->setShininess(0.8f);

selectEntity_->addComponent(selectModel_);
selectEntity_->addComponent(selectMaterial_);
```

Function `drawTriangles`:

```cpp
// Qt 5 header layout
#include <QByteArray>
#include <QColor>
#include <QPolygonF>
#include <Qt3DCore/QEntity>
#include <Qt3DExtras/QPhongMaterial>
#include <Qt3DRender/QAttribute>
#include <Qt3DRender/QBuffer>
#include <Qt3DRender/QGeometry>
#include <Qt3DRender/QGeometryRenderer>

void drawTriangles(const QPolygonF &triangles, const QColor &color)
{
    int numOfVertices = triangles.size();

    // Create and fill the vertex buffer: 3 floats (x, y, z) per vertex
    QByteArray bufferBytes;
    bufferBytes.resize(3 * numOfVertices * static_cast<int>(sizeof(float)));
    float *positions = reinterpret_cast<float*>(bufferBytes.data());
    for (const QPointF &point : triangles) {
        *positions++ = static_cast<float>(point.x());
        *positions++ = 0.0f; // We only need to draw on the surface (y = 0)
        *positions++ = static_cast<float>(point.y());
    }

    geometry_ = new Qt3DRender::QGeometry(mapEntity_);
    auto *buf = new Qt3DRender::QBuffer(geometry_);
    buf->setData(bufferBytes);

    positionAttribute_ = new Qt3DRender::QAttribute(mapEntity_);
    positionAttribute_->setName(Qt3DRender::QAttribute::defaultPositionAttributeName());
    positionAttribute_->setVertexBaseType(Qt3DRender::QAttribute::Float); // Our buffer contains only floats
    positionAttribute_->setVertexSize(3); // Number of components per vertex
    positionAttribute_->setAttributeType(Qt3DRender::QAttribute::VertexAttribute);
    positionAttribute_->setByteStride(3 * sizeof(float));
    positionAttribute_->setBuffer(buf);
    geometry_->addAttribute(positionAttribute_); // Add the attribute to our Qt3DRender::QGeometry

    // Create and fill the index buffer: 0, 1, ..., numOfVertices - 1
    QByteArray indexBytes;
    indexBytes.resize(numOfVertices * static_cast<int>(sizeof(unsigned int)));
    unsigned int *indices = reinterpret_cast<unsigned int*>(indexBytes.data());
    for (unsigned int i = 0; i < static_cast<unsigned int>(numOfVertices); ++i) {
        *indices++ = i;
    }

    auto *indexBuffer = new Qt3DRender::QBuffer(geometry_);
    indexBuffer->setData(indexBytes);

    indexAttribute_ = new Qt3DRender::QAttribute(geometry_);
    indexAttribute_->setVertexBaseType(Qt3DRender::QAttribute::UnsignedInt); // Our buffer contains only unsigned ints
    indexAttribute_->setAttributeType(Qt3DRender::QAttribute::IndexAttribute);
    indexAttribute_->setBuffer(indexBuffer);
    indexAttribute_->setCount(static_cast<unsigned int>(numOfVertices)); // Number of indices
    geometry_->addAttribute(indexAttribute_); // Add the attribute to our Qt3DRender::QGeometry

    shape_ = new Qt3DRender::QGeometryRenderer(mapEntity_);
    shape_->setPrimitiveType(Qt3DRender::QGeometryRenderer::Triangles);
    shape_->setGeometry(geometry_);

    // Create the material
    material_ = new Qt3DExtras::QPhongMaterial(mapEntity_);
    material_->setAmbient(color);

    trianglesEntity_ = new Qt3DCore::QEntity(mapEntity_);
    trianglesEntity_->addComponent(shape_);
    trianglesEntity_->addComponent(material_);
}
```

Button press handler `on_drawMapPushButton_clicked()`:

```cpp
void on_drawMapPushButton_clicked()
{
    clearMap(); // Implementation is below

    QPolygonF triangle1;
    triangle1 << QPointF(0, -1000) << QPointF(0, 1000) << QPointF(1000, -1000);
    drawTriangles(triangle1, Qt::black);

    QPolygonF triangle2;
    triangle2 << QPointF(-1000, -1000) << QPointF(-100, 1000) << QPointF(-100, -1000);
    drawTriangles(triangle2, Qt::red);
}
```

Map clearing function `clearMap()`:

```cpp
void clearMap()
{
    if (mapEntity_) {
        delete mapEntity_;
        mapEntity_ = nullptr;
        mapEntity_ = new Qt3DCore::QEntity(view3dRootEntity_);
    }
}
```
