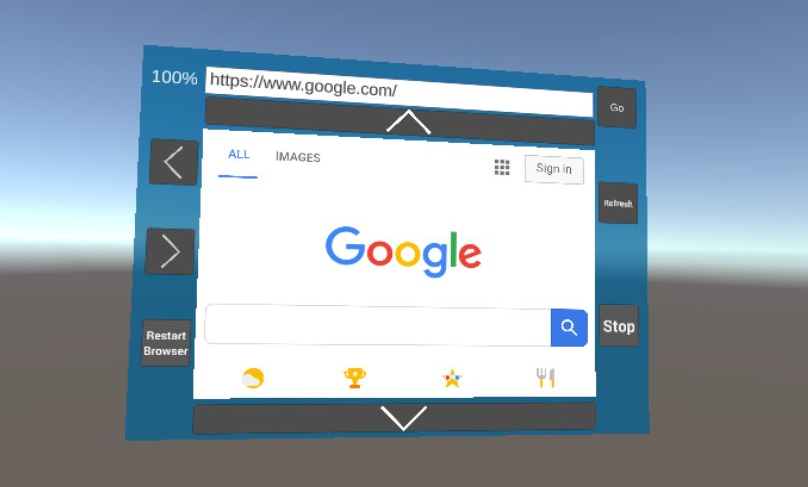

UPDATE: I updated the repo to support video; it is now a fully functioning 3D browser based on the GeckoView browser engine. It relies on Oculus's OVROverlay to render frames generated in an Android plugin onto a Unity3D texture.

This is a repo I made in the hope that we can implement a nice in-game browser. It's a bit buggy and slow, but it works (most of the time).

It uses a Java plugin that renders an Android WebView to a Bitmap by overriding the view's draw method, converts that to a PNG, and passes it to a Unity RawImage. There is plenty of work to do, so feel free to improve it!
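To make the draw, encode, hand-off loop concrete, here is a minimal sketch that runs on a desktop JVM. It is not the plugin's code: `BufferedImage` and `ImageIO` stand in for Android's `Bitmap` and `Bitmap.compress`, and `FrameEncoderSketch` and `FrameListener` are hypothetical names. What it shows is the same pattern of drawing into one reusable off-screen image and reusing one output stream for every frame.

```java
import java.awt.Color;
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

// Desktop stand-in for the Android pipeline; all names here are hypothetical.
final class FrameEncoderSketch {

    /** Receives each encoded frame (plays the role of the Unity callback). */
    public interface FrameListener {
        void onFrame(byte[] png, int width, int height);
    }

    // Allocated once and reused for every frame
    private final BufferedImage frame;
    private final ByteArrayOutputStream stream = new ByteArrayOutputStream();

    public FrameEncoderSketch(int width, int height) {
        frame = new BufferedImage(width, height, BufferedImage.TYPE_INT_ARGB);
    }

    /** Draws one frame off-screen, encodes it as PNG, and hands it to the listener. */
    public void renderAndSend(Color fill, FrameListener listener) {
        Graphics2D g = frame.createGraphics();
        try {
            // Stand-in for super.draw(bmCanvas): paint something into the image
            g.setColor(fill);
            g.fillRect(0, 0, frame.getWidth(), frame.getHeight());
        } finally {
            g.dispose();
        }
        try {
            ImageIO.write(frame, "png", stream);
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        listener.onFrame(stream.toByteArray(), frame.getWidth(), frame.getHeight());
        // Empty the stream so the next frame does not append to this one
        stream.reset();
    }
}
```

Note that `toByteArray()` copies the buffer on every frame and PNG encoding is CPU-heavy, which is likely part of why this approach feels slow.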


How to use it:

In the repo you'll find the plugin (unitylibrary-debug.aar), which you need to import into Assets/Plugins/Android/, along with BrowserView.cs and UnityThread.cs, which you can use to convert an Android WebView into a texture that Unity's RawImage can display. Fill in BrowserView.cs's public fields appropriately, and make sure your API level is set to 25 in Unity's Player Settings.

Code samples

Here's how to override the WebView's draw method to create the bitmap and PNG, and how to initialize the variables you need:

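    import android.content.Context;
    import android.graphics.Bitmap;
    import android.graphics.Canvas;
    import android.webkit.WebView;
    import java.io.ByteArrayOutputStream;

    // outputWindowWidth, outputWindowHeight, UnityBitmapCallback, canGoBack and
    // canGoForward are not shown in this excerpt; they are defined elsewhere in the plugin.
    public class BitmapWebView extends WebView {

        // Allocated once and reused for every frame
        private ByteArrayOutputStream stream;
        private ReadData array;
        private Bitmap bm;
        private Canvas bmCanvas;

        public BitmapWebView(Context context) {
            super(context);
            init();
        }

        private void init() {
            stream = new ByteArrayOutputStream();
            array = new ReadData(new byte[]{});
            bm = Bitmap.createBitmap(outputWindowWidth,
                    outputWindowHeight, Bitmap.Config.ARGB_8888);
            bmCanvas = new Canvas(bm);
        }

        @Override
        public void draw(Canvas canvas) {
            // Draw onto our own bitmap-backed canvas instead of the one passed in
            super.draw(bmCanvas);
            // Encode the frame as PNG (PNG is lossless, so the quality value is ignored)
            bm.compress(Bitmap.CompressFormat.PNG, 100, stream);
            array.Buffer = stream.toByteArray();
            UnityBitmapCallback.onFrameUpdate(array,
                    bm.getWidth(),
                    bm.getHeight(),
                    canGoBack,
                    canGoForward);
            // Empty the stream so the next frame does not append to this one
            stream.reset();
        }
    }

    // You need this class to communicate properly with Unity
    public class ReadData {
        public byte[] Buffer;

        public ReadData(byte[] buffer) {
            Buffer = buffer;
        }
    }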
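The snippet above calls `UnityBitmapCallback.onFrameUpdate(...)` without showing where that object comes from. Below is a guess at the missing glue, assuming the interface is the `PluginInterfaceBitmap` named on the Unity side and that the plugin exposes the `CreateInstance` and `SetUnityBitmapCallback` methods Unity calls. The parameter types, the `BrowserPluginSketch` class, and the `publishFrame` helper are my assumptions, not the repo's actual code. `ReadData` is repeated so the sketch compiles on its own.

```java
// Assumed shape of the interface that Unity's AndroidJavaProxy implements.
interface PluginInterfaceBitmap {
    void onFrameUpdate(ReadData data, int width, int height,
                       boolean canGoBack, boolean canGoForward);
}

// Same wrapper as above, repeated to keep this sketch self-contained.
class ReadData {
    public byte[] Buffer;

    public ReadData(byte[] buffer) {
        Buffer = buffer;
    }
}

// Hypothetical plugin entry point; only the two method names come from the Unity code.
class BrowserPluginSketch {

    // volatile: set from Unity's thread, read from the thread that draws frames
    private volatile PluginInterfaceBitmap unityBitmapCallback;

    /** Unity calls this statically to obtain the plugin object. */
    public static BrowserPluginSketch CreateInstance() {
        return new BrowserPluginSketch();
    }

    /** Unity hands over its proxy object here. */
    public void SetUnityBitmapCallback(PluginInterfaceBitmap callback) {
        unityBitmapCallback = callback;
    }

    /** Forwards a frame if a callback is registered; returns whether it was delivered. */
    public boolean publishFrame(byte[] png, int width, int height,
                                boolean canGoBack, boolean canGoForward) {
        PluginInterfaceBitmap callback = unityBitmapCallback;
        if (callback == null) {
            return false; // Unity has not registered yet, so drop the frame
        }
        callback.onFrameUpdate(new ReadData(png), width, height, canGoBack, canGoForward);
        return true;
    }
}
```

Checking for a null callback before forwarding avoids a crash if the WebView draws before Unity has registered its proxy.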

Then we pass the PNG to a Unity RawImage.

Here's the Unity receiving side:

    using UnityEngine;
    using UnityEngine.UI;

    // Class used for the callback with the texture
    class AndroidBitmapPluginCallback : AndroidJavaProxy
    {
        public AndroidBitmapPluginCallback() : base("com.unityexport.ian.unitylibrary.PluginInterfaceBitmap") { }

        public BrowserView BrowserView;

        public void onFrameUpdate(AndroidJavaObject jo, int width, int height, bool canGoBack, bool canGoForward)
        {
            // Pull the PNG bytes out of the Java ReadData object
            AndroidJavaObject bufferObject = jo.Get<AndroidJavaObject>("Buffer");
            byte[] bytes = AndroidJNIHelper.ConvertFromJNIArray<byte[]>(bufferObject.GetRawObject());
            if (bytes == null)
                return;
            if (BrowserView != null)
            {
                // Texture calls must run on Unity's main thread, so defer them to Update
                UnityThread.executeInUpdate(() => BrowserView.SetTexture(bytes, width, height, canGoBack, canGoForward));
            }
            else
                Debug.Log("BrowserView is not set");
        }
    }

    public class BrowserView : MonoBehaviour {

        private Texture2D _imageTexture2D;
        private RawImage _rawImage;
        private AndroidJavaObject _ajc;

        // Browser view needs a RawImage component to display webpages
        void Start () {
            _imageTexture2D = new Texture2D(Screen.width, Screen.height, TextureFormat.ARGB32, false);
            _rawImage = gameObject.GetComponent<RawImage>();
            _rawImage.texture = _imageTexture2D;
    #if !UNITY_EDITOR && UNITY_ANDROID
            // Get your Java class and create a new instance
            var tempAjc = new AndroidJavaClass("YOUR_LIBRARY.YOUR_CLASS");
            _ajc = tempAjc.CallStatic<AndroidJavaObject>("CreateInstance");
            // Send the callback object to Java to get frame updates
            AndroidBitmapPluginCallback androidPluginCallback = new AndroidBitmapPluginCallback {BrowserView = this};
            _ajc.Call("SetUnityBitmapCallback", androidPluginCallback);
    #endif
        }

        // Android callback to change our browser view texture
        public void SetTexture(byte[] bytes, int width, int height, bool canGoBack, bool canGoForward)
        {
            if (width != _imageTexture2D.width || height != _imageTexture2D.height)
                _imageTexture2D = new Texture2D(width, height, TextureFormat.ARGB32, false);
            // LoadImage decodes the PNG bytes into the texture
            _imageTexture2D.LoadImage(bytes);
            _imageTexture2D.Apply();
            _rawImage.texture = _imageTexture2D;
        }
    }
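`UnityThread.cs` isn't shown here. The pattern behind `executeInUpdate` is presumably a queue that any thread can post to and that gets drained once per frame on the main thread. That matters because the plugin callback arrives on a Java thread, while Unity's texture APIs can only be called from the main thread. Here is a rough sketch of that pattern, written in Java to match the plugin code (the real helper is C#, and `MainThreadQueueSketch` and `update` are hypothetical names):

```java
import java.util.Queue;
import java.util.concurrent.ConcurrentLinkedQueue;

// Illustrative only: mirrors the idea of deferring work to a per-frame update.
final class MainThreadQueueSketch {

    // Thread-safe, so callbacks on other threads can post without locking
    private final Queue<Runnable> pending = new ConcurrentLinkedQueue<>();

    /** Safe to call from any thread; the action is only queued, not run. */
    public void executeInUpdate(Runnable action) {
        pending.add(action);
    }

    /**
     * Meant to be called once per frame from the main thread.
     * Runs everything queued so far and returns how many actions ran.
     */
    public int update() {
        int ran = 0;
        Runnable action;
        while ((action = pending.poll()) != null) {
            action.run();
            ran++;
        }
        return ran;
    }
}
```

With something like this in place, the callback only enqueues `SetTexture`, and the texture work itself happens on the next frame's update.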