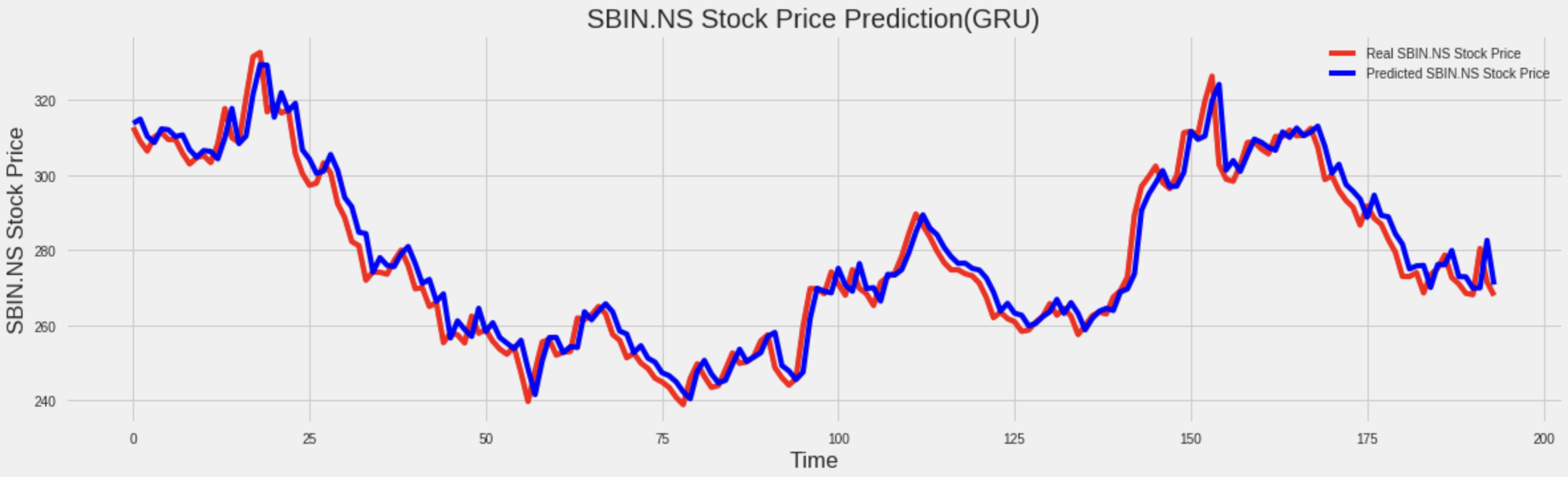

I was testing a model from a Kaggle post. The model is supposed to predict the stock price 1 day ahead from a given window of the most recent prices. After tweaking a few parameters I got a surprisingly good result, as you can see.

The mean squared error was 5.193, so overall it looks good at predicting future stock prices, right? Well, it turned out to be horrible when I took a closer look at the results.

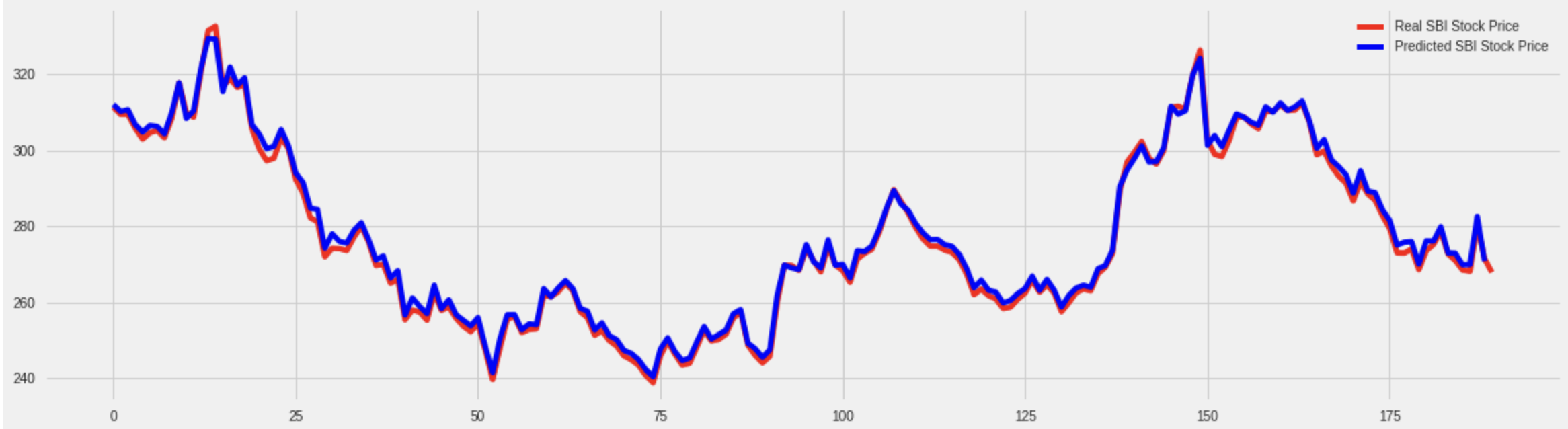

As you can see, the model is predicting the last value of the given input window, which is the current (most recent) stock price.

So I shifted the predictions one step back.

Now you can clearly see that the model is outputting the previous step's value, i.e. the last known stock price, instead of a prediction of the future price.
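
The one-step shift described above can also be checked numerically instead of by eye. The following is only a minimal sketch on synthetic data: the random-walk prices, the "echo" predictions, and the helper names `mse` and `lag_report` are all assumptions made for illustration and are not taken from my notebook. It compares the predictions against the actual series at several lags and against a naive "tomorrow equals today" baseline.

```python
import numpy as np

def mse(a, b):
    """Mean squared error between two equal-length 1-D arrays."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(np.mean((a - b) ** 2))

def lag_report(actual, predicted, max_lag=3):
    """MSE between predicted[t] and actual[t - lag] for lag = 0..max_lag.

    If the minimum is at lag 1 rather than lag 0, the predictions follow
    the previous actual value more closely than the value being forecast.
    """
    actual = np.asarray(actual, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    report = {}
    for lag in range(max_lag + 1):
        # Align predicted[lag:] with actual[:len(actual) - lag]
        report[lag] = mse(predicted[lag:], actual[:len(actual) - lag])
    return report

# Synthetic random-walk "prices" (illustrative only, not real stock data)
rng = np.random.default_rng(0)
actual = 100 + np.cumsum(rng.normal(0, 1, size=500))

# Hypothetical "model" that echoes the previous price plus a little noise
predicted = np.empty_like(actual)
predicted[0] = actual[0]
predicted[1:] = actual[:-1] + rng.normal(0, 0.1, size=len(actual) - 1)

report = lag_report(actual, predicted)
naive_mse = mse(actual[1:], actual[:-1])  # baseline: y_hat[t] = y[t-1]
best_lag = min(report, key=report.get)

print("MSE per lag:", report)
print("naive last-value baseline MSE:", naive_mse)
print("lag with lowest MSE:", best_lag)
```

If the real predictions behave like the ones in this sketch, the lowest error shows up at lag 1 and the model's MSE is about the same as the naive baseline's, which would mean the low MSE says little about actual forecasting ability.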

This is my training data

    import numpy as np

    # For each target element, use the 30 preceding elements of the training set as input
    X_train = []
    y_train = []
    previous = 30
    for i in range(previous, len(training_set_scaled)):
        X_train.append(training_set_scaled[i-previous:i, 0])
        y_train.append(training_set_scaled[i, 0])
    X_train, y_train = np.array(X_train), np.array(y_train)
    print(X_train[-1], y_train[-1])

    # GRU layers expect 3-D input: (samples, timesteps, features)
    X_train = np.reshape(X_train, (X_train.shape[0], X_train.shape[1], 1))
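
To make the windowing concrete, here is a small sketch of the same loop on a toy series with a window of 3 instead of 30. The function name `make_windows` and the toy data are assumptions for illustration only. It shows that the last entry of every input window is the value immediately before the target, which is exactly the value the predictions seem to be copying.

```python
import numpy as np

def make_windows(series, previous):
    """Return (X, y): X[k] holds `previous` consecutive values, y[k] is the value right after them."""
    series = np.asarray(series, dtype=float)
    X, y = [], []
    for i in range(previous, len(series)):
        X.append(series[i - previous:i])
        y.append(series[i])
    return np.array(X), np.array(y)

toy = np.arange(10, 20)  # 10, 11, ..., 19 (toy data, not stock prices)
X, y = make_windows(toy, previous=3)

print(X.shape, y.shape)  # (7, 3) (7,)
print(X[-1], y[-1])      # [16. 17. 18.] 19.0

# The most recent value in each window; a model that just returns this
# column would produce the "last value" predictions seen in the plots.
last_value = X[:, -1]
print(last_value)
```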

This is my model

    from keras.models import Sequential
    from keras.layers import GRU, Dropout, Dense

    # The GRU architecture
    regressorGRU = Sequential()
    # First GRU layer with Dropout regularisation
    regressorGRU.add(GRU(units=50, return_sequences=True, input_shape=(X_train.shape[1], 1)))
    regressorGRU.add(Dropout(0.2))
    # Second GRU layer
    regressorGRU.add(GRU(units=50, return_sequences=True))
    regressorGRU.add(Dropout(0.2))
    # Third GRU layer
    regressorGRU.add(GRU(units=50, return_sequences=True))
    regressorGRU.add(Dropout(0.2))
    # Fourth GRU layer (returns only the final state)
    regressorGRU.add(GRU(units=50))
    regressorGRU.add(Dropout(0.2))
    # The output layer: a single value, the next-step prediction
    regressorGRU.add(Dense(units=1))
    # Compiling the RNN
    regressorGRU.compile(optimizer='adam', loss='mean_squared_error')
    # Fitting to the training set
    regressorGRU.fit(X_train, y_train, epochs=50, batch_size=32)

Here is my full code; you can also run it on Google Colab.

So my question is: what is the reason behind this? What am I doing wrong? Any suggestions?