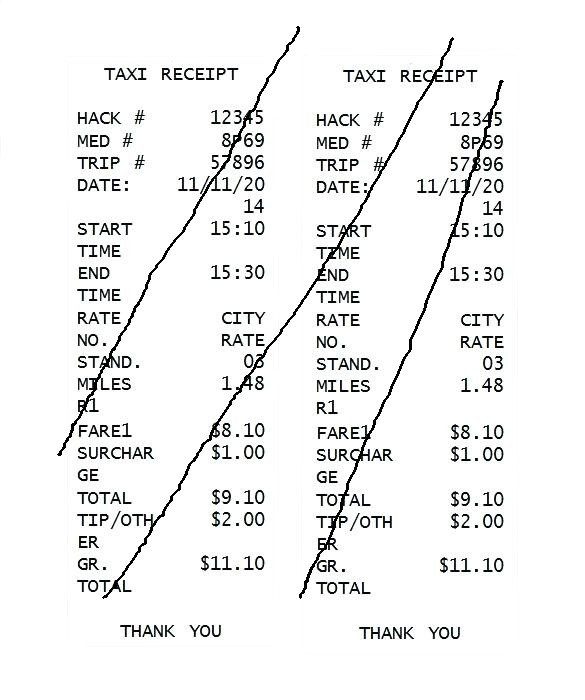

I have an image containing text, with non-straight lines drawn over it.

I want to remove those lines without damaging or removing any of the text.

For that, I used the probabilistic Hough transform:

    import cv2
    import numpy as np

    def remove_lines(filename):
        img = cv2.imread(filename)
        gray = cv2.cvtColor(img, cv2.COLOR_BGR2GRAY)
        edges = cv2.Canny(gray, 50, 200)
        lines = cv2.HoughLinesP(edges, rho=1, theta=np.pi / 180,
                                threshold=100, minLineLength=100, maxLineGap=5)
        # Draw the detected segments on the image in red.
        # HoughLinesP returns None when no segment is found.
        if lines is not None:
            for line in lines:
                x1, y1, x2, y2 = line[0]
                cv2.line(img, (x1, y1), (x2, y2), (0, 0, 255), 3)
        # imwrite picks the encoder from the file extension, so one is required
        cv2.imwrite('result.jpg', img)

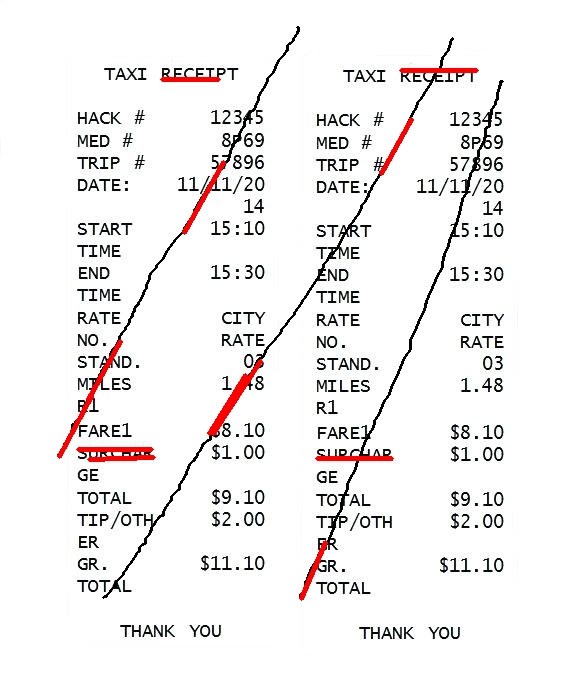

The result was not as good as I expected:

The lines were only partially detected: only their straight segments were picked up.

I tried adjusting the cv2.Canny and cv2.HoughLinesP parameters, but that didn't help either.

I also tried cv2.createLineSegmentDetector (it is not available in the latest version of OpenCV because of a license issue, so I had to downgrade OpenCV to version 4.0.0.21):

    import cv2
    import numpy as np

    def remove_lines(filename):
        im = cv2.imread(filename)
        gray = cv2.cvtColor(im, cv2.COLOR_BGR2GRAY)
        # Create an LSD detector with the default parametrization
        lsd = cv2.createLineSegmentDetector(0)
        # Detect lines in the image (position 0 of the returned tuple holds
        # the detected lines; it is None when nothing is found)
        lines = lsd.detect(gray)[0]
        # drawn_img = lsd.drawSegments(res, lines)
        for element in (lines if lines is not None else []):
            x1, y1, x2, y2 = (int(v) for v in element[0])
            # Keep only segments spanning more than 70 px horizontally or vertically
            if abs(x1 - x2) > 70 or abs(y1 - y2) > 70:
                cv2.line(im, (x1, y1), (x2, y2), (0, 0, 255), 3)
        cv2.imwrite('lsd.jpg', im)

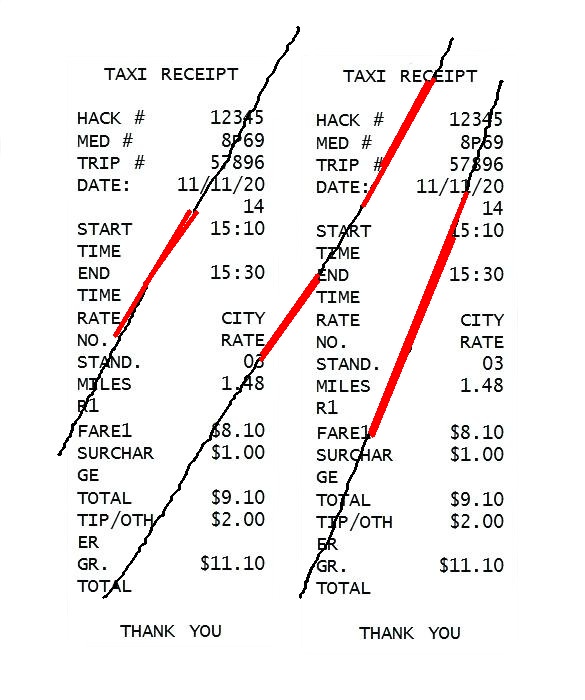

The result was a bit better, but it still didn't detect the lines in their entirety.

Any ideas on how to detect the lines more completely?