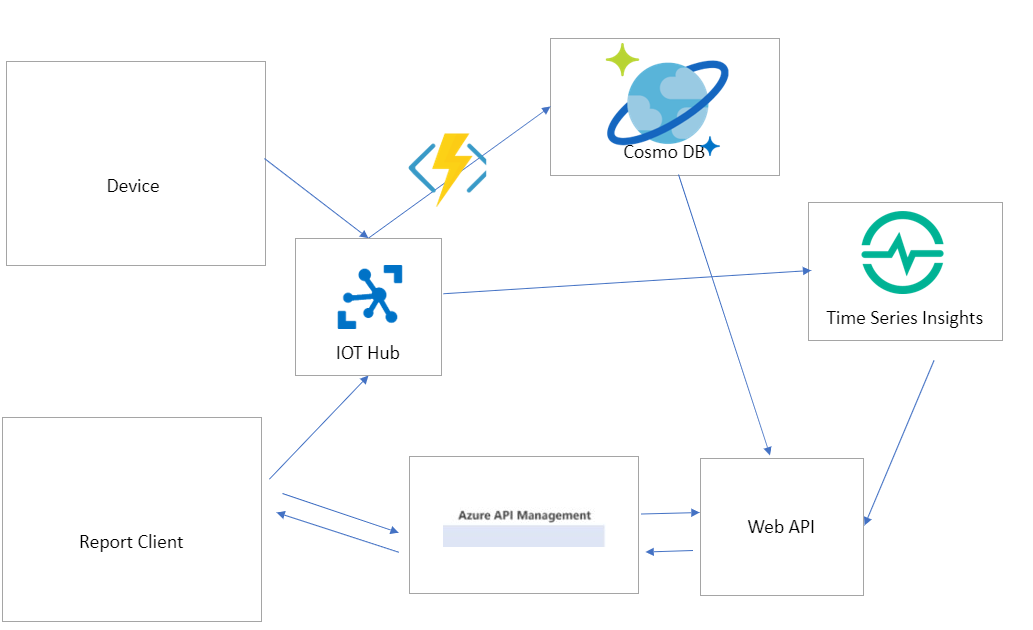

I am working on an IoT solution that will store weather data. I have been searching for several days on how to set up the backend. I am going to use Azure IoT Hub to handle device communication, but the next step is where I am stuck.

I want to store the telemetry in a database, and this is where I get confused. Some examples say I should use Azure Blob Storage, others Azure Table Storage, and others Azure SQL.

After a few years of data collection I want to start creating reports from the data, so the storage needs to handle large data volumes well.

The next problem I am stuck on is the worker that will receive the device-to-cloud (D2C) messages and store them in the database. All the Azure IoT examples use a console application, and some use Azure Stream Analytics just to forward the events to a database. What is the best practice here? The solution needs to scale, and I would like to follow best practices.
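Whatever hosts the worker, its core job is the same: parse each D2C message and write it to storage, ideally in batches rather than one insert per message. The sketch below isolates that parse-batch-write step. It assumes a hypothetical JSON payload with `deviceId`, `timestamp` and `value` fields, and uses an in-memory SQLite table purely as a stand-in for the real database; `parse_message`, `run_worker` and `sqlite_sink` are illustrative names, not part of any Azure SDK. In a real deployment the message bodies would come from IoT Hub (for example its built-in Event Hub-compatible endpoint).

```python
import json
import sqlite3
from typing import Callable, Iterable, List, Tuple

# One telemetry record: (DeviceId, Timestamp, value)
Record = Tuple[str, str, float]


def parse_message(body: bytes) -> Record:
    """Decode one D2C message body.

    Assumes a hypothetical JSON payload such as
    {"deviceId": "station-1", "timestamp": "2020-01-01T00:00:00Z", "value": 21.5}.
    """
    msg = json.loads(body)
    return (msg["deviceId"], msg["timestamp"], float(msg["value"]))


def run_worker(messages: Iterable[bytes],
               write_batch: Callable[[List[Record]], None],
               batch_size: int = 100) -> int:
    """Parse messages and pass them to write_batch in groups of batch_size,
    so the database receives bulk inserts instead of one insert per message.
    Returns the total number of records written."""
    batch: List[Record] = []
    written = 0
    for body in messages:
        batch.append(parse_message(body))
        if len(batch) >= batch_size:
            write_batch(batch)
            written += len(batch)
            batch = []
    if batch:  # flush whatever is left at the end of the stream
        write_batch(batch)
        written += len(batch)
    return written


# Demo: an in-memory SQLite table stands in for the real database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE telemetry (device_id TEXT, ts TEXT, value REAL)")
batch_sizes: List[int] = []


def sqlite_sink(batch: List[Record]) -> None:
    """Hypothetical sink: records the batch size, then bulk-inserts the batch."""
    batch_sizes.append(len(batch))
    conn.executemany("INSERT INTO telemetry VALUES (?, ?, ?)", batch)
    conn.commit()


sample = [
    json.dumps({"deviceId": "station-1",
                "timestamp": f"2020-01-01T00:0{i}:00Z",
                "value": 20 + i}).encode()
    for i in range(5)
]
count = run_worker(sample, sqlite_sink, batch_size=2)
```

With five messages and `batch_size=2`, the sink is called three times (2, 2 and 1 records). Keeping the parsing and batching separate from the sink means the same loop could sit in a long-running console app or a serverless function; which of those scales best is exactly the open question.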

Thanks in advance!

Each telemetry record consists of DeviceId, Timestamp and value. (comment by Gynophore)
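To make the reporting requirement concrete, here is a minimal sketch of a relational table for the DeviceId, Timestamp, value records plus one typical report (daily average, minimum and maximum per device). SQLite is used only because it is self-contained; the column names, the ISO 8601 UTC timestamp format and the composite key on (device_id, ts) are assumptions, and this is not a recommendation of any particular Azure storage option.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("""
    CREATE TABLE telemetry (
        device_id TEXT NOT NULL,  -- DeviceId
        ts        TEXT NOT NULL,  -- Timestamp, ISO 8601 UTC (assumed format)
        value     REAL NOT NULL,  -- value
        PRIMARY KEY (device_id, ts)  -- assumed access pattern: per device, by time
    )
""")

# Hypothetical sample readings
rows = [
    ("station-1", "2020-01-01T06:00:00Z", 10.0),
    ("station-1", "2020-01-01T18:00:00Z", 14.0),
    ("station-1", "2020-01-02T06:00:00Z", 8.0),
    ("station-2", "2020-01-01T12:00:00Z", 20.0),
]
conn.executemany("INSERT INTO telemetry VALUES (?, ?, ?)", rows)

# Daily aggregate per device: the first 10 characters of the timestamp are the date
report = conn.execute("""
    SELECT device_id, substr(ts, 1, 10) AS day,
           AVG(value), MIN(value), MAX(value)
    FROM telemetry
    GROUP BY device_id, day
    ORDER BY device_id, day
""").fetchall()
```

Reports like this only need a scan per device and time range, which is why the key is ordered (device_id, ts); over years of data the same shape could also be pre-aggregated into a summary table instead of being recomputed from raw rows.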