I am getting confused with the filter paramater, which is the first parameter in the Conv2D() layer function in keras. As I understand the filters are supposed to do things like edge detection or sharpening the image or blurring the image, but when I am defining the model as

input_shape = (32, 32, 3)

model = Sequential()

model.add( Conv2D(64, kernel_size=(5, 5), activation='relu', input_shape=input_shape, strides=(1,1), padding='same') )

model.add(MaxPooling2D(pool_size=(2, 2), strides=(2,2)))

model.add(Conv2D(64, kernel_size=(5, 5), activation='relu', input_shape=input_shape, strides=(1,1), padding='same'))

model.add(MaxPooling2D(pool_size=(2, 2), strides=(2,2)))

model.add(Conv2D(128, kernel_size=(5, 5), activation='relu', input_shape=input_shape, strides=(1,1), padding='same'))

model.add(Flatten())

model.add(Dense(3072, activation='relu'))

model.add(Dense(2048, activation='relu'))

model.add(Dense(num_classes, activation='softmax'))

I am not mentioning the the edge detection or blurring or sharpening anywhere in the Conv2D function. The input images are 32 by 32 RGB images.

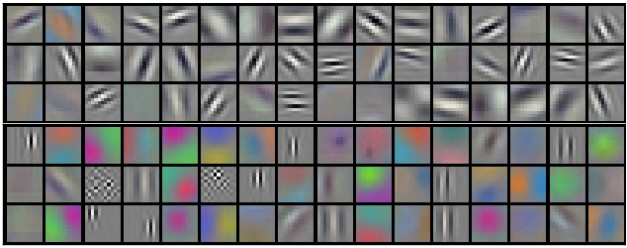

So my question is, when I define the Convolution layer as Conv2D(64, ...), does this 64 means 64 different types of filters, such as vertical edge, horizontal edge, etc, which are chosen by keras at random? if so then is the output of the convolution layer (with 64 filters and 5x5 kernel and 1x1 stride) on a 32x32 1-channel image is 64 images of 28x28 size each. How are these 64 images combined to form a single image for further layers?