I am trying to compute the derivative of the softmax activation function. I found this: https://math.stackexchange.com/questions/945871/derivative-of-softmax-loss-function but nobody there seems to give a proper derivation of how we get the answers for i = j and i != j. Could someone please explain this? I am confused by derivatives when a summation is involved, as in the denominator of the softmax activation function.

The derivative of a sum is the sum of the derivatives, i.e.:

d(f1 + f2 + f3 + f4)/dx = df1/dx + df2/dx + df3/dx + df4/dx
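Applied to the softmax denominator, this rule lets you differentiate the sum term by term. Every term with k != i does not depend on o_i, so its derivative is zero and only the k = i term survives. Written in LaTeX, with \delta_{ik} as the Kronecker delta (1 if i = k, 0 otherwise):

```latex
\frac{\partial}{\partial o_i} \sum_{k} e^{o_k}
  = \sum_{k} \frac{\partial e^{o_k}}{\partial o_i}
  = \sum_{k} \delta_{ik}\, e^{o_k}
  = e^{o_i}
```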

To derive the derivatives of p_j with respect to o_i we start with:

d_i(p_j) = d_i(exp(o_j) / Sum_k(exp(o_k)))

I decided to use d_i for the derivative with respect to o_i to make this easier to read.
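For reference, here is a minimal NumPy sketch of the function being differentiated. It assumes a 1-D input vector o. Subtracting max(o) is a common numerical-stability trick that is not part of the derivation. It leaves p unchanged because the factor exp(-max(o)) cancels between numerator and denominator.

```python
import numpy as np

def softmax(o):
    """Return p with p_j = exp(o_j) / Sum_k(exp(o_k)) for a 1-D array o."""
    o = np.asarray(o, dtype=float)
    # Shifting by max(o) cancels in the ratio but avoids overflow in exp.
    e = np.exp(o - np.max(o))
    return e / np.sum(e)

p = softmax([1.0, 2.0, 3.0])
print(p, p.sum())  # the entries of p sum to 1
```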

Writing the quotient as the product exp(o_j) * (1/Sum_k(exp(o_k))) and using the product rule, we get:

d_i(exp(o_j)) / Sum_k(exp(o_k)) + exp(o_j) * d_i(1/Sum_k(exp(o_k)))

Looking at the first term, d_i(exp(o_j)) is 0 if i != j and exp(o_j) if i = j. This can be represented with the Kronecker delta, which I will call D_ij (1 if i = j, 0 otherwise). This gives, for the first term:

= D_ij * exp(o_j) / Sum_k(exp(o_k))

Which is just our original function multiplied by D_ij

= D_ij * p_j
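As a small numerical illustration, the sketch below builds the first term as a matrix whose entry [i, j] is D_ij * p_j. It assumes NumPy and an arbitrary example vector o. Because D_ij zeroes every off-diagonal entry, the result is just diag(p).

```python
import numpy as np

o = np.array([0.5, -1.0, 2.0])          # arbitrary example input
p = np.exp(o) / np.sum(np.exp(o))

# Kronecker delta D_ij: 1 on the diagonal, 0 elsewhere.
D = np.eye(len(o))

# Broadcasting p as a row vector multiplies column j by p_j,
# so entry [i, j] is D_ij * p_j.
first_term = D * p[np.newaxis, :]
print(np.allclose(first_term, np.diag(p)))
```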

For the second term, we apply the power rule (because the sum is in the denominator) together with the chain rule. When we differentiate each element of the sum individually, the only non-zero term is the one with k = i, which gives:

= -exp(o_j) * Sum_k(d_i(exp(o_k))) / Sum_k(exp(o_k))^2

= -exp(o_j) * exp(o_i) / Sum_k(exp(o_k))^2

= -(exp(o_j) / Sum_k(exp(o_k))) * (exp(o_i) / Sum_k(exp(o_k)))

= -p_j * p_i
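The power-rule step can be checked numerically. The sketch below assumes NumPy, an arbitrary example vector o, and a step size h = 1e-6. It compares a central finite difference of 1/Sum_k(exp(o_k)) with -exp(o_i)/Sum_k(exp(o_k))^2, then multiplies by exp(o_j) to recover -p_j * p_i for every (i, j). The helper name inv_sum is hypothetical and is used only for illustration.

```python
import numpy as np

o = np.array([0.5, -1.0, 2.0])   # arbitrary example input
S = np.sum(np.exp(o))
p = np.exp(o) / S
h = 1e-6                          # assumed finite-difference step

def inv_sum(o):
    # Hypothetical helper: 1 / Sum_k(exp(o_k)), the factor in the second term
    return 1.0 / np.sum(np.exp(o))

# Central finite difference of 1/S with respect to each o_i
num = np.array([(inv_sum(o + h * e) - inv_sum(o - h * e)) / (2 * h)
                for e in np.eye(len(o))])

# Power rule + chain rule: d_i(1/S) = -(1/S^2) * d_i(S) = -exp(o_i) / S^2
analytic = -np.exp(o) / S**2
print(np.allclose(num, analytic, atol=1e-8))

# Entry [i, j] = exp(o_j) * d_i(1/S) = -p_j * p_i
second_term = np.exp(o)[np.newaxis, :] * analytic[:, np.newaxis]
print(np.allclose(second_term, -np.outer(p, p)))
```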

Putting the two together we get the surprisingly simple formula:

D_ij * p_j - p_j * p_i
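In matrix form, the formula says the Jacobian is diag(p) minus the outer product of p with itself. The NumPy sketch below checks this against central finite differences. The function names, the example input, and the step size are assumptions made for illustration. Row i holds the derivatives with respect to o_i, matching d_i(p_j). The matrix is symmetric, so the other index convention gives the same result.

```python
import numpy as np

def softmax(o):
    e = np.exp(o - np.max(o))    # max-shift for numerical stability
    return e / np.sum(e)

def softmax_jacobian(o):
    """J[i, j] = d_i(p_j) = D_ij * p_j - p_j * p_i."""
    p = softmax(o)
    return np.diag(p) - np.outer(p, p)

def numerical_jacobian(f, o, h=1e-6):
    # Row i is the central finite difference of f with respect to o_i.
    return np.array([(f(o + h * e) - f(o - h * e)) / (2 * h)
                     for e in np.eye(len(o))])

o = np.array([0.5, -1.0, 2.0])   # arbitrary example input
J = softmax_jacobian(o)
print(np.allclose(J, numerical_jacobian(softmax, o), atol=1e-8))
```

Each row of J sums to zero, because p_i - p_i * Sum_j(p_j) = 0.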

If you really want, we can split it into the i = j and i != j cases:

i = j: D_ii * p_i - p_i * p_i = p_i - p_i * p_i = p_i * (1 - p_i)

i != j: D_ij * p_j - p_j * p_i = -p_i * p_j

Which is our answer.
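The two cases can also be written out element by element. This NumPy sketch uses an arbitrary example input. It fills the Jacobian with an explicit i = j / i != j branch and confirms that the result equals the compact form D_ij * p_j - p_j * p_i.

```python
import numpy as np

o = np.array([0.5, -1.0, 2.0])   # arbitrary example input
p = np.exp(o) / np.sum(np.exp(o))
n = len(p)

J = np.empty((n, n))
for i in range(n):
    for j in range(n):
        if i == j:
            J[i, j] = p[i] * (1 - p[i])   # i = j case
        else:
            J[i, j] = -p[i] * p[j]        # i != j case

# Same matrix as the compact form D_ij * p_j - p_j * p_i
print(np.allclose(J, np.diag(p) - np.outer(p, p)))
```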

d_i(exp(o_j)) / Sum_k(exp(o_k)) + exp(o_j) * d_i(1/Sum_k(exp(o_k)))? Is there a missing exp before the last o_k? –

Thieve i and j refer to the elements of the Jacobian matrix. You seem to think that the 'thing' that goes to 0 is the derivative, but it is just one part of the partial derivative. You wrote out each derivative manually (for 4 inputs), whereas I treated the general case. –

Tarango d_i(exp(o_j)), which is part of the subexpression d_i(exp(o_j)) / Sum_k(exp(o_k)). Look carefully at the parentheses and you will see that this is the derivative of exp(o_j) with respect to o_i, divided by the sum over k of exp(o_k). The derivative of Sum_k(exp(o_k)) with respect to o_i is taken care of in the second part of the product rule expansion. Does this help clear things up? –

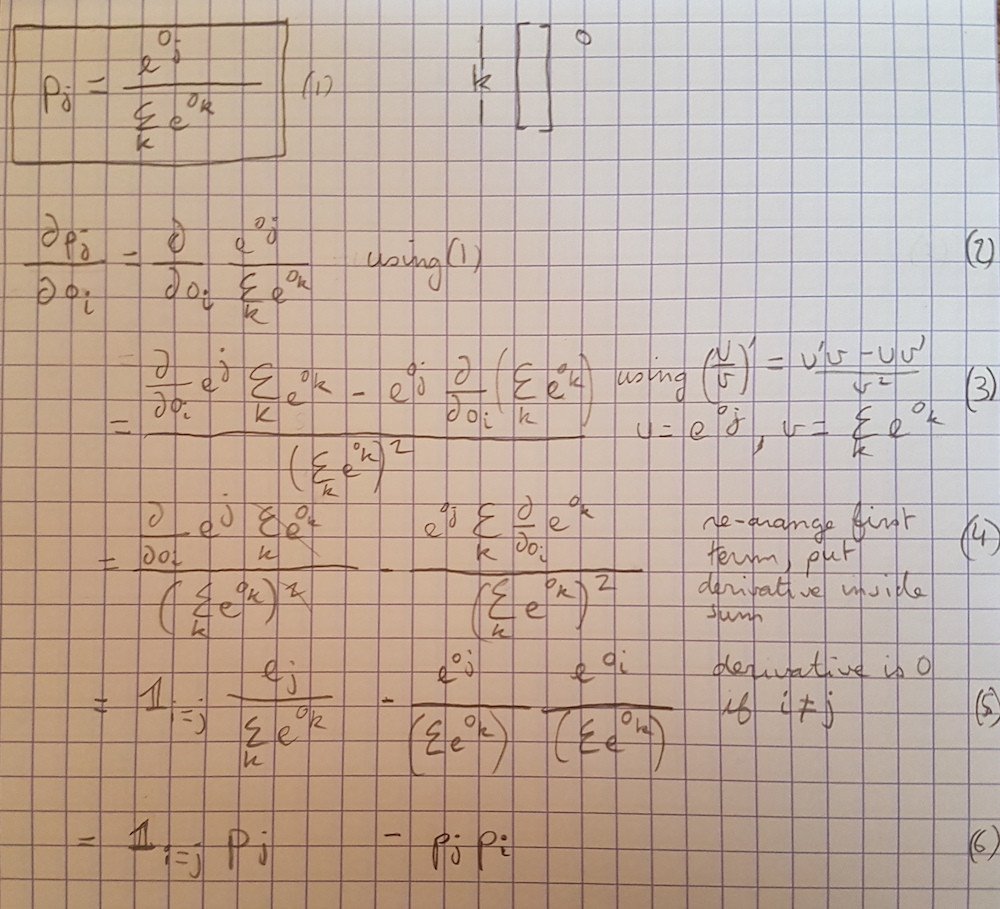

Tarango For what it's worth, here is my derivation based on SirGuy's answer. (Feel free to point out errors if you find any.)

Why does Σ_k (d e^{o_k} / do_i) evaluate to e^{o_i} from step 4 to step 5? I'd be very grateful for any insights you can offer on that question. –

Monopoly When k = i, the term is d/do_i e^{o_i}, which is e^{o_i}. When k != i, you get a bunch of zeroes. –
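To see the "bunch of zeroes" concretely, the NumPy sketch below finite-differences every e^{o_k} with respect to a single o_i. It uses an arbitrary example vector, picks i = 1 for illustration, and assumes a step size h = 1e-6. Only the k = i entry is non-zero, so the sum over k collapses to e^{o_i}.

```python
import numpy as np

o = np.array([0.5, -1.0, 2.0])   # arbitrary example input
i, h = 1, 1e-6                    # index i and step size chosen for illustration
step = h * np.eye(len(o))[i]      # perturb only o_i

# d e^{o_k} / d o_i for every k, by central finite differences
per_k = (np.exp(o + step) - np.exp(o - step)) / (2 * h)
print(per_k)                       # zero everywhere except at index i
print(per_k.sum(), np.exp(o[i]))   # the sum over k collapses to e^{o_i}
```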
