The short answer for this set of images: use OpenCV matchShapes method I2, but re-code matchShapes with a smaller "eps". double eps = 1.e-20; is more than small enough.

I'm a high school robotics team mentor, and I thought OpenCV's matchShapes was just what we needed to improve the robot's vision (scale, translation and rotation invariant, and easy for the students to use in existing OpenCV code). I came across this article a couple of hours into my research and was horrified! How could matchShapes ever work for us given these results? I was incredulous that the results were so poor.

I coded my own matchShapes (in Java, since that's what the students wanted to use) to see the effect of changing eps. That small value apparently protects the log10 function from zero, but it also hides BIG discrepancies by calling them a perfect match, the opposite of what they really are. I couldn't find the basis for the value. I changed eps from the OpenCV value of 1.e-5 to 1.e-20 and got good results, but the process is still disconcerting.
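A minimal Java sketch of this kind of re-coding is shown here. It assumes the seven Hu moments of each shape are already available as plain double arrays (for example, copied out of the result of Imgproc.HuMoments), and it follows my understanding of the three OpenCV index formulas, with eps passed in as a parameter instead of being fixed. The class name `HuMatch` and the sample moment values are hypothetical, chosen only to show the effect of eps.

```java
/** Sketch: the three matchShapes indices (I1, I2, I3) with a caller-supplied eps. */
final class HuMatch {
    private HuMatch() {}

    /**
     * @param ma     seven Hu moments of shape A
     * @param mb     seven Hu moments of shape B
     * @param method 1, 2 or 3 (I1, I2, I3)
     * @param eps    pairs where either magnitude is <= eps are ignored
     */
    static double match(double[] ma, double[] mb, int method, double eps) {
        if (ma.length != 7 || mb.length != 7) {
            throw new IllegalArgumentException("expected 7 Hu moments per shape");
        }
        if (method < 1 || method > 3) {
            throw new IllegalArgumentException("method must be 1, 2 or 3");
        }
        double result = 0.0;
        for (int i = 0; i < 7; i++) {
            double absA = Math.abs(ma[i]);
            double absB = Math.abs(mb[i]);
            // A pair is used only if BOTH magnitudes exceed eps. An ignored
            // pair adds nothing to the result, so it looks like a perfect match.
            if (absA <= eps || absB <= eps) {
                continue;
            }
            // Signed log scaling: sign(h) * log10(|h|).
            double la = Math.signum(ma[i]) * Math.log10(absA);
            double lb = Math.signum(mb[i]) * Math.log10(absB);
            switch (method) {
                case 1:  // I1: sum of differences of reciprocals
                    result += Math.abs(1.0 / lb - 1.0 / la);
                    break;
                case 2:  // I2: sum of differences
                    result += Math.abs(lb - la);
                    break;
                default: // I3: largest difference as a fraction of A's term
                    result = Math.max(result, Math.abs((la - lb) / la));
                    break;
            }
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical moments: equal in the two large terms, different in the small ones.
        double[] a = {0.2, 0.01, 1e-8, 1e-9, 0, 0, 0};
        double[] b = {0.2, 0.01, 1e-6, 1e-7, 0, 0, 0};
        System.out.println("eps = 1e-5 : " + match(a, b, 2, 1e-5));
        System.out.println("eps = 1e-20: " + match(a, b, 2, 1e-20));
    }
}
```

With eps = 1.e-5 the small moments are ignored and these two different shapes score 0 (a "perfect" match); with eps = 1.e-20 the same pair scores about 4. Note that a moment with magnitude exactly 1 has a log of 0, which makes I1 and I3 divide by zero; real Hu moments are normally far smaller than that.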

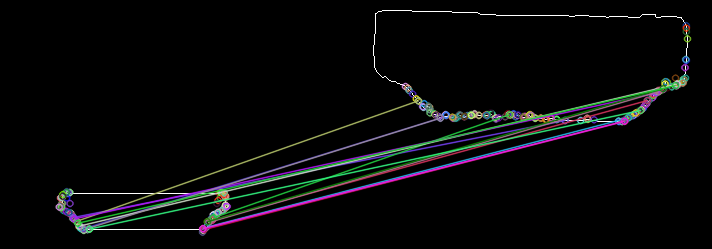

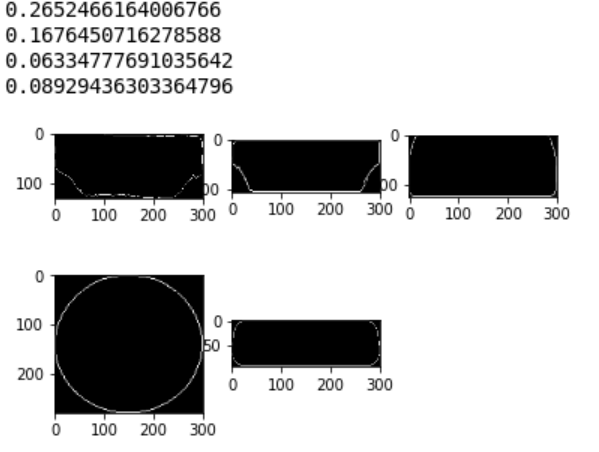

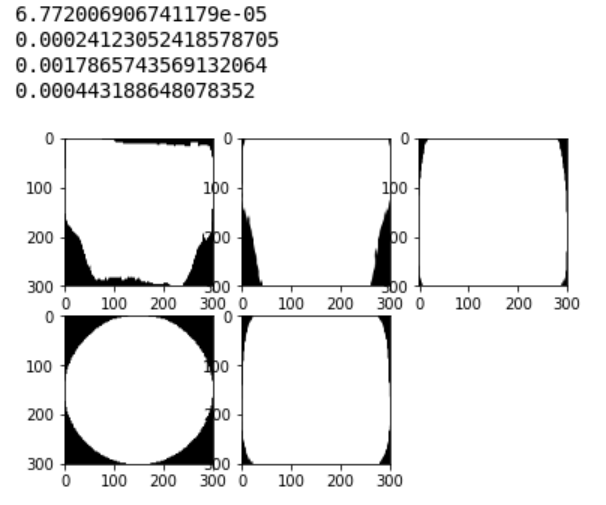

It's wonderful but scary that, given the right answer, we can contort a process to get it. The image below shows all 3 methods of Hu moment comparison; methods 2 and 3 do a pretty good job.

My process was: save the images above, convert to binary 1 channel, dilate 1, erode 1, findContours, then matchShapes with eps = 1.e-20.

Method 2: target HDMI with itself = 0, HDMI = 1.15, DVI = 11.48, DB25 = 27.37, DIN = 74.82

Method 3: target HDMI with itself = 0, HDMI = 0.34, DVI = 0.48, DB25 = 2.33, DIN = 3.29

Contours and Hu moment comparisons for the 3 matchShapes methods:

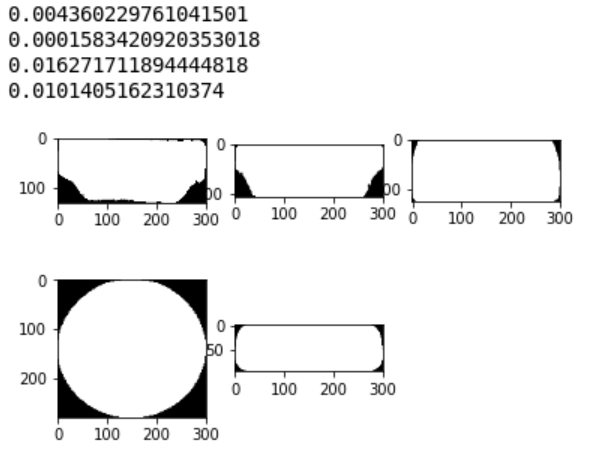

I continued my naive research (I have little background in statistics) and found various other ways to normalize and compare. I couldn't figure out the details of the Pearson correlation coefficient and other covariance methods, and maybe they aren't appropriate. I tested two more normalization methods and another matching method.

OpenCV normalizes with the log10 function for all three of its matching computations.

I tried normalizing each pair of Hu moments by the ratio to that pair's maximum value, max(Ai,Bi), and I tried normalizing each pair to a vector length of 1 (divide by the square root of the sum of the squares).

I applied each of those two new normalizations before computing the angle between the two 7-dimensional Hu moment vectors (the cosine theta method) and before computing the sum of the element pair differences, similar to OpenCV method I2.
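The two normalizations and two comparisons described above can be sketched in Java as follows. This is one reading of the description, not a confirmed reproduction: it assumes each element pair (Ai, Bi) is scaled on its own, that absolute values are used when taking the pair maximum, and that a pair of zeros is left as zeros. The class and method names are hypothetical.

```java
/** Sketch: alternative normalizations and comparisons for two Hu moment vectors. */
final class HuAlternatives {
    private HuAlternatives() {}

    /** Divide each pair (a[i], b[i]) by max(|a[i]|, |b[i]|). Returns {a', b'}. */
    static double[][] normalizeByPairMax(double[] a, double[] b) {
        double[][] out = new double[2][a.length];
        for (int i = 0; i < a.length; i++) {
            double scale = Math.max(Math.abs(a[i]), Math.abs(b[i]));
            if (scale > 0) {              // a pair of zeros stays (0, 0)
                out[0][i] = a[i] / scale;
                out[1][i] = b[i] / scale;
            }
        }
        return out;
    }

    /** Divide each pair (a[i], b[i]) by sqrt(a[i]^2 + b[i]^2). Returns {a', b'}. */
    static double[][] normalizeByPairLength(double[] a, double[] b) {
        double[][] out = new double[2][a.length];
        for (int i = 0; i < a.length; i++) {
            double scale = Math.hypot(a[i], b[i]);
            if (scale > 0) {              // a pair of zeros stays (0, 0)
                out[0][i] = a[i] / scale;
                out[1][i] = b[i] / scale;
            }
        }
        return out;
    }

    /** Cosine of the angle between two vectors; 1 is the best match. NaN if a vector is all zeros. */
    static double cosine(double[] a, double[] b) {
        double dot = 0, na = 0, nb = 0;
        for (int i = 0; i < a.length; i++) {
            dot += a[i] * b[i];
            na += a[i] * a[i];
            nb += b[i] * b[i];
        }
        return dot / Math.sqrt(na * nb);
    }

    /** Sum of absolute element pair differences; 0 is the best match. */
    static double sumAbsDiff(double[] a, double[] b) {
        double sum = 0;
        for (int i = 0; i < a.length; i++) {
            sum += Math.abs(a[i] - b[i]);
        }
        return sum;
    }

    public static void main(String[] args) {
        // Hypothetical Hu moments for two shapes.
        double[] a = {0.2, 0.01, 1e-8, 1e-9, 1e-17, 1e-10, -1e-17};
        double[] b = {0.3, 0.05, 1e-6, 1e-7, 1e-13, 1e-8, 1e-14};
        double[][] byMax = normalizeByPairMax(a, b);
        double[][] byLen = normalizeByPairLength(a, b);
        System.out.println("ratio to pair max, cosine:   " + cosine(byMax[0], byMax[1]));
        System.out.println("unit pair length,  cosine:   " + cosine(byLen[0], byLen[1]));
        System.out.println("ratio to pair max, sum diff: " + sumAbsDiff(byMax[0], byMax[1]));
        System.out.println("unit pair length,  sum diff: " + sumAbsDiff(byLen[0], byLen[1]));
    }
}
```

Because every pair is rescaled to a similar magnitude, the tiny higher-order moments carry as much weight as the large ones, without needing log10 or an eps cutoff.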

My four new concoctions worked well but didn't contribute anything beyond OpenCV I2 with the "corrected" eps, except that the range of values was smaller; the shapes were still ordered the same.

Notice that the I3 method is not symmetric - swapping the matchShapes argument order changes the results. For this set of images put the moments of the "UNKNOWN" as the first argument and compare with the list of known shapes as the second argument for best results. The other way around changes the results to the "wrong" answer!
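The asymmetry follows from the documented I3 formula, max over i of |mAi - mBi| / |mAi|, which divides by the first shape's term only. A small Java sketch with hypothetical single-moment values makes this concrete; the class name `I3Asymmetry` is an assumption for illustration.

```java
/** Sketch: I3 depends on argument order because it divides by A's term only. */
final class I3Asymmetry {
    private I3Asymmetry() {}

    static double i3(double[] ma, double[] mb, double eps) {
        double result = 0.0;
        for (int i = 0; i < ma.length; i++) {
            double absA = Math.abs(ma[i]);
            double absB = Math.abs(mb[i]);
            if (absA <= eps || absB <= eps) {
                continue; // pair ignored, as in the eps check described earlier
            }
            double la = Math.signum(ma[i]) * Math.log10(absA);
            double lb = Math.signum(mb[i]) * Math.log10(absB);
            // The difference is expressed as a fraction of A's term, not B's.
            result = Math.max(result, Math.abs((la - lb) / la));
        }
        return result;
    }

    public static void main(String[] args) {
        // Hypothetical moments: log10 terms are about -2 and -4.
        double[] unknown = {1e-2, 0, 0, 0, 0, 0, 0};
        double[] known   = {1e-4, 0, 0, 0, 0, 0, 0};
        System.out.println("i3(unknown, known) = " + i3(unknown, known, 1e-20));
        System.out.println("i3(known, unknown) = " + i3(known, unknown, 1e-20));
    }
}
```

Here the difference between the log terms is 2 in both directions, but it is divided by 2 in one order and by 4 in the other, giving about 1 and about 0.5.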

That I attempted 7 matching methods is merely coincidental with the number of Hu moments, which is also 7.

Description of the matching indices for the 7 different computations

|Id|normalization            |matching index computation                |best value|
|--|-------------------------|------------------------------------------|----------|
|I1|OpenCV log               |sum of element pair reciprocal differences|0|
|I2|OpenCV log               |sum of element pair differences           |0|
|I3|OpenCV log               |maximum difference as a fraction of A     |0|
|T4|ratio to element pair max|cosine of angle between vectors           |1|
|T5|unit vector              |cosine of angle between vectors           |1|
|T6|ratio to element pair max|sum of element pair differences           |0|
|T7|unit vector              |sum of element pair differences           |0|

Matching indices results for 7 different computations for each of the 5 images

|image          | I1  | I2  | I3  | T4  | T5  | T6  | T7  |
|---------------|-----|-----|-----|-----|-----|-----|-----|
|HDMI 0         | 1.13| 1.15| 0.34| 0.93| 0.92| 2.02| 1.72|
|DB25 1         | 1.37|27.37| 2.33| 0.36| 0.32| 5.79| 5.69|
|DVI 2          | 0.36|11.48| 0.48| 0.53| 0.43| 5.06| 5.02|
|DIN5 3         | 1.94|74.82| 3.29| 0.38| 0.34| 6.39| 6.34|
|unknown(HDMI) 4| 0.00| 0.00| 0.00| 1.00| 1.00| 0.00| 0.00|

(In the last row, the unknown image matches itself.)

[Created OpenCV issue 16997 to address this weakness in matchShapes.]