Let's see how the output changes when we play around with the arguments:

```python
import numpy as np
import tensorflow as tf

tf.keras.backend.clear_session()
tf.set_random_seed(42)  # TF 1.x API; in TF 2.x use tf.random.set_seed(42)

# Two sequences of 3 time steps with 3 features each; the second one ends with an all-zero step
X = np.array([[[1,2,3],[4,5,6],[7,8,9]],[[1,2,3],[4,5,6],[0,0,0]]], dtype=np.float32)

model = tf.keras.Sequential([
    tf.keras.layers.LSTM(4, return_sequences=True, stateful=True,
                         recurrent_initializer='glorot_uniform')])

print(tf.keras.backend.get_value(model(X)).shape)
# (2, 3, 4)

print(tf.keras.backend.get_value(model(X)))
# [[[-0.16141939 0.05600287 0.15932009 0.15656665]
#   [-0.10788933 0. 0.23865232 0.13983202]
#   [-0. 0. 0.23865232 0.0057992 ]]
#
#  [[-0.16141939 0.05600287 0.15932009 0.15656665]
#   [-0.10788933 0. 0.23865232 0.13983202]
#   [-0.07900514 0.07872108 0.06463861 0.29855606]]]
```

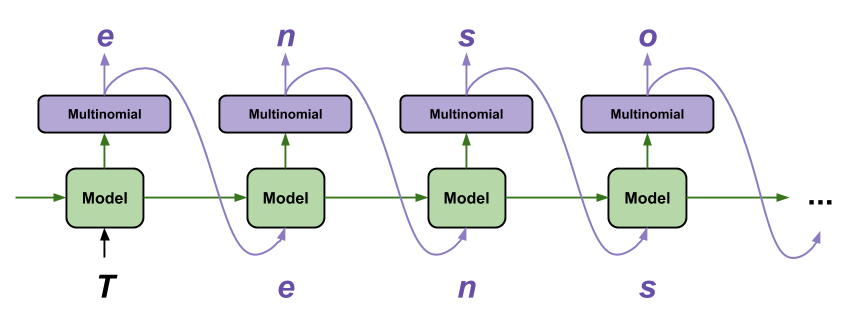

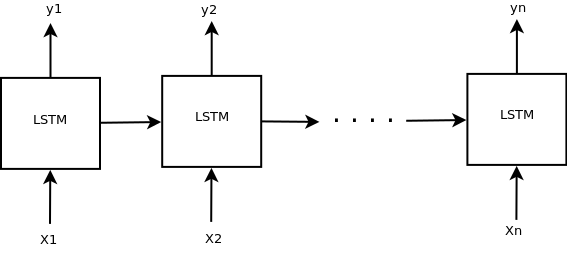

So, if `return_sequences` is set to `True`, the model returns the full output sequence: one output per time step, with shape `(batch, timesteps, units)`, here `(2, 3, 4)`.

```python
tf.keras.backend.clear_session()
tf.set_random_seed(42)  # TF 1.x API; in TF 2.x use tf.random.set_seed(42)

# Same layer and input X as above, but only the output of the last time step is returned
model = tf.keras.Sequential([
    tf.keras.layers.LSTM(4, return_sequences=False, stateful=True,
                         recurrent_initializer='glorot_uniform')])

print(tf.keras.backend.get_value(model(X)).shape)
# (2, 4)

print(tf.keras.backend.get_value(model(X)))
# [[-0. 0. 0.23865232 0.0057992 ]
#  [-0.07900514 0.07872108 0.06463861 0.29855606]]
```

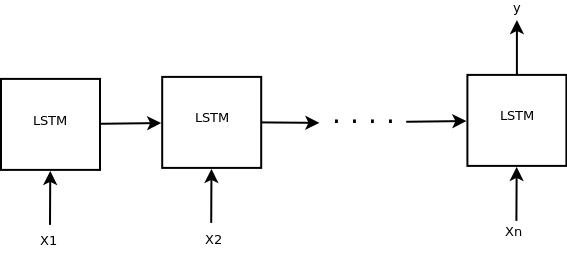

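So, as the documentation states, if `return_sequences` is set to `False`, the model returns only the last output, with shape `(batch, units)`. Each row is exactly the last time step of the corresponding sequence in the previous output.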
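To make the relationship between the two settings concrete, here is a minimal NumPy sketch of an LSTM forward pass. It is only an illustration under my own assumptions, not Keras' implementation: the helper `lstm`, the random weights and the i, f, c, o gate order are chosen for the example, and Keras' initializers are not reproduced, so the numbers will not match the printed ones. What it does show is that `return_sequences=False` is just the last slice of the full sequence, and that identical leading inputs give identical leading outputs, as in the printout above.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm(x, W, U, b, return_sequences=False):
    # Hypothetical helper. x: (batch, timesteps, features); W: (features, 4*units);
    # U: (units, 4*units); b: (4*units,)
    batch, timesteps, _ = x.shape
    units = U.shape[0]
    h = np.zeros((batch, units))  # hidden state, starts at zero
    c = np.zeros((batch, units))  # cell state, starts at zero
    outputs = []
    for t in range(timesteps):
        z = x[:, t, :] @ W + h @ U + b
        i, f, g, o = np.split(z, 4, axis=1)  # input, forget, candidate, output gates
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        outputs.append(h)  # the output at step t is the hidden state h_t
    seq = np.stack(outputs, axis=1)  # (batch, timesteps, units)
    return seq if return_sequences else seq[:, -1, :]

rng = np.random.default_rng(42)
X = np.array([[[1,2,3],[4,5,6],[7,8,9]],[[1,2,3],[4,5,6],[0,0,0]]], dtype=np.float32)
units = 4
W = rng.normal(scale=0.1, size=(3, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

full = lstm(X, W, U, b, return_sequences=True)
last = lstm(X, W, U, b, return_sequences=False)
print(full.shape)  # (2, 3, 4)
print(last.shape)  # (2, 4)
print(np.allclose(last, full[:, -1, :]))  # True
```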

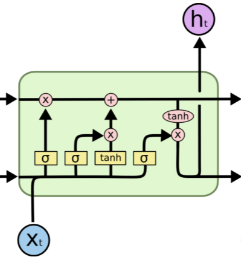

As for `stateful`, it is a bit harder to dive into. Essentially, when you feed multiple batches of inputs, the last state (hidden and cell state) of the sample at index i in one batch is used as the initial state of the sample at index i in the next batch, instead of starting again from zeros. However, I think you will be more than fine going with the default setting, `stateful=False`.
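A minimal NumPy sketch of what carrying the state means, again with a hypothetical `lstm` helper and random weights rather than Keras' own code: splitting each sequence across two consecutive batches and passing the final `(h, c)` of sample i on to sample i of the next batch reproduces the uninterrupted run, while restarting the second batch from zeros (the non-stateful behaviour) does not. In `tf.keras` the carried state is cleared with `model.reset_states()`, typically between independent sequences.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm(x, W, U, b, state=None):
    # Hypothetical helper: returns the full output sequence and the final (h, c) state.
    batch, timesteps, _ = x.shape
    units = U.shape[0]
    if state is None:  # non-stateful behaviour: every call starts from zero state
        state = (np.zeros((batch, units)), np.zeros((batch, units)))
    h, c = state
    outputs = []
    for t in range(timesteps):
        z = x[:, t, :] @ W + h @ U + b
        i, f, g, o = np.split(z, 4, axis=1)
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
        outputs.append(h)
    return np.stack(outputs, axis=1), (h, c)

rng = np.random.default_rng(0)
units = 4
W = rng.normal(scale=0.1, size=(3, 4 * units))
U = rng.normal(scale=0.1, size=(units, 4 * units))
b = np.zeros(4 * units)

X = np.array([[[1,2,3],[4,5,6],[7,8,9]],[[1,2,3],[4,5,6],[0,0,0]]], dtype=np.float32)
whole, _ = lstm(X, W, U, b)  # both sequences processed in one go

# "Stateful": time steps 0-1 as the first batch, step 2 as the next batch,
# handing the final state of each sample to the same sample index.
part1, state = lstm(X[:, :2], W, U, b)
part2, _ = lstm(X[:, 2:], W, U, b, state=state)
print(np.allclose(np.concatenate([part1, part2], axis=1), whole))  # True

# Non-stateful: the second batch starts again from zero state
fresh, _ = lstm(X[:, 2:], W, U, b)
print(np.allclose(fresh, whole[:, 2:]))  # compare with the uninterrupted run
```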

`stateful=True` shares the context across batches, not the predictions. – Goatskin