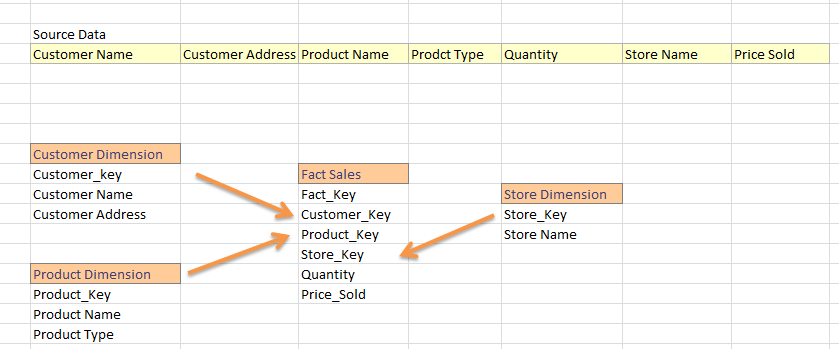

I am from SQL Datawarehouse world where from a flat feed I generate dimension and fact tables. In general data warehouse projects we divide feed into fact and dimension. Ex:

I am completely new to Hadoop and I came to know that I can build data warehouse in hive. Now, I am familiar with using guid which I think is applicable as a primary key in hive. So, the below strategy is the right way to load fact and dimension in hive?

- Load source data into a hive table; let say Sales_Data_Warehouse

Generate Dimension from sales_data_warehouse; ex:

SELECT New_Guid(), Customer_Name, Customer_Address From Sales_Data_Warehouse

When all dimensions are done then load the fact table like

SELECT New_Guid() AS 'Fact_Key', Customer.Customer_Key, Store.Store_Key... FROM Sales_Data_Warehouse AS 'source' JOIN Customer_Dimension Customer on source.Customer_Name = Customer.Customer_Name AND source.Customer_Address = Customer.Customer_Address JOIN Store_Dimension AS 'Store' ON Store.Store_Name = Source.Store_Name JOIN Product_Dimension AS 'Product' ON .....

Is this the way I should load my fact and dimension table in hive?

Also, in general warehouse projects we need to update dimensions attributes (ex: Customer_Address is changed to something else) or have to update fact table foreign key (rarely, but it does happen). So, how can I have a INSERT-UPDATE load in hive. (Like we do Lookup in SSIS or MERGE Statement in TSQL)?

full joinfor hive version <0.14. See this: https://mcmap.net/q/1012753/-hive-best-way-to-do-incremetal-updates-on-a-main-table – Bedew