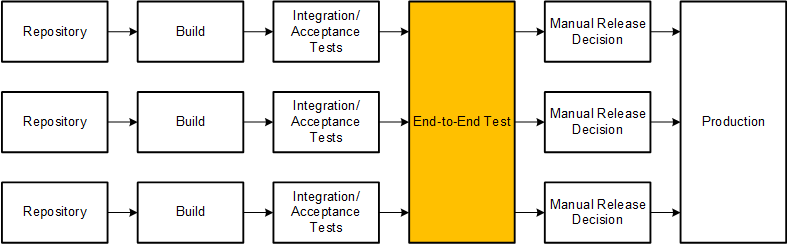

My team develops three microservices that work together to implement one business scenario. They communicate via REST and RabbitMQ. The setup looks like the one in Toby Clemson's presentation on microservice testing.

Each microservice has its own continuous delivery pipeline. They are delivery pipelines, not deployment pipelines: there is a manual release decision at the end.

How do I include an end-to-end test for the business scenario, i.e., one that spans all three microservices, in the delivery pipelines?

My team suggested this:

We add one shared end-to-end stage that deploys all three microservices and runs the end-to-end test against them. Each time one of the pipelines reaches this stage, it deploys and tests. A semaphore ensures that the pipelines pass through the stage one at a time. A failure stops all three pipelines.
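To make the proposal concrete, here is a minimal Python model of the shared stage. It is only a sketch under assumptions: the service names, the `run_e2e_test` placeholder and its "-bad" failure rule are hypothetical, and a real CI server would use its own locking mechanism rather than a `threading.Semaphore`. It shows the two properties the proposal relies on: one pipeline at a time holds the stage, and a single failure blocks every pipeline.

```python
import threading

# Binary semaphore: only one pipeline may deploy and test at a time.
e2e_stage = threading.Semaphore(1)
# Set as soon as any end-to-end run fails; afterwards every pipeline is blocked.
stop_all = threading.Event()

# Versions currently deployed to the shared end-to-end environment (hypothetical names).
deployed = {"service-a": "1.0", "service-b": "1.0", "service-c": "1.0"}
log = []  # (service, version, outcome) for each pipeline that reached the stage


def run_e2e_test(versions):
    """Placeholder for the real end-to-end test: fails if any version ends in '-bad'."""
    return not any(v.endswith("-bad") for v in versions.values())


def shared_e2e_stage(service, version):
    """Deploy `version` of `service` next to the others and test the whole combination."""
    with e2e_stage:  # pipelines queue here, one after the other
        if stop_all.is_set():
            # An earlier failure in any pipeline blocks this one, even for urgent fixes.
            log.append((service, version, "blocked"))
            return False
        deployed[service] = version
        passed = run_e2e_test(deployed)  # tests exactly the combination deployed right now
        log.append((service, version, "passed" if passed else "failed"))
        if not passed:
            stop_all.set()  # a failure stops all three pipelines
        return passed
```

For example, if `service-b` fails the stage, a later call for a `service-c` bug fix returns `False` with outcome `"blocked"` and never gets deployed, which is exactly the coupling the objections below are about.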

To me, this seems to sacrifice all the independence that the microservice architecture gains us in the first place:

The end-to-end stage is a bottleneck. A fast pipeline could starve slower ones: it acquires the end-to-end stage more often, so the others have to wait before they can run their tests.

A failure in one pipeline would stop the other pipelines from delivering, which also prevents them from shipping urgent bug fixes.

The solution doesn't adapt to new business scenarios that need different combinations of microservices. We would either end up with a super-stage that wires together all microservices, or each business scenario would require its own new end-to-end stage.

The end-to-end stage yields only a narrow result: it confirms only that one exact combination of microservice versions works together. If production runs different versions, there is no guarantee that they work together as well.

The stage also conflicts with the manual release decision at the end: what if a build passes the end-to-end stage but we decide not to release it to production? Production would then contain a different version of that microservice than the end-to-end environment, so later end-to-end runs would test against a version that is not in production, skewing their results.
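The last two objections can be illustrated with a small Python sketch that records which version combinations have passed end to end and compares them with production. The service names, version numbers and helper functions are hypothetical; the sketch assumes the shared environment keeps whatever version was last tested, as in the proposal.

```python
from typing import Dict, FrozenSet, Optional, Set, Tuple

Versions = Dict[str, str]  # service name -> version (hypothetical names)

# Every exact version combination that has passed the shared end-to-end stage.
verified: Set[FrozenSet[Tuple[str, str]]] = set()


def record_e2e_pass(e2e_env: Versions) -> None:
    """Remember the exact combination that the end-to-end test just passed."""
    verified.add(frozenset(e2e_env.items()))


def production_is_verified(production: Versions) -> bool:
    """True only if production runs a combination that was tested as a whole."""
    return frozenset(production.items()) in verified


def drift(e2e_env: Versions, production: Versions) -> Dict[str, Tuple[Optional[str], str]]:
    """Services whose end-to-end version differs from production: {name: (e2e, prod)}."""
    return {s: (e2e_env.get(s), v) for s, v in production.items() if e2e_env.get(s) != v}


production = {"service-a": "1.0", "service-b": "1.0", "service-c": "1.0"}
e2e_env = dict(production)

# service-a 1.1 passes end to end, but the team decides not to release it.
e2e_env["service-a"] = "1.1"
record_e2e_pass(e2e_env)

# service-b 1.1 is then tested next to the unreleased service-a 1.1, passes, and is released.
e2e_env["service-b"] = "1.1"
record_e2e_pass(e2e_env)
production["service-b"] = "1.1"

print(production_is_verified(production))  # False: a 1.0 + b 1.1 + c 1.0 was never tested
print(drift(e2e_env, production))          # {'service-a': ('1.1', '1.0')}
```

Both releases passed the stage, yet the combination that actually runs in production was never tested as a whole.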

So what's a better way to do this?