I have some images for which I want to calculate the Minkowski (box-counting) dimension, to characterize how fractal each image is. Here are two example images:

10.jpg:

24.jpg:

I'm using the following code to calculate the fractal dimension:

    import numpy as np
    import scipy

    def rgb2gray(rgb):
        r, g, b = rgb[:,:,0], rgb[:,:,1], rgb[:,:,2]
        gray = 0.2989 * r + 0.5870 * g + 0.1140 * b
        return gray

    def fractal_dimension(Z, threshold=0.9):
        # Only for 2d image
        assert(len(Z.shape) == 2)

        # From https://github.com/rougier/numpy-100 (#87)
        def boxcount(Z, k):
            S = np.add.reduceat(
                np.add.reduceat(Z, np.arange(0, Z.shape[0], k), axis=0),
                                   np.arange(0, Z.shape[1], k), axis=1)

            # We count non-empty (0) and non-full boxes (k*k)
            return len(np.where((S > 0) & (S < k*k))[0])

        # Transform Z into a binary array
        Z = (Z < threshold)

        # Minimal dimension of image
        p = min(Z.shape)

        # Greatest power of 2 less than or equal to p
        n = 2**np.floor(np.log(p)/np.log(2))

        # Extract the exponent
        n = int(np.log(n)/np.log(2))

        # Build successive box sizes (from 2**n down to 2**1)
        sizes = 2**np.arange(n, 1, -1)

        # Actual box counting with decreasing size
        counts = []
        for size in sizes:
            counts.append(boxcount(Z, size))

        # Fit the successive log(sizes) with log (counts)
        coeffs = np.polyfit(np.log(sizes), np.log(counts), 1)
        return -coeffs[0]

    I = rgb2gray(scipy.misc.imread("24.jpg"))
    print("Minkowski–Bouligand dimension (computed): ", fractal_dimension(I))

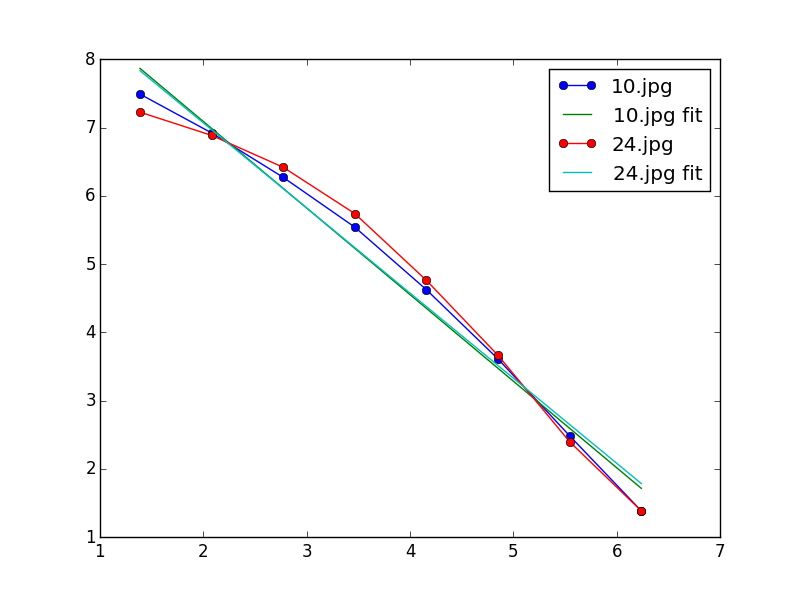
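
One thing I have not ruled out is the scale of the gray values relative to `threshold=0.9`. The sketch below is only a check, not part of my pipeline: it assumes the JPEG loads as 8-bit RGB (values 0 to 255) and uses a random array, `fake_rgb`, as a hypothetical stand-in for the real image. It compares how many pixels `Z < threshold` selects on the raw gray values versus gray values rescaled to 0..1.

```python
import numpy as np

def rgb2gray(rgb):
    # Same luminance weights as in my code above.
    r, g, b = rgb[:, :, 0], rgb[:, :, 1], rgb[:, :, 2]
    return 0.2989 * r + 0.5870 * g + 0.1140 * b

# Hypothetical stand-in for a loaded JPEG: 8-bit RGB, values 0..255.
rng = np.random.default_rng(0)
fake_rgb = rng.integers(0, 256, size=(64, 64, 3), dtype=np.uint8)

gray = rgb2gray(fake_rgb)
print("gray range:", gray.min(), gray.max())

# Fraction of pixels that the binarization step would mark as "set".
frac_raw = np.mean(gray < 0.9)             # threshold applied to 0..255 values
frac_scaled = np.mean(gray / 255.0 < 0.9)  # threshold applied to 0..1 values
print("selected (raw):   ", frac_raw)
print("selected (scaled):", frac_scaled)
```

If the real images behave like this stand-in, the binary array in my code would contain only near-black pixels, and whether that is the intended input for box counting is part of what I am unsure about.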

The literature I've read suggests that natural scenes (e.g. 24.jpg) are more fractal, and should therefore have a larger fractal dimension.

The results I get go in the opposite direction from what the literature suggests:

10.jpg: 1.259

24.jpg: 1.073

I would expect the fractal dimension of the natural image (24.jpg) to be larger than that of the urban one (10.jpg).

Am I calculating the value incorrectly in my code? Or am I just interpreting the results incorrectly?
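
To separate the two possibilities, one check I can think of is to run the same box-counting rule on a pattern whose dimension is known. The sketch below is self-contained and makes a few assumptions of its own: `sierpinski_mask` and `estimate_dimension` are hypothetical helper names, the test pattern is a Sierpinski triangle drawn on a 512 by 512 grid (known dimension log 3 / log 2 ≈ 1.585), and the mask is cast to integers before summing so the box sums are pixel counts.

```python
import numpy as np

def boxcount(mask, k):
    # Sum the mask over k-by-k boxes; cast to int so the sums are pixel counts.
    S = np.add.reduceat(
        np.add.reduceat(mask.astype(int), np.arange(0, mask.shape[0], k), axis=0),
        np.arange(0, mask.shape[1], k), axis=1)
    # Same rule as in my code: count boxes that are neither empty nor full.
    return np.count_nonzero((S > 0) & (S < k * k))

def sierpinski_mask(n):
    # Hypothetical test pattern: pixel (i, j) is set when i & j == 0,
    # which draws a Sierpinski triangle on a 2**n by 2**n grid.
    idx = np.arange(2 ** n)
    return (idx[:, None] & idx[None, :]) == 0

def estimate_dimension(mask):
    # Box sizes from 2**n down to 2**2, as in my fractal_dimension function.
    n = int(np.log2(min(mask.shape)))
    sizes = 2 ** np.arange(n, 1, -1)
    counts = [boxcount(mask, int(k)) for k in sizes]
    # Slope of log(count) against log(size); the dimension is its negative.
    slope = np.polyfit(np.log(sizes), np.log(counts), 1)[0]
    return -slope

sierpinski_dim = estimate_dimension(sierpinski_mask(9))
print("estimated:", sierpinski_dim)
print("expected: ", np.log(3) / np.log(2))
```

If the estimate matches the known value here, the counting and fitting steps are probably fine, and the question shifts to how the photographs are binarized and how the result should be interpreted.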