I've got a python 3.7 google cloud function integrating with google storage and google logging, so I've deployed a key file with the function and reference it using the GOOGLE_APPLICATION_CREDENTIALS environment variable. All works fine insomuch as my function does what it is supposed to and returns the proper outputs, etc., with the only caveat being that it is logging stuff at ERROR level that seem like they should be at INFO or lower. Or... maybe I'm missing something, and I'd rather not be missing something here.

Bottom line: I want clean logs such that errors/warnings are meaningful and I don't have to deal with noise.

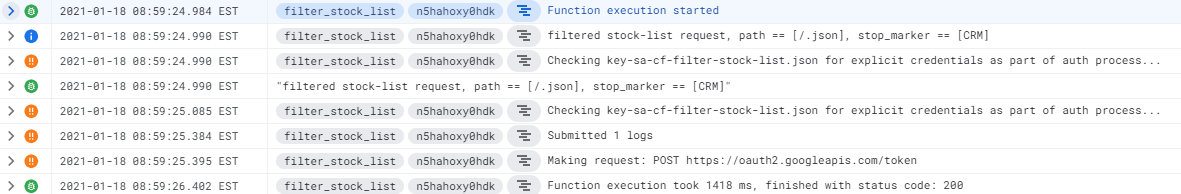

Here's an example of my log output:

You'll notice that I have my own logging appearing at the DEBUG level but right before and after it are seemingly innocuous log entries that are marked as ERROR. (And, yes, I know my own log entry is appearing 2x, once at debug, once at info - that's another question but lower in priority for me.)

Is this just normal for google cloud functions, or is there something I could do to correct this?

UPDATE:

Here is how I configure logging in my python code:

import logging

import logging.config

import google.cloud.logging

client = google.cloud.logging.Client()

client.get_default_handler()

client.setup_logging()

logging.getLogger().setLevel(logging.DEBUG)

Now, I noticed in local debugging (using the functions-framework) that I was also getting these same messages output just to the console without any log entry metadata, so tracing thru the google client code I noticed that the client code is simply using the python logging libraries, and while they are outputting these messages at DEBUG they are somehow bypassing the google logging hooks that I expected to be getting used here. Thus they are being output to stderr which of course google's logging interprets as an error.

This doesn't answer my own question so much as add some more context in hopes someone else has solved this.

UPDATE #2 - an ugly solution that begs for a better one

After doing some more digging through the google cloud client code, I have arrived at the answer, although I don't like it and don't like what I'm doing to "fix" this.

What's going on is that when the function is deployed and running in GCP, the python logging framework is somehow initialized with additional handlers. After calling client.setup_logging() if I print out the handlers attached to the root logger, I see:

root handlers=[<StreamHandler (NOTSET)>, <StreamHandler (WARNING)>, <CloudLoggingHandler (NOTSET)>]

Next, just to make things more fun, when the setup_logging() is called, there are some "excluded loggers" hard-coded into the client code to exclude them from using the CloudLoggingHandler (with a comment stating that without doing that we'd see infinite recursion). So anything logged using those google-specific loggers will always go to one or both of those StreamHandlers I captured above.

AND, the finale of explanation: the default stream used by StreamHandler is stderr, which is rightly (IMHO) interpreted by google's logging reporting as "error."

So with that explanation, I can at least hack around this with the following code in my own function class:

import logging

import google.cloud.logging

client = google.cloud.logging.Client()

client.setup_logging() # NOTE specifying logger_level here sets only the root logger which I'll reset below

logging.getLogger().setLevel(logging.INFO)

# Now I must configure my individual loggers to allow for debug

_LOGGER = logging.getLogger(__name__)

_LOGGER.setLevel(logging.DEBUG)

What this is doing is hiding the debug from those google loggers and then anything they would log at INFO or higher would still show up as errors (due to the stderr output), and then I must remember to configure my own loggers as DEBUG where I want that level of logging to take effect.

Now, why don't I like this? Why am I not just answering my own question here? Because this is a hacky solution requiring me to know the internals of google's client code and logging. It is not intuitive and disallows me to use the root logger for defaults I want to cascade throughout the software.

Thus, I'd still like to see if there is a better solution that I'm missing, and so I'm leaving the question open.

logging.basicConfigI am setting the log format to the followingu'{"message": "%(asctime)s %(module)s %(process)d %(thread)d %(message)s", "severity": "%(levelname)s", "time": "%(asctime)s"}'– Goblet