When you see:

    await Task.Yield();

you can think about it this way:

    await Task.Factory.StartNew(
        () => {},
        CancellationToken.None,
        TaskCreationOptions.None,
        SynchronizationContext.Current != null ?
            TaskScheduler.FromCurrentSynchronizationContext() :
            TaskScheduler.Current);

All this does is make sure the continuation will happen asynchronously in the future. By asynchronously I mean that execution control will return to the caller of the async method, and the continuation callback will not happen on the same stack frame.

When exactly and on what thread it will happen completely depends on the caller thread's synchronization context.
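To make the "return to the caller first, continue later" behavior concrete, here is a minimal console sketch. `QueueingContext` and `YieldDemo` are hypothetical names introduced only for illustration; the context stores posted callbacks until a hand-written loop runs them, standing in for a real message loop.

```csharp
#nullable enable
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

// Hypothetical context: stores posted callbacks instead of running them.
sealed class QueueingContext : SynchronizationContext
{
    public readonly Queue<Action> Pending = new Queue<Action>();

    public override void Post(SendOrPostCallback d, object? state)
        => Pending.Enqueue(() => d(state));
}

static class YieldDemo
{
    public static List<string> Run()
    {
        var log = new List<string>();
        var context = new QueueingContext();
        var previous = SynchronizationContext.Current;
        SynchronizationContext.SetSynchronizationContext(context);
        try
        {
            async Task WorkAsync()
            {
                log.Add("before yield");
                await Task.Yield();          // continuation is posted to the context
                log.Add("after yield");
            }

            Task work = WorkAsync();
            log.Add("caller resumed");       // reached before the continuation runs

            // Stand-in for a message loop: run whatever was posted.
            while (context.Pending.Count > 0)
                context.Pending.Dequeue()();

            work.GetAwaiter().GetResult();   // already completed; surfaces exceptions
        }
        finally
        {
            SynchronizationContext.SetSynchronizationContext(previous);
        }
        return log;
    }

    static void Main() => Console.WriteLine(string.Join(", ", Run()));
}
```

The log order is "before yield", "caller resumed", "after yield": the continuation only runs once the loop picks up the posted callback, and it does so on the same thread.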

For a UI thread, the continuation will happen upon some future iteration of the message loop, run by Application.Run (WinForms) or Dispatcher.Run (WPF). Internally, it comes down to the Win32 PostMessage API, which posts a custom message to the UI thread's message queue. The await continuation callback will be called when this message gets pumped and processed. You have no control over when exactly this is going to happen.

Besides, Windows has its own priorities for pumping messages: INFO: Window Message Priorities. The most relevant part:

> Under this scheme, prioritization can be considered tri-level. All posted messages are higher priority than user input messages because they reside in different queues. And all user input messages are higher priority than WM_PAINT and WM_TIMER messages.

So, if you use await Task.Yield() to yield to the message loop in an attempt to keep the UI responsive, you are actually at risk of obstructing the UI thread's message loop. Some pending user input messages, as well as WM_PAINT and WM_TIMER, have a lower priority than the posted continuation message. Thus, if you do await Task.Yield() in a tight loop, you may still block the UI.
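The starvation effect can be illustrated with a toy model. This is not the Win32 message loop; `ToyMessageLoop` and `StarvationDemo` are hypothetical types that only mimic the rule "posted messages are served before user input", with a work item that re-posts itself the way a tight `await Task.Yield()` loop effectively does.

```csharp
using System;
using System.Collections.Generic;

// Toy model of the priority rule: posted callbacks always beat input.
sealed class ToyMessageLoop
{
    private readonly Queue<Action> _posted = new Queue<Action>();  // "posted messages"
    private readonly Queue<string> _input = new Queue<string>();   // "user input messages"
    public readonly List<string> Log = new List<string>();

    public void Post(Action callback) => _posted.Enqueue(callback);
    public void EnqueueInput(string name) => _input.Enqueue(name);

    public void Run()
    {
        while (_posted.Count > 0 || _input.Count > 0)
        {
            if (_posted.Count > 0)
                _posted.Dequeue()();                    // posted work is served first
            else
                Log.Add("input: " + _input.Dequeue());  // input only when nothing is posted
        }
    }
}

static class StarvationDemo
{
    public static List<string> Run(int iterations)
    {
        var loop = new ToyMessageLoop();
        loop.EnqueueInput("mouse click");   // the user clicked before the work started

        int i = 0;
        void Step()
        {
            loop.Log.Add("work " + i);
            i++;
            if (i < iterations)
                loop.Post(Step);            // re-post, like the continuation of a yield loop
        }

        loop.Post(Step);
        loop.Run();
        return loop.Log;
    }

    static void Main() => Console.WriteLine(string.Join(", ", Run(3)));
}
```

In this model the pending click is handled only after every work item has finished, no matter how early it was queued.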

This is how it differs from the JavaScript setTimeout analogy you mentioned in the question. A setTimeout callback will be called after all user input messages have been processed by the browser's message pump.

So, await Task.Yield() is not good for doing background work on the UI thread. In fact, you very rarely need to run a background process on the UI thread, but sometimes you do, e.g. editor syntax highlighting, spell checking etc. In this case, use the framework's idle infrastructure.

E.g., with WPF you could do await Dispatcher.Yield(DispatcherPriority.ApplicationIdle):

    async Task DoUIThreadWorkAsync(CancellationToken token)
    {
        var i = 0;

        while (true)
        {
            token.ThrowIfCancellationRequested();

            await Dispatcher.Yield(DispatcherPriority.ApplicationIdle);

            // do the UI-related work item
            this.TextBlock.Text = "iteration " + i++;
        }
    }

For WinForms, you could use the Application.Idle event:

    // await IdleYield();

    public static Task IdleYield()
    {
        var idleTcs = new TaskCompletionSource<bool>();

        // subscribe to Application.Idle
        EventHandler handler = null;
        handler = (s, e) =>
        {
            // one-shot: unsubscribe, then complete the task
            Application.Idle -= handler;
            idleTcs.SetResult(true);
        };
        Application.Idle += handler;

        return idleTcs.Task;
    }

It is recommended that you do not exceed 50ms for each iteration of such a background operation running on the UI thread.
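One way to respect such a budget is to process queued work items only until a time limit expires, and then yield back to the idle infrastructure. `TimeSlicing.RunSlice` below is a hypothetical helper written for this illustration, not a framework API.

```csharp
using System;
using System.Collections.Generic;
using System.Diagnostics;

static class TimeSlicing
{
    // Runs queued work items until the queue is empty or the budget is used up.
    // Returns the number of items executed in this slice.
    // The budget is checked between items, so a single slow item can still overrun it.
    public static int RunSlice(Queue<Action> work, TimeSpan budget)
    {
        var stopwatch = Stopwatch.StartNew();
        int executed = 0;
        while (work.Count > 0 && stopwatch.Elapsed < budget)
        {
            work.Dequeue()();
            executed++;
        }
        return executed;
    }
}
```

In the WPF loop shown earlier, each iteration could call `TimeSlicing.RunSlice(work, TimeSpan.FromMilliseconds(50))` after `await Dispatcher.Yield(DispatcherPriority.ApplicationIdle)`, so that each slice stays short and the remaining items wait for the next idle period.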

For a non-UI thread with no synchronization context, await Task.Yield() just switches the continuation to a random pool thread. There is no guarantee that it will be a different thread from the current one; the only guarantee is that the continuation runs asynchronously. If the ThreadPool is starving, it may schedule the continuation onto the same thread.
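A small console sketch of this case, assuming a manually created thread that has no synchronization context and uses the default task scheduler. `PoolDemo` is a hypothetical name; the code records whether the method is running on a pool thread before and after the yield.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

static class PoolDemo
{
    public static (bool Before, bool After) Run()
    {
        bool before = false, after = false;
        using var done = new ManualResetEventSlim();

        // A dedicated thread: not a pool thread, no SynchronizationContext.
        var thread = new Thread(() =>
        {
            async Task WorkAsync()
            {
                before = Thread.CurrentThread.IsThreadPoolThread;
                await Task.Yield();   // continuation is queued to the thread pool
                after = Thread.CurrentThread.IsThreadPoolThread;
                done.Set();
            }

            _ = WorkAsync();          // returns at the first await; the thread then exits
        });

        thread.Start();
        done.Wait();                  // wait for the continuation to finish
        thread.Join();
        return (before, after);
    }

    static void Main()
    {
        var (before, after) = Run();
        Console.WriteLine($"pool thread before yield: {before}, after yield: {after}");
    }
}
```

Under these assumptions the method should start on the dedicated thread and resume on a pool thread. When the caller is itself a pool thread, the continuation may or may not land on a different one.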

In ASP.NET, doing await Task.Yield() doesn't make sense at all, except for the workaround mentioned in @StephenCleary's answer. Otherwise, it will only hurt the web app performance with a redundant thread switch.

So, is await Task.Yield() useful? IMO, not much. It can be used as a shortcut to run the continuation via SynchronizationContext.Post or ThreadPool.QueueUserWorkItem, if you really need to impose asynchrony upon a part of your method.

Regarding the books you quoted, in my opinion those approaches to using Task.Yield are wrong. I explained above why they're wrong for a UI thread. For a non-UI pool thread, there are simply no "other tasks in the thread to execute", unless you are running a custom task pump like Stephen Toub's AsyncPump.

Updated to answer the comment:

> ... how can it be asynchronous operation and stay in the same thread?..

As a simple example, take a WinForms app:

    async void Form_Load(object s, object e)
    {
        await Task.Yield();
        MessageBox.Show("Async message!");
    }

Form_Load will return to the caller (the WinForms framework code which fired the Load event), and then the message box will be shown asynchronously, upon some future iteration of the message loop run by Application.Run(). The continuation callback is queued with WinFormsSynchronizationContext.Post, which internally posts a private Windows message to the UI thread's message loop. The callback will be executed when this message gets pumped, still on the same thread.

In a console app, you can run a similar serializing loop with AsyncPump mentioned above.

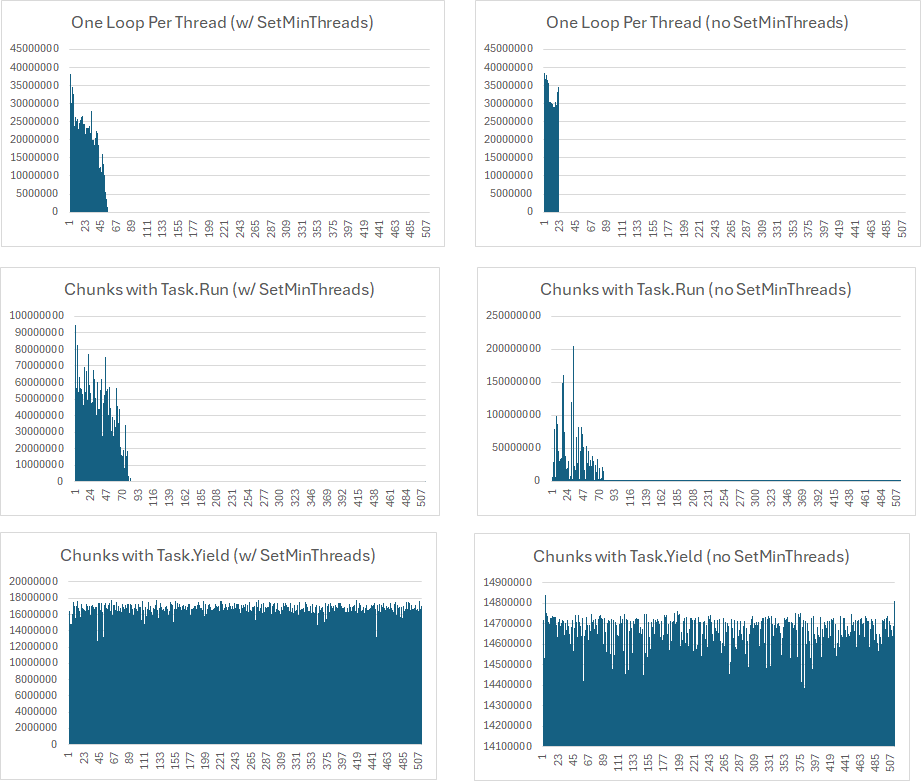
