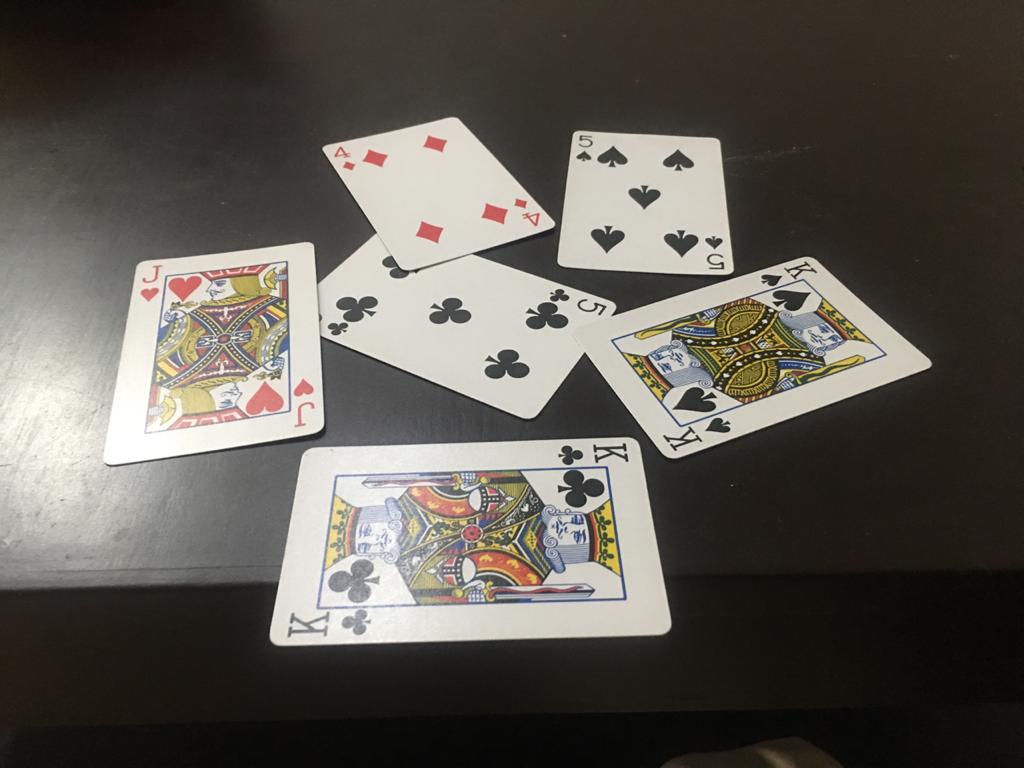

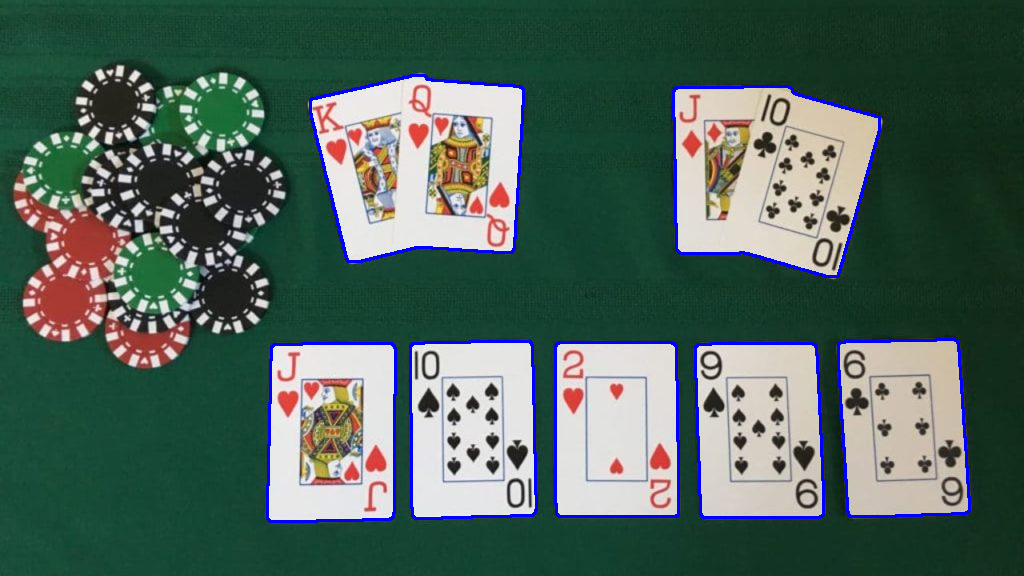

I am trying to detect playing cards and transform them to get a bird's-eye view of each card using Python and OpenCV. My code works fine for simple cases, but I want to handle more complex ones as well, and I'm having trouble finding the correct contours for the cards. Here's an attached image where I am trying to detect cards and draw contours:

My Code:

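```python
import cv2
import matplotlib.pyplot as plt

path1 = "F:\\ComputerVisionPrograms\\images\\cards4.jpeg"

g = cv2.imread(path1, 0)   # grayscale copy for edge detection
img = cv2.imread(path1)    # colour copy to draw on

edge = cv2.Canny(g, 50, 200)

# OpenCV 3.x returns (image, contours, hierarchy); 4.x returns (contours, hierarchy).
# Taking the last two values works with both versions.
c, h = cv2.findContours(edge, cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE)[-2:]

rect = []
for i in c:
    p = cv2.arcLength(i, True)
    ap = cv2.approxPolyDP(i, 0.02 * p, True)
    if len(ap) == 4:  # keep contours that simplify to a quadrilateral
        rect.append(i)

cv2.drawContours(img, rect, -1, (0, 255, 0), 3)

# OpenCV loads images as BGR; convert so matplotlib shows the correct colours
plt.imshow(cv2.cvtColor(img, cv2.COLOR_BGR2RGB))
plt.show()
```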

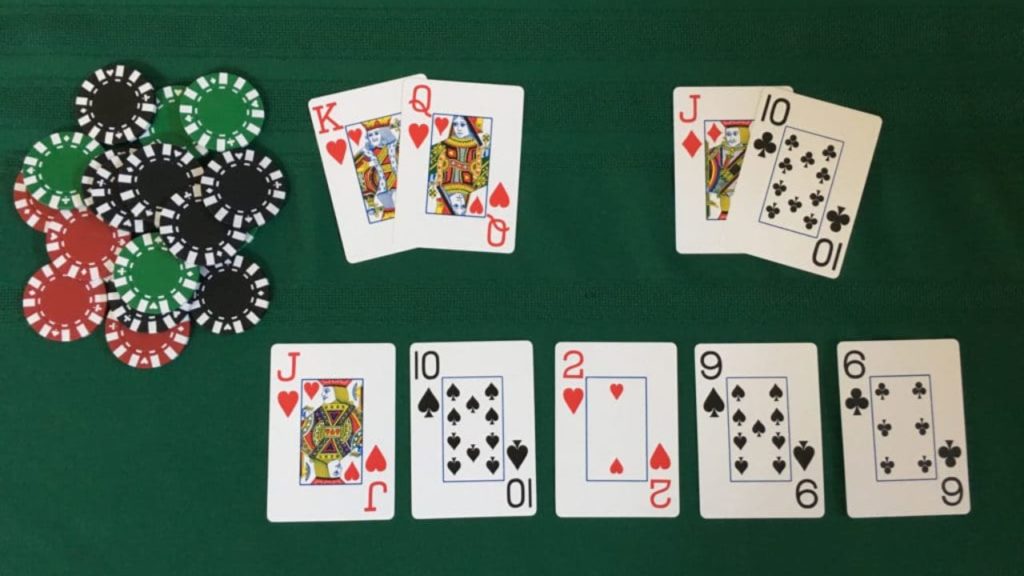

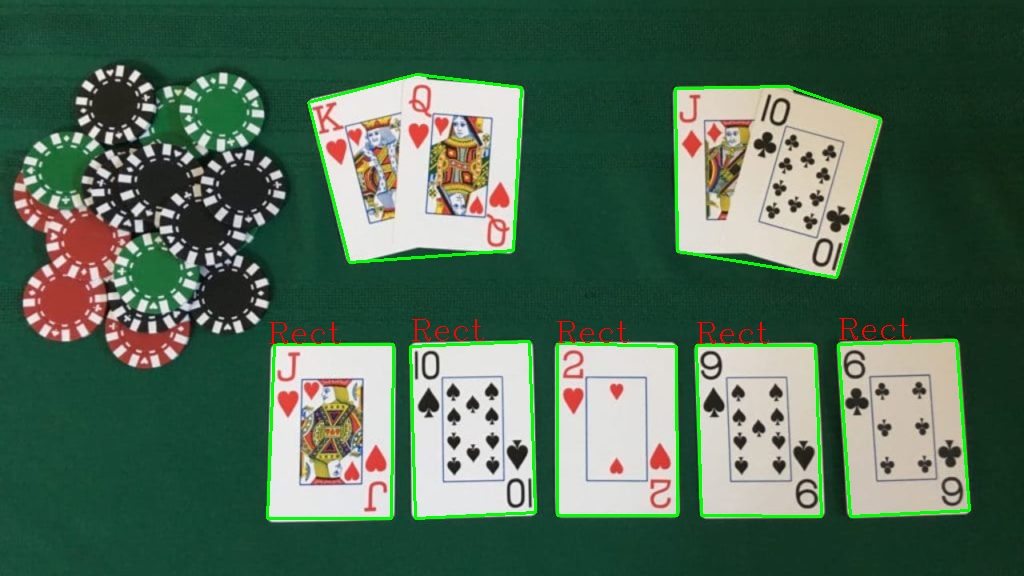

Result:

This is not what I wanted: I want only the rectangular cards to be selected, but because the cards occlude one another, I am not getting what I expected. I believe I need to apply morphological operations or other tricks to separate them, make the edges more prominent, or maybe something else entirely. I would really appreciate it if you could share your approach to this problem.

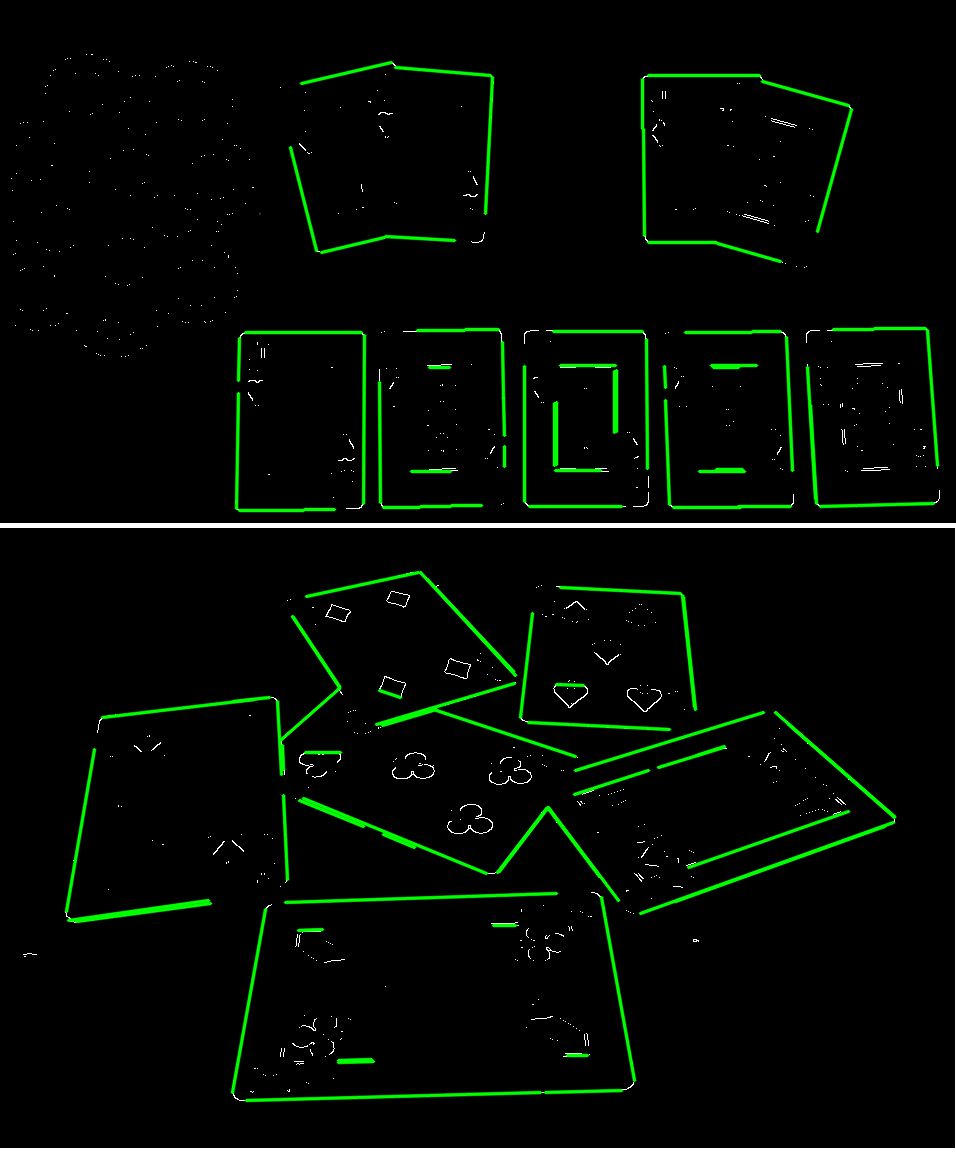

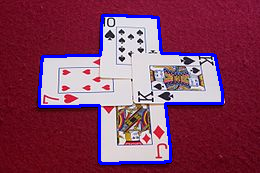

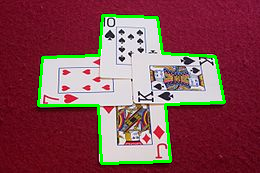

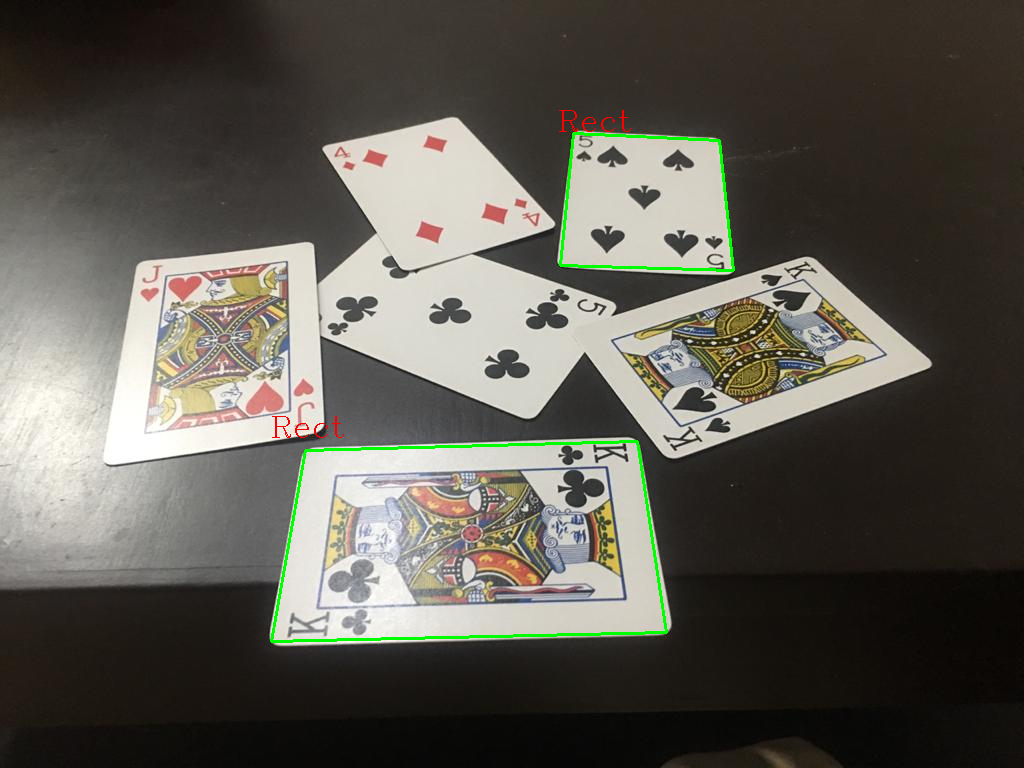

A few more examples requested by other fellows: