I am trying to understand an example snippet that makes use of the PyTorch transposed convolution function, with documentation here, where in the docs the author writes:

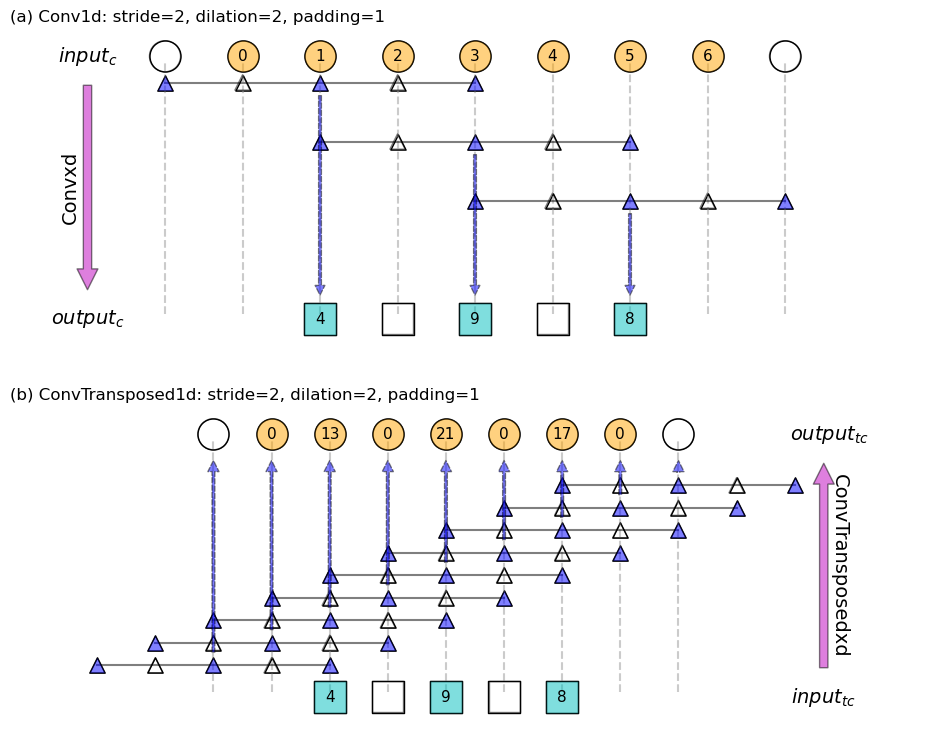

"The padding argument effectively adds dilation * (kernel_size - 1) - padding amount of zero padding to both sizes of the input."

Consider the snippet below where a sample image of shape [1, 1, 4, 4] containing all ones is input to a ConvTranspose2D operation with arguments stride=2 and padding=1 with a weight matrix of shape (1, 1, 4, 4) that has entries from a range between 1 and 16 (in this case dilation=1 and added_padding = 1*(4-1)-1 = 2)

sample_im = torch.ones(1, 1, 4, 4).cuda()

sample_deconv = nn.ConvTranspose2d(1, 1, 4, 2, 1, bias=False).cuda()

sample_deconv.weight = torch.nn.Parameter(

torch.tensor([[[[ 1., 2., 3., 4.],

[ 5., 6., 7., 8.],

[ 9., 10., 11., 12.],

[13., 14., 15., 16.]]]]).cuda())

Which yields:

>>> sample_deconv(sample_im)

tensor([[[[ 6., 12., 14., 12., 14., 12., 14., 7.],

[12., 24., 28., 24., 28., 24., 28., 14.],

[20., 40., 44., 40., 44., 40., 44., 22.],

[12., 24., 28., 24., 28., 24., 28., 14.],

[20., 40., 44., 40., 44., 40., 44., 22.],

[12., 24., 28., 24., 28., 24., 28., 14.],

[20., 40., 44., 40., 44., 40., 44., 22.],

[10., 20., 22., 20., 22., 20., 22., 11.]]]], device='cuda:0',

grad_fn=<CudnnConvolutionTransposeBackward>)

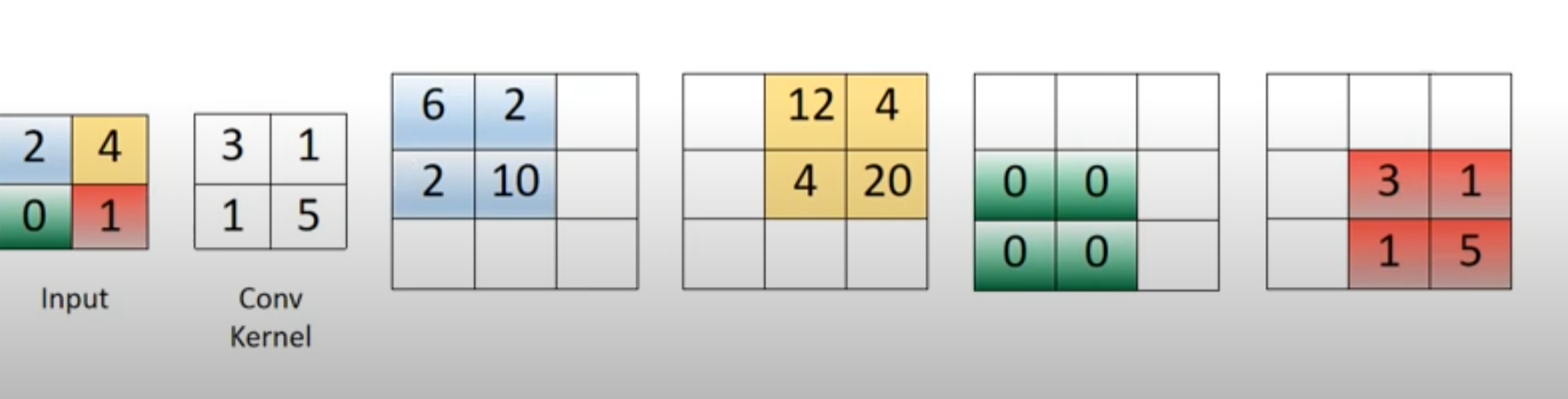

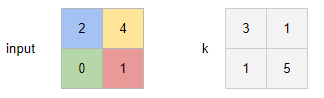

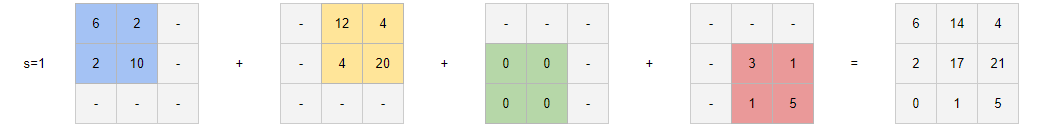

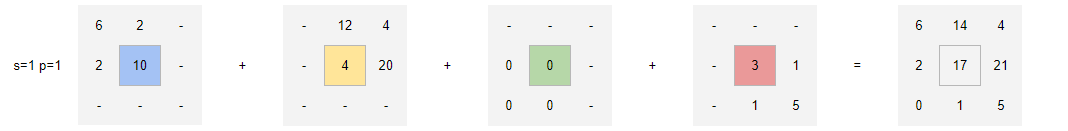

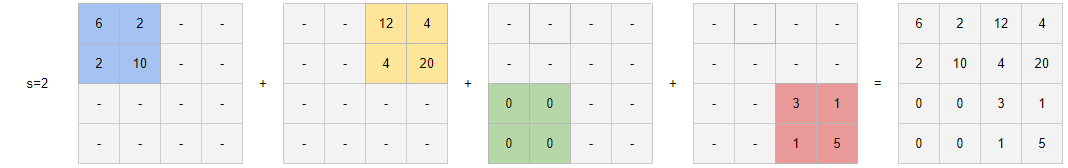

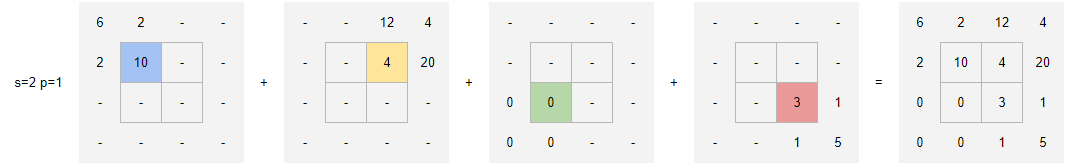

Now I have seen simple examples of transposed convolution without stride and padding. For instance, if the input is a 2x2 image [[2, 4], [0, 1]], and the convolutional filter with one output channel is [[3, 1], [1, 5]], then the resulting tensor of shape (1, 1, 3, 3) can be seen as the sum of the four colored matrices in the image below:

The problem is I can't seem to find examples that use strides and/or padding in the same visualization. As per my snippet, I am having a very difficult time understanding how the padding is applied to the sample image, or how the stride works to get this output. Any insights appreciated, even just understanding how the 6 in the (0,0) entry or the 12 in the (0,1) entry of the resulting matrix are computed would be very helpful.